Thursday, August 22nd 2019

AMD CEO Lisa Su: "CrossFire Isn't a Significant Focus"

AMD CEO Lisa Su answered some questions from the attending press at the Hot Chips conference. One of these concerned AMD's stance on CrossFire and whether or not it remains a focus for the company. CrossFire was once the poster child for a scalable consumer graphics future, with AMD even going as far as enabling mixed-GPU support (with debatable merits). Lisa Su came out and said what we have all seen happening in the background: "To be honest, the software is going faster than the hardware, I would say that CrossFire isn't a significant focus".

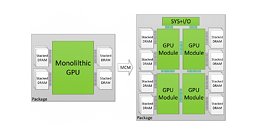

There isn't anything really new here; we've all seen the consumer GPU trends of late, with CrossFire barely deserving a mention (the same goes for the NVIDIA camp and its SLI technology, which has been cut from all but the higher-tier graphics cards). Support seems to be enabled as more of an afterthought than a "focus", and that's just the way things are. It seems that the old practice of buying a lower-tier GPU at launch and then adding a second graphics card further down the line to leapfrog the performance of higher-end, single-GPU solutions is going the way of the proverbial dodo - at least until an MCM (Multi-Chip-Module) approach sees the light of day, paired with a hardware syncing solution that does away with the software side of things. A true, integrated, software-blind multi-GPU solution built from two or more smaller dies, rather than a single monolithic die, seems to be the way to go. We'll see.

Source: TweakTown


88 Comments on AMD CEO Lisa Su: "CrossFire Isn't a Significant Focus"

When it works, scaling was always meh, with maybe 50-70% on average. A few titles did better; more did worse or didn't scale at all. Only if it is a NEED, say 4K with mid-range tier cards, can I see it being worthwhile.

In the words of Elsa....LET IT GO... LET IT GO! CANT HOLD IT BACK ANY MORE!!!!! :p

But but b...I can buy two cheap gpus to make a midrange card.... stooooooooopit..lol

;)

Radeon Instinct MI50 and MI60 need a bridge for Infinity Fabric Link to support high-bandwidth transfers (200GB/s+).

I run 2 Vega64s in CF and it's mostly fine. Newer driver features like Enhanced Sync or even Freesync cause stuttering though, so as long as you know about the limitations, it's okay. Using ReLive during Crossfire gameplay also reduces performance as it triggers adaptive GPU clocking, which is normally disabled in Crossfire to maximize XDMA performance.

Drivers after 19.7.1 have Crossfire profiles missing too.

Any other thoughts captain?

Remember when gaming was good? Pepperidge Farm remembers.

If anyone stands to gain from mGPU, it's AMD. If mGPU worked well, AMD could sell two 580s at a pop so someone could get 2080 performance, or two 5700s to get a 2080ti. nVidia probably doesn't care too much about it because they make way more money on 2080+ gpus than they would on the lower cards. I'd wager they make more money on a single 2080S than they do on two 2060S purchases. That's probably why they have been slowly raising the bar for the gpus that can do sli - 960, 1070, 2080.

With all that, both of the GPU makers say it isn't worth spending resources developing drivers and helping studios implement mGPU. Why? Because almost no one uses it.

"It may be free performance for gamers, but that doesn't mean it's free for developers to implement. As PCPer points out, 'Unlinked Explicit Multiadapter is also the bottom of three-tiers of developer hand-holding. You will not see any benefits at all, unless the game developer puts a lot of care in creating a load-balancing algorithm, and even more care in their QA department to make sure it works efficiently across arbitrary configurations.'"

And:

"Likewise, DirectX12 making it possible for Nvidia and AMD graphics cards to work together doesn't guarantee either company will happily support that functionality."

You seem to be the only one saying it was easy. The very article pointed out that it wouldn't be easy and that the game developer and hardware driver teams would still have to dedicate resources to it. Just because you decided something should be easy doesn't mean anyone lied to you, especially when they said from the beginning that it wouldn't be.

this is the article it is taken from

www.gpucheck.com/gpu/nvidia-geforce-rtx-2080-ti/intel-core-i7-7700k-4-20ghz/

Be realistic, please. Every single one of those games is playable at those framerates. Rephrase the question to "Is this 4K60 gaming?" and then the answer indeed is no.

In my opinion, CF & SLI are a waste of money, especially due to the higher power consumption, if games don't support these features.