Friday, December 23rd 2011

AMD Dual-GPU Radeon HD 7990 to Launch in Q1 2012, Packs 6 GB Memory

Even 12 months ago, an Intel Nehalem-powered gaming PC with 6 GB of system memory was considered high-end. Now there is already talk of a graphics card with that much memory taking shape. On Thursday this week, AMD launched its Radeon HD 7970 graphics card, which features its newest 28 nm "Tahiti" GPU and 3 GB of GDDR5 memory across a 384-bit wide memory interface. AMD has long planned a dual-GPU graphics card that uses two of these GPUs, giving you a CrossFire-on-a-stick solution. AMD codenamed this product "New Zealand". We are now learning that "New Zealand" will carry the intuitive-sounding market name Radeon HD 7990, and that it is headed for a Q1 2012 launch.

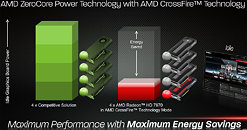

This means that the Radeon HD 7990 should arrive before April 2012. Tests show that Tahiti is more energy-efficient than the previous-generation "Cayman" GPU, even as it delivers higher performance. From a technical standpoint, a graphics card featuring two Tahiti GPUs running at specifications matching those of the single-GPU HD 7970 looks workable. Hence the talk of 6 GB of total graphics memory (3 GB per GPU system). One can also expect AMD's new ZeroCore technology to come to fruition. This technology powers the GPU down to near-zero draw when the monitor is blanked (idling), and in CrossFire setups it completely powers down every GPU other than the primary one whenever the system is not running graphics-heavy applications. This means that the idle, desktop, and Blu-ray playback power draw of the HD 7990 should be nearly equal to that of the HD 7970, which is already impressive.

Source:

Softpedia


74 Comments on AMD Dual-GPU Radeon HD 7990 to Launch in Q1 2012, Packs 6 GB Memory

In fact, I'll go check now :)

manufacturers bring equipment to the market

software houses write software that pushes the hardware to the limit

so manufacturers revise and improve their hardware

we all upgrade to the newest, latest-spec hardware, so the software houses produce more demanding software to take advantage of it, etc., and on and on the cycle goes

Would it climb to 1536 MB if fully used, or is there some left as a buffer? i.e. is that my card being maxed out?

Though Photoshop will surely love the obscene amount of memory...

Because it's only half true. They can market it that way just because the chips are there,

but people might think they can use up to 6GB of VRAM

(I know some AIBs have done it...)

I would expect performance to increase by at least 10% across the board with mature drivers, meaning 30-40% faster than a GTX 580 on all counts.

Slap two of these chips together without lowering core clocks, shaders, etc. à la GTX 590, and this dual-GPU card should be a monster and stomp the 590 by a LOT.

This card is nuts :laugh:

If what you said was correct, why can a sub $100 CPU and sub $150 GPU run any game today at max settings?

Like somebody already said, the software needs to catch up to the hardware. Anybody claiming otherwise is gaming too much in their sleep.

(I can max it out and then some, and I'm at 4.3 and using a 6870.)

And I am more interested to see the 7850!!!

3DMark 2011, although not a game, is a fine example of how heavy a full DirectX 11 game could be on GPUs. If all developers implemented all DX11 libraries, we'd need more than dual Tahiti to cope with the demand for hardware.

Metro 2033 itself is a GPU hog. Sure, some games are CPU-bound, but that's a development flaw.

And a Bulldozer is not an 8-core CPU; that's like saying mine is a 12-core CPU... it doesn't work that way.