Monday, March 16th 2015

More Radeon R9 390X Specs Leak: Close to 70% Faster than R9 290X

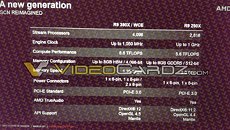

Earlier today, AMD reportedly showed its industry partners (likely add-in board partners) a presentation, which leaked to the web as photographs that look reasonably legitimate at first glance. If these numbers for AMD's upcoming flagship product, the Radeon R9 390X WCE (water-cooled edition), hold up, they could spell trouble for NVIDIA and its GeForce GTX TITAN X. To begin with, the slides confirm that the R9 390X will feature 4,096 stream processors, based on a more refined version of the Graphics Core Next architecture. The core ticks at speeds of up to 1050 MHz. The R9 390X could sell in two variants: an air-cooled one with tamer clock speeds, and the WCE variant, which comes with an AIO liquid-cooling solution that lets it throw everything else out of the window in a psychotic and murderous pursuit of performance.

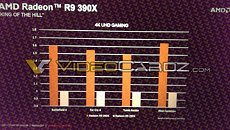

It's with memory that AMD once again appears to be an early adopter (its HD 4870 was the first card to use the faster GDDR5). The R9 390X features a 4096-bit wide HBM memory bus, holding up to 8 GB of memory. The memory is clocked at 1.25 GHz. Despite that low clock, the far wider bus means the actual memory bandwidth should end up much higher than that of the R9 290X's 512-bit GDDR5 memory running at 5.00 GHz. Power connectors will be the same combination as the previous generation (6-pin + 8-pin). What does this all boil down to? A claimed single-precision floating-point performance figure of 8.6 TFLOP/s. It will be interesting to see how NVIDIA's GM200 compares to that. AMD claims that the R9 390X will be 50-60% faster than the R9 290X in benchmarks such as Battlefield 4 and Far Cry 4. The expectations on NVIDIA's upcoming product are only bound to get higher.
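The leaked figures can be sanity-checked with some back-of-envelope arithmetic. The sketch below is not from AMD's slides; it assumes each GCN stream processor retires one fused multiply-add (2 FLOPs) per clock, and it treats the quoted memory clocks as effective per-pin data rates, which the leak does not spell out. The R9 290X inputs (2,816 stream processors at up to 1000 MHz) come from that card's published specifications, not from the leak.

```python
# Back-of-envelope check of the leaked R9 390X numbers.
# Assumptions (not stated in the leak): 2 FLOPs (one FMA) per stream
# processor per clock, and memory clocks read as per-pin data rates in Gbps.

def peak_fp32_tflops(stream_processors: int, clock_mhz: float) -> float:
    """Peak single-precision throughput in TFLOP/s."""
    return stream_processors * 2 * clock_mhz * 1e6 / 1e12

def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width times per-pin rate, over 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8

# R9 390X figures from the leaked slides.
r9_390x_tflops = peak_fp32_tflops(4096, 1050)
r9_390x_bw = memory_bandwidth_gbs(4096, 1.25)

# R9 290X figures from its public spec sheet (2816 SPs, 512-bit GDDR5 at 5.00 GHz).
r9_290x_tflops = peak_fp32_tflops(2816, 1000)
r9_290x_bw = memory_bandwidth_gbs(512, 5.0)

uplift = r9_390x_tflops / r9_290x_tflops - 1

print(f"R9 390X: {r9_390x_tflops:.1f} TFLOP/s, {r9_390x_bw:.0f} GB/s")
print(f"R9 290X: {r9_290x_tflops:.2f} TFLOP/s, {r9_290x_bw:.0f} GB/s")
print(f"Theoretical FP32 uplift: {uplift:.0%}")
```

Under these assumptions the 4,096 stream processors at 1050 MHz reproduce the claimed 8.6 TFLOP/s, the HBM bus works out to roughly double the R9 290X's bandwidth, and the raw compute gain of about 53% sits inside AMD's claimed 50-60% range.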

Source: VideoCardz

99 Comments on More Radeon R9 390X Specs Leak: Close to 70% Faster than R9 290X

1) I'm not seeing much bitching about price.

2) Head over to most Titan threads for a lesson in price bitchery.

Really, there are no double standards here; if anything, there's less vocal ranting than in the Titan threads. Go look. Don't make me go find quotes.

and maybe true :).

Well, I'm just curious now about these 8 GB cards and the WC editions...

open source != free to write

The vast majority of "serious" open-source code is, in fact, written by paid[1] developers working on it full-time.

So, making it open source doesn't mean they don't have to pay for it to be written (and AMD does hire quite a few developers to write FOSS code for it).

[1]and paid quite well at it, too.

The alternative here is to make the card run slow all the time even if the conditions are perfect for 24/7 boost clock speeds.

The solution is a practice that I believe most review websites already follow: simply play through a game and record clock speeds and temperatures over time. A good review would test real-world clock speeds even after the card has heated up.

Don't forget the 390 non-X. We don't have specs for that yet. Even then, a performance gap this big isn't unheard of, is it?

I do hope the WCE is reasonably priced. It's about a 4x step up from my 5870. $400-450 maybe, but I'm not about to blow $500+ on it.

NVIDIA's Maxwell doesn't support Tier 3 resource binding, and AMD doesn't support conservative rasterization Tier 1, so it will depend on whether these get implemented. Conservative rasterization most assuredly will, but not all DX features are automatically adopted in games (DX11.2 springs to mind).

Hatas gon hate. Cue Taylor Swift song, bros.

I wonder if the AIO cooling solution will get in the way of multi-card configs. I'm sure I won't be able to fit two separate 120 mm or 140 mm radiators in my current HAF-XB Radeon build...

What's weird is that one of those slides was leaked a couple of days ago, and people called it out as a fake because of a couple of typos:

Notice how "functionallity" was misspelled, and Direct X 12_Tier has a weird underscore.

Now, in this new leak, the typos have been corrected. Oh well, it might be that the slide was fixed between the time it was leaked and this new presentation... Kinda suspicious, though :wtf:

Source: www.guru3d.com/news-story/amd-radeon-390x-wce-water-cooled-edition.html