Friday, February 26th 2016

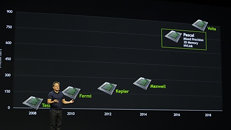

NVIDIA to Unveil "Pascal" at the 2016 Computex

NVIDIA is reportedly planning to unveil its next-generation GeForce GTX "Pascal" GPUs at the 2016 Computex show in Taipei, scheduled for early June. This unveiling doesn't necessarily mean market availability. SweClockers reports that problems, particularly related to NVIDIA supplier TSMC getting its 16 nm FinFET node up to speed, especially following the recent Taiwan earthquake, could delay market availability to late summer or even later. It remains to be seen if the "Pascal" architecture debuts as an all-mighty "GP100" chip, or a smaller, performance-segment "GP104" that will be peddled as enthusiast-segment on account of being faster than the current big chip, the GM200. NVIDIA's next-generation GeForce nomenclature will also be particularly interesting to look out for, given that the current lineup is already at the GTX 900 series.

Source:

SweClockers

97 Comments on NVIDIA to Unveil "Pascal" at the 2016 Computex

Nevertheless, Zynq is a hybrid FPGA+SoC, and if I'm not mistaken its much bigger brother, the Virtex FPGA, has also started shipping. FPGAs are probably second only to GPUs in sheer number of transistors.

I would also have thought people could think laterally and use package size dimensions to get an approximate size of the die, as shown at the beginning of some of Xilinx's promotional videos and product literature - which shows that the die is still comfortably larger than the ~100 mm² ARM chips currently in production at Samsung. Bear in mind the Zynq SKU shown below is one of the smaller-die UltraScale+ chips.
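The package-to-die estimate described above comes down to a simple scaling calculation. Here is a minimal sketch in Python, assuming the package edge length is known from a datasheet and that the package and die are both measured in pixels on the same image. The function name and every number in the example are hypothetical placeholders, not measurements of any Xilinx part.

```python
def estimate_die_area_mm2(package_edge_mm, package_edge_px, die_w_px, die_h_px):
    """Estimate die area by scaling pixel measurements to a known package size.

    All inputs are assumptions supplied by the reader: the package edge in mm
    (e.g. from a datasheet) and pixel measurements taken from one image.
    """
    if package_edge_px <= 0:
        raise ValueError("package_edge_px must be positive")
    mm_per_px = package_edge_mm / package_edge_px  # image scale factor
    return (die_w_px * mm_per_px) * (die_h_px * mm_per_px)


# Hypothetical example: a 31 mm package spanning 620 px, die measuring 240 x 260 px.
area = estimate_die_area_mm2(31.0, 620, 240, 260)
print(f"approximate die area: {area:.0f} mm^2")
```

The result is only as good as the image: perspective distortion and a heat spreader or lid hiding the bare die can both throw the estimate off.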

The sad truth is, nVidia has likely banked a crap ton of money by selling mid-range GPUs for $500 that would have normally sold for no more than $200 in the past. And when they finally have to release their high end chip, they sell them for $650+. AMD hasn't had this luxury, and we all know cash isn't something they have a lot of, so this gives nVidia a very nice advantage.

Polaris is expected to have "up to 18 billion transistors" where Pascal has about 17 billion.

I still think the only reason why Maxwell can best Fiji is because Maxwell's async compute is half software, half hardware, where AMD's is all hardware. Transistors spent on making async compute work in GCN were instead spent on increasing compute performance in Maxwell. It's not clear whether Pascal has a complete hardware implementation of async compute.

As with all multitasking, there is an overhead penalty. So long as you aren't using async compute (which not much software does, regrettably), Maxwell will come out ahead because everything is synchronous.

I think the billion transistor difference comes from two areas: 1) AMD is already familiar with HBM and interposers. They knew the exact limitations they were facing walking into the Polaris design so they could push the limit with little risk. 2) 14nm versus 16nm so more transistors can be packed into the same space.
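To put rough numbers on that, here is a small Python sketch. It uses the transistor counts quoted earlier in the thread (rumored figures, not confirmed specifications) and a deliberately naive density model that treats the node names as literal linear dimensions. That model is an assumption for illustration only: foundry node names are marketing labels and do not map directly onto real feature sizes, so the density figure is an idealized ceiling at best.

```python
# Rumored figures quoted earlier in the thread, not confirmed specifications.
POLARIS_TRANSISTORS = 18e9  # "up to" figure
PASCAL_TRANSISTORS = 17e9   # approximate figure


def relative_gain(a, b):
    """Fractional amount by which a exceeds b."""
    return (a - b) / b


def naive_density_ratio(larger_nm, smaller_nm):
    """Idealized area-density ratio, ASSUMING node names are literal linear sizes."""
    return (larger_nm / smaller_nm) ** 2


count_gain = relative_gain(POLARIS_TRANSISTORS, PASCAL_TRANSISTORS)
density_ratio = naive_density_ratio(16, 14)

print(f"transistor count advantage: {count_gain:.1%}")          # 5.9%
print(f"naive 14nm vs 16nm density ratio: {density_ratio:.2f}x")  # 1.31x
```

Under these assumptions the quoted count difference (about 6%) is far smaller than the idealized density gain, which suggests the node names alone would not pin down the gap either way.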

Knowing the experience Apple had with both processes, it seems rather likely that AMD's 14nm chips may run hotter than NVIDIA's 16nm chips. This likely translates to lower clocks but, with more transistors, more work can be accomplished per clock.
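The clocks-versus-transistors trade-off can be sketched with a back-of-the-envelope model. The Python below assumes throughput is simply proportional to transistor count times clock speed, which is a strong simplification (real performance depends on architecture, memory bandwidth, and how the transistors are spent). The transistor counts are the rumored figures from earlier in the thread, and the function name is hypothetical.

```python
def break_even_clock_ratio(transistors_a, transistors_b):
    """Clock of chip A relative to chip B at which both have equal throughput.

    ASSUMES throughput is proportional to transistors x clock, which is a
    rough illustrative model, not a real performance predictor.
    """
    return transistors_b / transistors_a


# Rumored counts from the thread: 18 billion (AMD) vs 17 billion (NVIDIA).
ratio = break_even_clock_ratio(18e9, 17e9)
print(f"the larger chip breaks even with clocks {1 - ratio:.1%} lower")  # 5.6%
```

In this toy model, the larger chip only comes out ahead if its clock deficit stays below that break-even figure.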

I think it ends up being very competitive between the two. If Samsung improved their process since Apple's contract (which they should have, right?), AMD could end up with a 5-15% advantage over NVIDIA.

I think people expecting a 2x jump will be disappointed. A small increase in performance (~20%) with a bigger reduction in power consumption would be more like it. And don't expect any of it to be cheap.

Also bear in mind that Intel has been making the cores smaller and increasing the size of the GPU with each iteration. Each generation is a tiny bit faster...and cheaper for Intel to produce.

In GPUs, the physical dimensions stay more or less the same (right now, limited by interposer).

AMD is not "hanging by a thread" in the graphics department. They have 20% market share in the discrete card market and 100% of the console market.

The console market could turn into a huge boon for AMD as more developers use async compute. Xbox One has 16-32 compute queues while the PlayStation 4 has 64. Rise of the Tomb Raider may be the only game to date that uses them for volumetric lighting. This is going to increase as more developers learn to use the ACEs. As these titles are ported to Windows, NVIDIA cards may come up lacking (depending on whether or not they moved async compute to the hardware in Pascal).

Then again, the reason why NVIDIA has 80% of the market while AMD doesn't is because of shady backroom deals. AMD getting the performance lead won't change that.

AMD's processors bested Intel processors from K6 to K8. Their market share grew during that period but they didn't even come close to overtaking Intel. It was later discovered Intel did shady dealings of their own (offering rebates to OEMs that refused to sell AMD processors) and AMD won a lawsuit that had Intel paying AMD.

It's all about brand recognition. People recognize Intel and, to a lesser extent, NVIDIA. Only tech junkies are aware of AMD. NVIDIA, like Intel, is in a better position to broker big deals with OEMs.

They have missed some pretty good opportunities. The Nano could have been great if they hadn't overpriced it (the Fury X too). The Nano at $450 at launch would have flown out the door. The 390 is a decent contender now, but now is too late. The 390 needed to be on the market 4 months sooner than it was, and cost $20 less than the 970, not $20 more. You don't gain market share by simply matching what your competitor has had on the market for a few months; you have to offer the consumer something worth switching to.

HD 7950 -> R9 280(X)

R9 290X has been out since 2013. GTX 970 didn't come for another year. 2014 and 2015 were crappy years for cards not because of what AMD and NVIDIA did but because both were stuck on TSMC 28nm. The only difference is that NVIDIA debuted a new architecture while AMD didn't do much of anything.

Fiji is an expensive chip. They couldn't sell Nano on the cheap because the chip itself is not cheap.

390 is effectively a 290 with clocks bumped and 8 GiB of VRAM (which only a handful of applications at ridiculous resolutions can even reach). 390, all things considered, is about on par with 290X which is only about a 13% difference. Not something to write home about.

AMD did have a superior product in K7 and K8 and were competitive during that era - and for certain weren't helped by Intel's predatory practices (nor were Cyrix, C&T, Intergraph, Seeq and a whole bunch of other companies). It is also a fact that AMD were incredibly slow to realize the potential of their own product. As early as 1998 there were doubts about the company's ability to fulfill contracts and supply the channel, and while the cross-licence agreement with Intel allowed AMD to outsource 20% of the x86 production, Jerry Sanders refused point blank to do so. By the time shortages were acute, the company poured funds they could ill afford to spend into developing Dresden's Fab 36 at breakneck speed and cost rather than just outsource production to Chartered Semi (which they eventually did way too late in the game) or UMC, or TSMC. AMD never took advantage of the third-party provision of the x86 agreement past 7% of production when sales were there for the taking. The hubris of Jerry Sanders and his influence on lapdog Ruiz was as true in the early years of the decade as it was when AMD's own ex-president and COO, Atiq Raza, reiterated the same thing in 2013.

As for the whole Nvidia/AMD debate, that is less about hardware than the entire package. Nearly twenty years ago ATI was content to just sell good hardware knowing that a good product sells itself - which was a truism back in the day when the people buying hardware were engineers for OEMs rather than consumers. Nvidia saw what SGI was achieving with a whole ecosystem (basically the same model that served IBM so well until Intel started dominating the big iron markets), allied with SGI - and then were gifted the pro graphics area in the lawsuit settlement between the two companies - and reasoned that there was no reason that they couldn't strengthen their own position in a similar manner. Cue 2002-2003, and the company began design of the G80, with a defined strategy of pro software (CUDA) and gaming (TWIMTBP). The company are still reaping the rewards of a strategy defined 15 years ago. Why do people still buy Nvidia products? Because they laid down the groundwork years ago and many people were brought up with the hardware and software - especially via boring OEM boxes and TWIMTBP splash screens at the start of games. AMD could have made massive inroads into that market, but shortsightedness in cancelling ATI's own GITG program basically put the company back to square one in customer awareness, and all because AMD couldn't see the benefit of a gaming development program or actively sponsoring OpenCL. Fast forward to the last couple of years, and the penny has finally dropped, but it is always tough to topple a market leader if that leader basically delivers - I'm talking about delivering to the vast majority of customers - OEMs and the average user that just uses the hardware and software, not a minority of enthusiasts whose presence barely registers outside of specialized sites like this.

Feel free to blame everything concerning AMD's failings on outside influences and big bads in the tech industry. The company has fostered exactly that image. I'm sure the previous cadre of deadwood in the board room collecting compensation packages for 10-14 years during AMD's slow decline appreciate having a built-in excuse for not having to perform. It's just a real pity that it's the enthusiast that pays for the laissez-faire attitude of a BoD that were content not to have to justify their positions.

If that changes I'll be more than happy to go with AMD.