Friday, March 18th 2016

NVIDIA's Next Flagship Graphics Cards will be the GeForce X80 Series

With the GeForce GTX 900 series, NVIDIA has exhausted its GeForce GTX nomenclature, according to a sensational scoop from the rumor mill. Instead of a GTX 1000 series, which would have one digit too many, the company is turning the page on the GeForce GTX brand altogether. The company's next-generation high-end graphics card series will be the GeForce X80 series. Based on the performance-segment "GP104" and high-end "GP100" chips, the GeForce X80 series will consist of the performance-segment GeForce X80, the high-end GeForce X80 Ti, and the enthusiast-segment GeForce X80 TITAN.

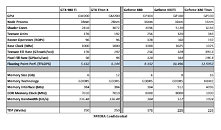

Based on the "Pascal" architecture, the GP104 silicon is expected to feature as many as 4,096 CUDA cores. It will also feature 256 TMUs, 128 ROPs, and a GDDR5X memory interface with 384 GB/s of memory bandwidth. 6 GB could be the standard memory amount. Its texture- and pixel-fill-rates are rated to be 33% higher than those of the GM200-based GeForce GTX TITAN X. The GP104 chip will be built on the 16 nm FinFET process, and its TDP is rated at 175 W.

Moving on, the GP100 is a whole different beast. It's built on the same 16 nm FinFET process as the GP104, and its TDP is rated at 225 W. A unique feature of this silicon is its memory controllers, which are rumored to support both GDDR5X and HBM2 memory interfaces. There could be two packages for the GP100 silicon, depending on the memory type. The GDDR5X package will look simpler, with a large pin-count to wire out to the external memory chips, while the HBM2 package will be larger, to house the HBM stacks on the package, much like AMD "Fiji." The GeForce X80 Ti and the X80 TITAN will hence be two significantly different products beyond their CUDA core counts and memory amounts.
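The 33% fill-rate claim for GP104 can be sanity-checked from the unit counts alone, since pixel fill-rate scales with ROPs × clock and texture fill-rate with TMUs × clock. This is a rough sketch that assumes GP104 and the TITAN X run at the same clock (the 1000 MHz placeholder cancels out in the ratio) and uses the TITAN X's known 96 ROPs and 192 TMUs; no clock speeds come from the rumor itself.

```python
# Rumored GP104 unit counts (from the article) versus the GM200-based
# GeForce GTX TITAN X (96 ROPs, 192 TMUs).
GP104 = {"rops": 128, "tmus": 256}
TITAN_X = {"rops": 96, "tmus": 192}


def fillrates(units, clock_mhz):
    """Return (pixel, texture) fill-rates in GPixel/s and GTexel/s."""
    pixel = units["rops"] * clock_mhz / 1000
    texel = units["tmus"] * clock_mhz / 1000
    return pixel, texel


def uplift(new, old, clock_mhz=1000):
    """Percentage gain of `new` over `old`, assuming both run at the same clock."""
    new_px, new_tx = fillrates(new, clock_mhz)
    old_px, old_tx = fillrates(old, clock_mhz)
    return (new_px / old_px - 1) * 100, (new_tx / old_tx - 1) * 100


px_gain, tx_gain = uplift(GP104, TITAN_X)
print(f"pixel +{px_gain:.0f}%, texture +{tx_gain:.0f}%")  # pixel +33%, texture +33%
```

Because both ratios are 4:3, the rumored figure only holds if GP104 clocks no lower than the TITAN X.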

The GP100 silicon physically features 6,144 CUDA cores, 384 TMUs, and 192 ROPs. On the X80 Ti, you'll get 5,120 CUDA cores, 320 TMUs, 160 ROPs, and a 512-bit wide GDDR5X memory interface holding 8 GB of memory, with a bandwidth of 512 GB/s. The X80 TITAN, on the other hand, enables all the CUDA cores, TMUs, and ROPs present on the silicon and adds a 4096-bit wide HBM2 memory interface holding 16 GB of memory, at a scorching 1 TB/s of memory bandwidth. The X80 Ti and the X80 TITAN double the pixel- and texture-fill-rates of the GTX 980 Ti and GTX TITAN X, respectively.
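The bandwidth figures follow the usual peak-bandwidth relation: bus width in bits times the per-pin data rate in Gbps, divided by 8 bits per byte. The sketch works backwards from the rumored numbers to the per-pin rates they imply. It assumes "1 TB/s" means 1,024 GB/s (taken as 1,000 GB/s, the rate would come out at about 1.95 Gbps); the 4096-bit HBM2 bus corresponds to four stacks of 1,024 bits each. The helper names are illustrative only.

```python
def bandwidth_gbs(bus_width_bits, data_rate_gbps):
    """Peak bandwidth in GB/s: bus width x per-pin data rate / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8


def implied_data_rate(bus_width_bits, bandwidth):
    """Per-pin data rate (Gbps) needed to reach `bandwidth` GB/s on a given bus."""
    return bandwidth * 8 / bus_width_bits


# X80 Ti: 512-bit GDDR5X at 512 GB/s (rumored)
print(implied_data_rate(512, 512))    # 8.0
# X80 TITAN: 4096-bit HBM2 at "1 TB/s", assumed here to be 1024 GB/s
print(implied_data_rate(4096, 1024))  # 2.0
# Forward check: four 1024-bit HBM2 stacks at 2 Gbps per pin
print(bandwidth_gbs(4 * 1024, 2.0))   # 1024.0
```

The much wider HBM2 bus is what lets it reach twice the bandwidth at a quarter of the per-pin rate.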

Source:

VideoCardz

180 Comments on NVIDIA's Next Flagship Graphics Cards will be the GeForce X80 Series

Let me correct that:

X80 - $1,000

X80 Ti - $1,500

X80 Titan - $3,000

After all, the Fury X manages to beat the TITAN X in TR at 4K even though it only has 4 GB of VRAM compared to the TITAN X's 12 GB.

So TR doesn't need 7.5GB of VRAM it will just use them if they are provided.

Rise of the Tomb Raider

Shadow of Mordor

GTA V

There might be other titles that also use more, however, these are the games I am interested in and have noticed use a lot of VRAM. :)

With DX12 games coming out, I am sure we will see even more VRAM used in the near future, in games like Deus Ex: Mankind Divided and so forth.

Then there is the modding community, which adds extra detail to textures, more objects, and more landscapes to games. I am sure that when Fallout 4's Creation Kit comes out, it will also push VRAM usage past 6 GB for some of us.

Things like anti-aliasing and resolution also come into play for people like me who don't like jaggies. The downside? More VRAM usage.

Then there are all the monitors and their refresh rates: 60 Hz, 75 Hz, 85 Hz, 100 Hz, 120 Hz, 144 Hz and up. I prefer mine stable at 120 FPS. If you run out of VRAM, you can be sure to see those numbers dip more often than you'd like... loosely translated: in-game stuttering.
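The higher the refresh rate, the smaller the time budget per frame, which is why a stall that goes unnoticed at 60 Hz can show up as a hitch at 120 Hz. A small sketch of that arithmetic follows; the 10 ms stall is a made-up figure for illustration, not a measurement, and treating any frame that overruns its budget as a visible hitch is a simplification.

```python
REFRESH_RATES_HZ = [60, 75, 85, 100, 120, 144]


def frame_budget_ms(hz):
    """Time available to render one frame at a given refresh rate, in ms."""
    return 1000 / hz


def is_visible_hitch(stall_ms, hz):
    """Simplified model: a stall is visible if it overruns one frame's budget."""
    return stall_ms > frame_budget_ms(hz)


for hz in REFRESH_RATES_HZ:
    print(f"{hz:>3} Hz -> {frame_budget_ms(hz):5.2f} ms per frame")

# Hypothetical 10 ms texture-upload stall
print(is_visible_hitch(10, 120))  # True  (budget is about 8.33 ms)
print(is_visible_hitch(10, 60))   # False (budget is about 16.67 ms)
```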

And last but not least, it's always a good thing to have a little extra headroom when it comes to VRAM to make things a little more future-proof, especially when you are someone like me who doesn't enjoy upgrading his GPU once a year and getting his wallet gouged while doing it.

Many factors are involved, more than people would like to realize. The performance impact translates into what people refer to as "stuttering": this happens when new textures have to be loaded into VRAM and older textures have to be removed. When there is enough VRAM headroom for all the textures, there is no problem, especially when you move back and forth between prior locations in the game world.
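The load-and-evict behaviour described here can be illustrated with a toy model. This is a minimal sketch that assumes a least-recently-used (LRU) eviction policy and made-up texture sizes; real engines use their own residency heuristics. Each upload on a miss stands in for a potential hitch, so walking back and forth between two areas under a tight budget keeps re-uploading the same textures, while a budget with headroom uploads each texture only once.

```python
from collections import OrderedDict


class VramCache:
    """Toy LRU texture cache with a fixed VRAM budget (sizes in MB)."""

    def __init__(self, budget_mb):
        self.budget_mb = budget_mb
        self.resident = OrderedDict()  # texture name -> size in MB, oldest first
        self.uploads = 0  # every upload is a potential stutter

    def used_mb(self):
        return sum(self.resident.values())

    def request(self, name, size_mb):
        if name in self.resident:
            self.resident.move_to_end(name)  # hit: mark as most recently used
            return
        # Miss: evict least recently used textures until the new one fits.
        while self.resident and self.used_mb() + size_mb > self.budget_mb:
            self.resident.popitem(last=False)
        self.resident[name] = size_mb
        self.uploads += 1


def walk(budget_mb, route, areas):
    """Visit areas in order and return how many texture uploads were needed."""
    cache = VramCache(budget_mb)
    for area in route:
        for tex, size in areas[area]:
            cache.request(tex, size)
    return cache.uploads


# Hypothetical areas, each needing 2,000 MB of textures
AREAS = {"A": [("a1", 1000), ("a2", 1000)], "B": [("b1", 1000), ("b2", 1000)]}
ROUTE = ["A", "B", "A", "B"]  # moving back and forth

print(walk(2000, ROUTE, AREAS))  # 8: every revisit re-uploads
print(walk(4000, ROUTE, AREAS))  # 4: enough headroom, each texture loaded once
```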

Note: other components also have to work in harmony with each other for everything to run smoothly. For example, a PC using an SSD won't struggle as much as one that still uses an HDD.

This is often referred to as a bottleneck.

I've tested this thoroughly in Killing Floor 2 while making a tweaker for it. With Texture Streaming enabled on a 4 GB graphics card, memory usage goes up to 2 GB with everything set to Ultra at 1080p. But if I disable Texture Streaming, memory usage goes to 3.9 GB, and the game doesn't look any better. So, there's that. If I had 12 GB, the game could fit all of it in memory. Would there be any actual benefit? Not really. Maybe less stuttering on systems with a crappy storage subsystem (a slow HDD). With an SSD or SSHD like mine, I frankly don't notice any difference.

I don't know how clever other engines are, but Texture Streaming does wonders when you have tons of textures but are limited by VRAM capacity.

If you want to target as many users as possible while still delivering high image quality, this is an excellent way to do it.
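One way to picture texture streaming is as fitting the resident detail levels into a VRAM budget. This is a minimal greedy sketch, not how any particular engine decides: it repeatedly drops the top mip level of whichever texture is currently largest until the total fits. Each dropped level quarters a texture's footprint; the smaller mips below the top level are ignored (a full mip chain adds about a third on top). The texture names and sizes are hypothetical.

```python
def resident_mb(base_mb, dropped_mips):
    """Size of a texture's top resident mip; each dropped level quarters it."""
    return base_mb / 4 ** dropped_mips


def fit_to_budget(textures, budget_mb, max_drop=3):
    """Greedy streaming sketch: drop top mips from the biggest texture until under budget.

    textures: dict of name -> full-resolution size in MB.
    Returns a dict of name -> number of dropped mip levels.
    """
    dropped = {name: 0 for name in textures}

    def total():
        return sum(resident_mb(textures[n], d) for n, d in dropped.items())

    while total() > budget_mb:
        candidates = [n for n in textures if dropped[n] < max_drop]
        if not candidates:
            break  # nothing left to shrink; budget cannot be met
        biggest = max(candidates, key=lambda n: resident_mb(textures[n], dropped[n]))
        dropped[biggest] += 1
    return dropped


# Hypothetical scene: 2,304 MB at full resolution, 1,536 MB budget
TEXTURES_MB = {"terrain": 1024, "character": 512, "props": 512, "sky": 256}
plan = fit_to_budget(TEXTURES_MB, budget_mb=1536)
print(plan)  # {'terrain': 1, 'character': 0, 'props': 0, 'sky': 0}
```

Dropping a single level from the largest texture is enough here, which mirrors the comment's point that streaming can cut memory use sharply without an obvious loss in image quality.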

VRAM is filled because it is possible, not because it is required. When you increase IQ, you decrease the GPU's ability to push out 120/144 FPS, which means the "stutter" is masked by the lower framerate. Frametimes are never stable, and neither is FPS. The real limitation is the GPU core, not the VRAM. Memory overclocking gains are always lower than core overclocking gains.

There are almost zero situations in which the GPU is out of horsepower before the VRAM starts to complain. High-end GPUs are balanced around core versus VRAM usage.

Now, the real issues from having "more GPU core horsepower" than the VRAM can work with can occur in SLI/CrossFire. But when you go into that realm, you are already saying goodbye to the lowest input lag numbers AND to a fully stutter-free gaming experience. GPUs in SLI are never in perfect sync 100% of the time. Any deviation from that sync will result in either a higher frametime OR higher input lag.

You can't just say 'a game can use 8 GB so I need to have 8 GB GPU's'.

From the review here on the Titan X

W1zzard's conclusion after testing

"Our results clearly show that 12 GB is overkill for the Titan X. Eight GB of memory would have been more than enough, and, in my opinion, future-proof. Even today, the 6 GB of the two-year-old Titan are too much as 4 GB is enough for all titles to date. Both the Xbox One and PS4 have 8 GB of VRAM of which 5 GB and 4.5 GB are, respectively, available to games, so I seriously doubt developers will exceed that amount any time soon.

Modern games use various memory allocation strategies, and these usually involve loading as much into VRAM as fits even if the texture might never or only rarely be used. Call of Duty: AW seems even worse as it keeps stuffing textures into the memory while you play and not as a level loads, without ever removing anything in the hope that it might need whatever it puts there at some point in the future, which it does not as the FPS on 3 GB cards would otherwise be seriously compromised."

www.techpowerup.com/reviews/NVIDIA/GeForce_GTX_Titan_X/33.html

Does COD AW use 7.3 GB of VRAM if available? Yes.

Does COD AW need 7.3 GB of VRAM? No.

Again, they will sell an incomplete, cut-down chip as the best gaming product (the X80 Ti is a scrapped chip with 5,120 cores!!! and cut-down GDDR5 memory too!!!) :mad: The TITAN is another class (for double-precision and CUDA use). I hope Polaris will be better. 12.6 TFLOPS on the TITAN sounds a little low relative to the predicted 300%, and 10.5 TFLOPS on the X80 Ti does not promise a 100% jump either. We will see; keep waiting and earning money! I hope they will be wise enough to quickly release a dual-GPU product.
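The TFLOPS figures quoted in this comment can be checked against the rumored core counts using the standard peak FP32 formula: CUDA cores × 2 FLOPs per clock (one fused multiply-add) × clock speed. The article gives no clocks, so this sketch derives the clock each figure implies; it is a back-of-the-envelope check, not a confirmed spec.

```python
def fp32_tflops(cuda_cores, clock_ghz):
    """Peak FP32 TFLOPS: each CUDA core retires one FMA (2 FLOPs) per clock."""
    return cuda_cores * 2 * clock_ghz / 1000


def implied_clock_ghz(cuda_cores, tflops):
    """Clock (GHz) needed to reach a quoted peak TFLOPS figure."""
    return tflops * 1000 / (cuda_cores * 2)


for name, cores, tflops in [("X80 TITAN", 6144, 12.6), ("X80 Ti", 5120, 10.5)]:
    print(f"{name}: {implied_clock_ghz(cores, tflops):.3f} GHz")
# X80 TITAN: 1.025 GHz
# X80 Ti: 1.025 GHz
```

Both quoted numbers imply the same clock of roughly 1.025 GHz, so the gap between them comes entirely from the 6,144 versus 5,120 core counts.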

Sorry for the rant.

Your position, however, is a lot different from saying bta or someone else on TPU made it up.

LOL @ VRAM discussion... SOME games will use more RAM for giggles and not show stuttering (until something else needs the space). BF4 did this... but make no mistake about it... there was stuttering with 2 GB cards at 1080p Ultra settings, regardless of whether it used 3.5 GB on a 4 GB card or 2.5 GB on a 2 GB card.

X80 = X = 10 = 1080 ;) so they thought X sounded "cooler" because marketing!

Time for a set of X80 Tis... :)

Leaks, PR, and NVIDIA have all said Pascal is bringing back compute performance for deep learning, which could limit all but the Titan GPU to GDDR5X. The reasoning is that the compute cards were all but confirmed to have HBM2, with a minimum of 16 GB; if that's the case, it could also mean the Titan will regain its double-duty classification with HBM2, leaving the rest with GDDR5X. This really isn't an issue, but I suppose people will argue otherwise.

The naming makes sense; the rest of it sounds a tad on the optimistic side.. but who knows.. he he..

6 gigs of memory is realistically "enough". The fact that some AMD cards have 8 is pure marketing hype.. no current game needs more than 4 gigs, and people who think otherwise are wrong..

I will be a bit pissed off if my current top-end cards become mere mid-range all in one go, but such is life.. :)

trog

It honestly looks like anyone could have typed this up in Excel and posted to Imgur, as the table is very generic and does not have indicators of Nvidia's more presentation-based "confidential" slides.

History of notable GPU naming schemes:

GeForce 3 Ti500 (October 2001)

GeForce FX 4800 (March 2003)

GeForce 6800 GT (June 2004)

GeForce GTX 680 (March 2012)

GeForce PX sounds more appropriate for Pascal, considering they already branded the automotive solution Drive PX 2. I would have no problem purchasing a GPU called "GeForce PX 4096," "GeForce PX 5120 Ti" or "GeForce PX Titan," where 4096 and 5120 indicate the number of shaders.

They could even do the same with Volta in 2018: GeForce VX, GeForce VX Ti, and GeForce VX Titan, etc.

Sorry, I just love how people get hung up on the name!

You must remember that marketing names these, and it is based on feel-good, not technical, reasons: whatever sells more! An X in front of anything sells!!!!!

But look at the bright side... you neuter yourself (limit FPS) so when you need the horsepower, you have some headroom built in from your curious methods. :p