Wednesday, May 11th 2016

AMD Pulls Radeon "Vega" Launch to October

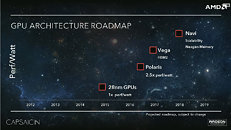

In the wake of NVIDIA's GeForce GTX 1080 and GTX 1070 graphics cards, which, if they live up to their launch marketing, could leave AMD's high-end lineup woefully outperformed, AMD has reportedly decided to pull the launch of its next big silicon, Vega10, forward from its scheduled early-2017 slot to October 2016. Vega10 is a successor to "Grenada," and will be built on the 5th generation Graphics CoreNext architecture (codenamed "Vega").

Vega10 will be a multi-chip module, and feature HBM2 memory. The 14 nm architecture will feature higher performance/Watt than even the upcoming "Polaris" architecture. "Vega10" isn't a successor to "Fiji," though. That honor is reserved for "Vega11." It is speculated that Vega10 will feature 4096 stream processors, and will power graphics cards that compete with the GTX 1080 and GTX 1070. Vega11, on the other hand, is expected to feature 6144 stream processors, and could take on the bigger GP100-based SKUs. Both Vega10 and Vega11 will feature 4096-bit HBM2 memory interfaces, but could differ in standard memory sizes (think 8 GB vs. 16 GB).

Source: 3DCenter.org

116 Comments on AMD Pulls Radeon "Vega" Launch to October

I believe we already know about an AMD graphics chip with 4096 SPs. And if the rumors are true, then it's a ~350mm^2 - 360mm^2 chip with HBM2 memory.

HBM1 - Fury X memory bandwidth: 512 GB/s

HBM2 - 1024 GB/s?

GDDR5X - GTX 1080 memory bandwidth: 320 GB/s

www.techpowerup.com/221720/amd-to-launch-radeon-r7-470-and-r9-480-at-computex

So they are only launching the 470 and 480.

Say 470 is at 380x levels, 480 at 390x levels.

Both slower than 1070

Sure, that Vega chip must come earlier.

PS

During a recent interview, Roy Taylor – AMD / RTG’s head executive for Alliances, Content and VR went to defend the decision to only launch mainstream and performance products based on the Polaris architecture. “The reason Polaris is a big deal, is because I believe we will be able to grow that TAM [total addressable market] significantly.”

With Nvidia launching the GeForce GTX 1070 and 1080 for the Enthusiast market, and preparing its third Pascal chip – the GP106 – to fight Polaris 10/11, such an approach might be a costly mistake if Radeon were the only product family in which the Polaris GPU architecture made an appearance. In the same interview, Roy said something interesting: “We’re going on the record right now to say Polaris will expand the TAM. Full stop.”

At the same time, we managed to learn that SONY ran into a roadblock with their original PlayStation 4 plans. Just like all the previous consoles (PSX to PSOne, PS2, PS3), the plan was to re-do the silicon with a ‘simple’ die shrink, moving its APU and GPU combination from 28nm to 14nm. While this move was ‘easy’ in the past – you pay for the tapeout and NRE (Non-Recurring Engineering), neither Microsoft nor Sony were ready to pay for the cost of moving from a planar transistor (28nm) to a FinFET transistor design (14nm).

This ‘die-shrink’ requires to re-develop the same chip again, with a cost measured in excess of a hundred million dollars (est. $120-220 million). With Sony PlayStation VR retail packaging being a mess of cables and what appears to be a second video processing console, in the spring of 2014 SONY pulled the trigger and informed AMD that they would like to adopt AMD’s upcoming 14nm FinFET product line, based on successor of low-power Puma (16h) CPU and Polaris GPU processor architecture.

The only mandate the company received was to keep the hardware changes invisible to the game developers, but that was also changed when Polaris 10 delivered a substantial performance improvement over the original hardware. The new 14nm FinFET APU consists out of eight x86 LP cores at 2.1 GHz (they’re not Zen nor Jaguar) and a Polaris GPU, operating on 15-20% faster clock than the original PS4.

According to sources in the know, the Polaris for PlayStation Neo is clocked at 911 MHz, up from 800 MHz on the PS4. The number of units should increase from the current 1152. Apparently, we might see a number higher than 1500, and lower than 2560 cores which are physically packed inside the Polaris 10 GPU i.e. Radeon R9 400 Series. Still, the number of units is larger than Polaris 11 (Radeon R7 400 Series), and the memory controller is 256-bit wide, with GDDR5 memory running higher than the current 1.38 GHz QDR. Given the recent developments with 20nm GDDR5 modules, we should see a 1.75 GHz QDR, 7 Gbps clock – resulting in 224 GB/s, almost a 20% boost.

Internally known as PlayStation Neo, the console should make its debut at the Tokyo Game Show, with availability coming as soon as Holiday Season 2016 – in time for the PlayStation VR headset.

vrworld.com/2016/05/11/amd-confirms-sony-playstation-neo-based-zen-polaris/

PPS

New technology never works perfectly at the start, and that is normal, but someone brave has to lead us to the light, and AMD has always done that.

This is what AMD is targeting with Polaris. It doesn't matter that it can't compete with the GTX 1080 or possibly the GTX 1070; it was never meant to go head to head with those two. But if one variant of the Polaris 10 chip can deliver performance north of the R9 390X/R9 Fury for, let's say, $200-250, they have already won in that segment. A cut version of Polaris 10 might even go sub-$200, and then there's Polaris 11 if they make discrete graphics out of it. Not to mention Apple is already going with Polaris GPUs in their future laptops.

Some tend to forget that people who buy cards for $500+ are in the vast minority. The market is blooming in the $100-$250 range.

Vega was always speculated to hit early Q1 of fiscal 2017, which starts for pretty much all companies in late 2016. It has nothing to do with Nvidia or with AMD starting to panic. It's proceeding as planned, and if they really moved it up, maybe the yields are better for both the chips and HBM2. Naturally AMD also knows that people want enthusiast-grade cards. They have not forgotten about them, and neither have AMD's AIBs, even though that segment doesn't make that much money in the big picture.

People are spelling doom and gloom, when this was never the case, not a single time in history. Nvidia and AMD are always neck and neck with their performance in comparable segments. I don't know why this should change now, unless they mess up really badly, but looking at the leaked benchmarks, Polaris is doing just fine for what it was meant to do, so really I don't know why people would worry at all.

The problem is that people who don't follow this specific tech industry don't know that Polaris was never meant as a top card, and they will be disappointed. But that happens all the time anyway, and the Nvidia "fanboys" are always louder. No wonder, when they hold ~80% market share; it's to be expected. But at the end of the day, AMD Radeon Group is doing just fine, financially as well as performance-wise, with their past, current and future cards, no doubt about it.

If AMD really had this planned all along, they would have mentioned it by now. Instead, they waited almost till the last moment and came up with the "expanding TAM for VR" that no one buys. Well, aside from fans, maybe.

I know it's hard to understand that most money comes from cheap(er) products, even if you have it in black and white on paper. Nobody aside from the internet fanboys gives a crap that brand A is 5-15 frames faster than brand B when both cost $700, exactly nobody. 80% of PCs sold are in the OEM, entry-level or mid-range market, and let's not mention laptops.

Just because you want something and you judge a brand by that, doesn't mean it's a vital product for them. If AMD removed the Fury X, their profits would marginally decrease, same with nvidia and their Titan X and 980Ti. They sell nothing compared to GTX 950, 960.

That is basically why AMD isn't doing what you claim they're doing. They're not dropping high-end, they're rushing Vega instead. It's just that probably after all these years in the red, they simply don't have the resources to go both after high-end and mid-range at the same time.

AMD are in court because Glofo promised that they had fixed their yield issues with 32nm

Globalfoundries, despite their rosy predictions for 28nm, had both ramp and yield issues.

Globalfoundries announced 20nmLPM with great fanfare and killed the process when everyone's back was turned

Remember when 14nm-XM was going to take the world by storm? GloFo doesn't remember.

Glofo is doing so well that the latest story doing the rounds could be a cut and paste from any of Glofo's previous exploits: AMD's Polaris fails clock speed validation at 850MHz

While AMD were quick to refute the claim they also claimed that Computex was going to see wall to wall Polaris. Guess we won't have long to wait to find out where the truth lies.

GDDR5X speed is relative: 1) the GTX 1080 only uses 10 Gbps GDDR5X, and the standard goes as far as 14 Gbps; 2) the GTX 1080 only uses a 256-bit bus. Other cards could use a 384-bit or even 512-bit bus, which would significantly increase the bandwidth even with 10 Gbps GDDR5X. HBM2 bandwidth isn't really that much greater in that case, but HBM2 still consumes less power, I guess.
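To put numbers on that comparison, here is a minimal sketch of the usual peak-bandwidth arithmetic: bus width in bits times the effective per-pin data rate in Gbit/s, divided by 8. The function name and the configuration labels are made up for illustration, and the per-pin rates assumed for HBM1 (1 Gbit/s) and HBM2 (2 Gbit/s) are the nominal maximums, not figures from the article.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak bandwidth in GB/s.

    bus_width_bits: total memory interface width in bits
    data_rate_gbps: effective data rate per pin in Gbit/s
    """
    return bus_width_bits * data_rate_gbps / 8


# Hypothetical configurations taken from the figures discussed in the thread.
configs = {
    "GTX 1080, 256-bit GDDR5X @ 10 Gbps": (256, 10.0),
    "384-bit GDDR5X @ 10 Gbps": (384, 10.0),
    "512-bit GDDR5X @ 10 Gbps": (512, 10.0),
    "256-bit GDDR5X @ 14 Gbps": (256, 14.0),
    "Fury X, 4096-bit HBM1 @ 1 Gbps (assumed rate)": (4096, 1.0),
    "4096-bit HBM2 @ 2 Gbps (assumed rate)": (4096, 2.0),
}

for name, (width, rate) in configs.items():
    print(f"{name}: {peak_bandwidth_gb_s(width, rate):.0f} GB/s")
```

Under these assumptions a 512-bit GDDR5X bus at 10 Gbps lands at 640 GB/s, which is above HBM1 but still short of a four-stack HBM2 setup at 1024 GB/s.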

Must be my OCD, because when you "pull" something, it is cancelled, not moved forward. :shadedshu:

www.techpowerup.com/222294/nvidia-geforce-gtx-1080-specifications-released

E.g. Fury release boosted 3xx sales, etc.

Whether AMD 470/480 would sell well, if there is nothing to take on at least 1070, uhm, who knows.

Will depend on reviewers, really. If they focus on "OMG, so slow", nope. If on "OMG, amazing value", possibly.

It's a possibility, yes. But that possibility depends on the process itself being mature enough to mitigate leakage and stuff. If that's not up to par, you won't be able to jack the clock up. And at this point, we simply don't know. We know it's possible, but we're simply not sure.