Wednesday, May 11th 2016

AMD Pulls Radeon "Vega" Launch to October

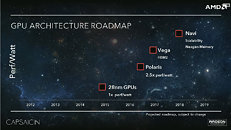

In the wake of NVIDIA's GeForce GTX 1080 and GTX 1070 graphics cards, which, if they live up to their launch marketing, could leave AMD's high-end lineup woefully outperformed, AMD has reportedly decided to pull the launch of its next big silicon, Vega10, forward from its scheduled early-2017 slot to October 2016. Vega10 is a successor to "Grenada," and will be built on the 5th generation Graphics CoreNext architecture (codenamed "Vega").

Vega10 will be a multi-chip module, and feature HBM2 memory. The 14 nm architecture will feature higher performance/Watt than even the upcoming "Polaris" architecture. "Vega10" isn't a successor to "Fiji," though. That honor is reserved for "Vega11." It is speculated that Vega10 will feature 4096 stream processors, and will power graphics cards that compete with the GTX 1080 and GTX 1070. Vega11, on the other hand, is expected to feature 6144 stream processors, and could take on the bigger GP100-based SKUs. Both Vega10 and Vega11 will feature 4096-bit HBM2 memory interfaces, but could differ in standard memory sizes (think 8 GB vs. 16 GB).

Source: 3DCenter.org

116 Comments on AMD Pulls Radeon "Vega" Launch to October

Basically, the only new thing is doubling the read/write capability, but even that has been done before. The rest is just refinements on top of GDDR5 (improved efficiency).

It does benefit Nvidia, because fewer traces on the PCB are not only cheaper to manufacture, but cheaper to test as well. You can expect part of those savings to be passed on to the customers as well.

While I don't have numbers on how much you can save with a narrower bus, just look at how long the mid-range cards have lived without 256-bit buses. I was expecting them to have transitioned 5 years ago and it still hasn't happened. I had one on my GTX 460, and the GTX 760 was also on a 256-bit bus. But the 660 (Ti) and 960 took a more conservative approach. This feature seems to get the axe as often as possible...

And to address your original dilemma ("I don't quite understand the point of GDDR5X") - it's not about cost. If you want 10Gbps today, only GDDR5X can deliver (HBM does better, but is limited to 4GB).

What you're saying is like the old joke: what's heavier, 1 kg of lead or 1 kg of feathers?

For a bit of perspective, AMD bought ATI for $5.4bn, and now the whole company is worth $3bn (and bleeding non-stop).

You obviously didn't understand what was said... :slap: