Friday, July 15th 2016

SK Hynix to Ship HBM2 Memory by Q3-2016

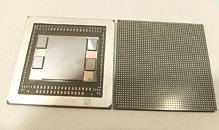

Korean memory and NAND flash giant SK Hynix announced that it will have HBM2 memory ready for order within Q3-2016 (July-September). The company will ship 4 gigabyte HBM2 stacks in the 4 Hi-stack (4-die stack) form-factor, in two speeds: 2.00 Gbps (256 GB/s per stack), bearing model number H5VR32ESM4H-20C; and 1.60 Gbps (204 GB/s per stack), bearing model number H5VR32ESM4H-12C. With four such stacks over a 4096-bit HBM2 interface, graphics cards with 16 GB of total memory can be built.
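The per-stack figures follow from the per-pin data rate multiplied by the interface width. A minimal sketch, assuming a 1024-bit interface per stack (four stacks making up the 4096-bit bus) and ignoring real-world efficiency losses; the function names are illustrative only:

```python
# Sketch: peak HBM2 bandwidth from the per-pin data rate.
# Assumption: each HBM2 stack exposes a 1024-bit interface
# (four stacks = the 4096-bit interface mentioned in the article).
BITS_PER_STACK = 1024


def stack_bandwidth_gbs(pin_rate_gbps: float, bus_bits: int = BITS_PER_STACK) -> float:
    """Peak bandwidth in GB/s: per-pin rate (Gb/s) x bus width (bits) / 8 bits per byte."""
    return pin_rate_gbps * bus_bits / 8


def card_totals(pin_rate_gbps: float, stacks: int = 4, gb_per_stack: int = 4) -> tuple[float, int]:
    """Return (total bandwidth in GB/s, total capacity in GB) for a multi-stack card."""
    return stacks * stack_bandwidth_gbs(pin_rate_gbps), stacks * gb_per_stack


for part, rate in [("H5VR32ESM4H-20C", 2.0), ("H5VR32ESM4H-12C", 1.6)]:
    bw, cap = card_totals(rate)
    print(f"{part}: {stack_bandwidth_gbs(rate):.1f} GB/s per stack, "
          f"{bw:.1f} GB/s and {cap} GB with four stacks")
```

Under these assumptions, four 2.00 Gbps stacks give 1024 GB/s (about 1 TB/s) and 16 GB; four 1.60 Gbps stacks give 819.2 GB/s and 16 GB.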

Source: SK Hynix Q3 Catalog

77 Comments on SK Hynix to Ship HBM2 Memory by Q3-2016

Because sure, if you look here:

www.techpowerup.com/reviews/NVIDIA/GeForce_GTX_1070/27.html

and here:

www.techpowerup.com/reviews/NVIDIA/GeForce_GTX_1080/30.html

We see the GTX1080 having 64 GB/s more bandwidth (320 GB/s vs. 256 GB/s), but in performance the memory difference does not seem to matter, and in the end the GTX1080 does lose out to the R9 295X2 (if you scroll down to that benchmark).

If bandwidth were really holding GPUs back already, that shouldn't be the case, right?

Sure, there are other advantages (smaller, cooler, cheaper GPUs), but in terms of performance it does not seem to change the game at all.

Like I said, we are entering an era of GPUs that are about to offer pure performance for 4K and even higher, and that is where the need for real bandwidth kicks in. You can't get 1000 GB/s out of GDDR5X without a minimum of 16 or even more chips on one PCB. HBM2 offers more on PAPER than GDDR5X does at the moment.
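How many GDDR5X chips a 1 TB/s (1000 GB/s) card would need depends on the per-chip data rate. A minimal sketch, assuming each GDDR5X chip uses a 32-bit interface; the data rates in the loop are example values, not figures from the article, and `chips_needed` is a hypothetical helper:

```python
import math

# Sketch: how many GDDR5X chips a board would need to reach a target bandwidth.
# Assumption: each GDDR5X chip uses a 32-bit interface; the per-pin data
# rates below are example values only.
CHIP_BUS_BITS = 32


def chips_needed(target_gbs: float, pin_rate_gbps: float, chip_bits: int = CHIP_BUS_BITS) -> int:
    """Smallest whole number of chips whose combined peak bandwidth meets the target."""
    per_chip_gbs = pin_rate_gbps * chip_bits / 8  # GB/s contributed by one chip
    return math.ceil(target_gbs / per_chip_gbs)


for rate in (10, 12, 16):
    print(f"{rate} Gbps: {chips_needed(1000, rate)} chips for 1000 GB/s")
```

Under these assumptions, it takes 25 chips at 10 Gbps (the rate the GTX1080 uses), 21 at 12 Gbps, and 16 at 16 Gbps, which would mean a 512-bit bus even in the best case.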

wccftech.com/asus-radeon-r9-fury-overclocked-10-ghz-hbm-1400-mhz-gpu-clock-fully-unlocked-fury-features-1-tbs-bandwidth-ln2/

This dude OC'ed that HBM up to 1 GHz, which offered roughly 1 TB/s of memory bandwidth; however, the Fury X chip itself isn't strong enough to fully utilize that 1 TB/s at all. You need a GPU that can scale along with that memory. Both Vega and Pascal (high end) will carry HBM2, which should be more than enough bandwidth for both GPUs.
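The "roughly 1 TB/s" figure can be checked from the memory clock. A minimal sketch, assuming first-generation HBM on Fiji (Fury / Fury X) runs a 4096-bit interface at double data rate (two transfers per clock) with a stock clock of 500 MHz; `hbm_bandwidth_gbs` is an illustrative name:

```python
# Sketch: first-generation HBM bandwidth on Fiji (Fury / Fury X) from the memory clock.
# Assumptions: 4096-bit interface and double data rate (two transfers per clock).
BUS_BITS = 4096
TRANSFERS_PER_CLOCK = 2


def hbm_bandwidth_gbs(clock_mhz: float, bus_bits: int = BUS_BITS) -> float:
    """Peak bandwidth in GB/s for a DDR memory interface at the given clock."""
    transfers_per_sec = clock_mhz * 1e6 * TRANSFERS_PER_CLOCK
    return transfers_per_sec * bus_bits / 8 / 1e9


print(hbm_bandwidth_gbs(500))   # 512.0 (stock 500 MHz)
print(hbm_bandwidth_gbs(1000))  # 1024.0 (the 1 GHz overclock)
```

Doubling the memory clock doubles peak bandwidth, from 512 GB/s to 1024 GB/s, which is where the roughly 1 TB/s comes from.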

The same goes for system memory, for example on AMD systems: the CPU isn't able to make use of memory that runs beyond 1866 or 2000 MHz, so putting in DDR3 at 2400 MHz isn't going to offer much.

Case in point is the GTX1080, which does have GDDR5X but does not need it; it's more marketing than anything else, same as HBM on the Fury X.

And again, the R9 295X2 with its GDDR5 puts out higher fps, which makes me believe there is still quite some headroom left in GDDR5.

But yeah, why wait until it runs into those constraints? Same with PCI-E slots and the bandwidth headroom they already offer.

GDDR5X and HBM offer lower latency and lower power usage, making cards in general more efficient. And lower power usage means more headroom for the GPU to be clocked higher.

And you forget one crucial thing: the enterprise market is where it all happens, and where the massive bandwidth of, for example, PCI Express 3.0 is welcomed. We consumers don't drive multiple NICs and hardware RAID controllers loaded with up to 32 SSDs at the same time.
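To put that PCI Express 3.0 bandwidth in numbers: PCIe 3.0 signals at 8 GT/s per lane and uses 128b/130b line encoding. A minimal sketch of the resulting per-direction bandwidth; packet and protocol overhead are deliberately ignored, so these are upper bounds, and `pcie3_bandwidth_gbs` is an illustrative name:

```python
# Sketch: usable PCI Express 3.0 bandwidth per direction.
# PCIe 3.0 signals at 8 GT/s per lane and uses 128b/130b line encoding;
# packet/protocol overhead is ignored, so real throughput is lower.
GT_PER_S = 8.0
ENCODING_EFFICIENCY = 128 / 130


def pcie3_bandwidth_gbs(lanes: int) -> float:
    """Approximate one-direction bandwidth in GB/s for a PCIe 3.0 link."""
    return GT_PER_S * ENCODING_EFFICIENCY * lanes / 8


for lanes in (1, 4, 8, 16):
    print(f"x{lanes}: {pcie3_bandwidth_gbs(lanes):.2f} GB/s")
```

A single lane comes to about 0.98 GB/s and an x16 slot to about 15.75 GB/s per direction, headroom that a desktop graphics card rarely saturates but that a server full of NICs and RAID controllers can put to use.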

On topic: IMO, HBM2 will be left for the next-gen cards by both companies. Using HBM2 on anything other than the Titan P would be a waste.

I would be thrilled if they had basically a Xeon D on a board twice the size of a Raspberry Pi. Now that would be impressive.

There's some computing work out there that does require massive amounts of memory (e.g. 3D scanning), and having that much ridiculously fast memory could translate into near-instantaneous progress. Granted, there aren't many buyers for specialized hardware like that.

I could only see HBM used on-die for high-performance embedded solutions, for example video game consoles, home theater devices, phones, and tablets. You know, places where memory usually isn't expandable.

~2 GB/s, what I described would be in the neighborhood of 26 TB/s (and that's single channel). They really aren't comparable. That said, you'd still need those NVMe storage devices to unload the data from the HBM.

And is that really a liquid cooler on the R9 in testing, vs. an FE? lol. Yeah, not very fair on that front either. Cooling = performance. At the end, the 1080 is at 78°C, meaning it's starting to throttle. With the ACX 3.0, it wouldn't even be close to throttling, while that R9 with extra cooling is running very cool.

BF3, 2560x1440:

GTX1080: 138 fps

R9 295X2: 151 fps

And liquid cooling has nothing to do with it; this was a discussion about whether GDDR5X or HBM gives a performance increase atm or not... pay attention pls.

That doesn't compute, because in the link I posted, it did not do better, especially in games like The Witcher 3 at 4K with real HD graphics. The 1080 whomped it like a red-headed stepchild.

Regardless of what the discussion is about, if someone posts BS, I'm going to call them on it. Don't get mad now. Don't post nonsense and bad benchmarks pls.

Compare cards with similar cooling options or don't. I mean, you do know what throttling is, right?

If I say, for example, that the Honda S2000 has better windows than the Bugatti Veyron...

Are you going to say that's not true because the Veyron is faster?

You seem unable to grasp the simple discussion about the need for GDDR5X/HBM memory vs. standard GDDR5... which I find amazing. Either that, or you need to work on your reading comprehension. Oh well.

PS:

You call TechPowerUp's own benchmarks "nonsense" and "bad"?

Why are you even on this website then?

:)