Monday, July 23rd 2018

Top Three Intel 9th Generation Core Parts Detailed

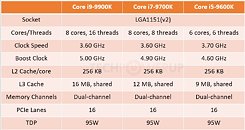

Intel is putting the finishing touches on its 9th generation Core processor family, which will see the introduction of an 8-core part to the company's LGA115x mainstream desktop (MSDT) platform. The company is also making some branding changes. The Core i9 brand, which is being introduced to MSDT, denotes 8-core/16-thread processors. The Core i7 brand is relegated to 8-core/8-thread (more cores but fewer threads than the current 6-core/12-thread Core i7 parts). The Core i5 brand is unchanged at 6-core/6-thread. All three will be based on the new 14 nm+++ "Whiskey Lake" silicon, which is yet another refinement of "Skylake", so one can't expect per-core IPC improvements.

Leading the pack is the Core i9-9900K. This chip has 8 cores, with Hyper-Threading enabling 16 threads. It features the full 16 MB of shared L3 cache available on the silicon. It also has some stellar clock speeds: 3.60 GHz nominal, with a 5.00 GHz maximum Turbo Boost. You get 5.00 GHz with 1 to 2 cores active, 4.80 GHz with 4 cores, and 4.70 GHz with 6 to 8 cores. Interestingly, the TDP of this chip remains unchanged from its predecessor, at 95 W.

Next up is the Core i7-9700K, which apparently succeeds the i7-8700K. It is an 8-core/8-thread chip (it lacks Hyper-Threading) clocked at 3.60 GHz, but its Turbo Boost states are a touch lower than those of the i9-9900K: 4.90 GHz single-core, 4.80 GHz 2-core, 4.70 GHz 4-core, and 4.60 GHz across 6 to 8 cores. The L3 cache is cut back to 1.5 MB per core, a scheme reminiscent of previous-generation Core i5 chips, as opposed to the 2 MB per core of the i9-9900K. You only get 12 MB of shared L3 cache.
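The per-core-count Turbo Boost bins listed above can be captured as a small lookup table. This is only an illustrative sketch: the names `TURBO_BINS_GHZ` and `max_turbo_ghz` are hypothetical, the values are the ones reported here (not confirmed by Intel), and active-core counts the report does not list (3 or 5) deliberately return `None` instead of a guessed value.

```python
from typing import Dict, Optional, Tuple

# Reported maximum Turbo Boost (GHz) per number of active cores.
# Keys are inclusive (low, high) ranges of active cores; counts the
# report does not list (3 or 5 active cores) are intentionally absent.
TURBO_BINS_GHZ: Dict[str, Dict[Tuple[int, int], float]] = {
    "i9-9900K": {(1, 2): 5.0, (4, 4): 4.8, (6, 8): 4.7},
    "i7-9700K": {(1, 1): 4.9, (2, 2): 4.8, (4, 4): 4.7, (6, 8): 4.6},
}


def max_turbo_ghz(sku: str, active_cores: int) -> Optional[float]:
    """Return the reported turbo clock for this many active cores, or None if unlisted."""
    for (low, high), ghz in TURBO_BINS_GHZ[sku].items():
        if low <= active_cores <= high:
            return ghz
    return None


if __name__ == "__main__":
    for cores in range(1, 9):
        print(cores, max_turbo_ghz("i9-9900K", cores), max_turbo_ghz("i7-9700K", cores))
```

With all 8 cores loaded, the reported gap between the two chips is 100 MHz (4.70 vs. 4.60 GHz).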

Lastly, there's the Core i5-9600K. Very little has changed from the current 8th generation Core i5 parts: it is still a 6-core/6-thread part. Its nominal clock is the highest of the lot, at 3.70 GHz. You get a 4.60 GHz 1-core boost, 4.50 GHz 2-core boost, 4.40 GHz 4-core boost, and 4.30 GHz all-core. The L3 cache is still 9 MB.
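The L3 figures above follow a simple per-core rule: 2 MB per core on the i9-9900K and 1.5 MB per core on the i7-9700K and i5-9600K. A minimal sketch checking that arithmetic from the reported core/thread counts and cache sizes (the `SPECS` dictionary and `l3_per_core_mb` helper are hypothetical names for illustration):

```python
# Reported core/thread counts and shared L3 sizes (MB) for the three parts.
SPECS = {
    "i9-9900K": {"cores": 8, "threads": 16, "l3_mb": 16},
    "i7-9700K": {"cores": 8, "threads": 8, "l3_mb": 12},
    "i5-9600K": {"cores": 6, "threads": 6, "l3_mb": 9},
}


def l3_per_core_mb(sku: str) -> float:
    """Nominal per-core slice of the shared L3 (total L3 divided by core count)."""
    spec = SPECS[sku]
    return spec["l3_mb"] / spec["cores"]


for sku, spec in SPECS.items():
    smt = "with HT" if spec["threads"] > spec["cores"] else "no HT"
    print(f"{sku}: {spec['cores']}C/{spec['threads']}T ({smt}), "
          f"{l3_per_core_mb(sku):.1f} MB L3 per core")
```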

All three chips are backward-compatible with existing 300-series chipset motherboards via BIOS updates. Intel is expected to launch them toward the end of Q3 2018.

Source:

Coolaler

121 Comments on Top Three Intel 9th Generation Core Parts Detailed

Secondly, your phrasing makes it sound like you're saying Zen was built on Bulldozer. Zen was a clean-slate design, with no shared architecture with Bulldozer. Which, again, underscores how you've misunderstood/misremembered the whole "low-hanging fruit" thing. You don't get a 55% IPC improvement and a ~100% efficiency improvement by fixing "low-hanging fruit" in a design you've already iterated on multiple times.

Third, Ryzen's IPC is around 10% lower on average: more in a few benchmarks (particularly gaming), less in others, and higher in some (like CB15 and most rendering tasks). A 15% increase (on average) would therefore make it ... wait for it ... faster than Skylake, and as such also faster than KBL, CFL and whateverLake Refresh 8-core. I really don't think this is going to happen, particularly in gaming, but I see no reason to think AMD won't close the IPC gap somewhat with Zen 2. Zen is facing its first major update. The upcoming 8-core Intel chip is (likely) not an architecture update at all, and whatever comes after it will be the 5th revision of the architecture. In other words: AMD will likely have a far easier time finding places to improve performance, as Intel has been at this for years.

Remember, stock coolers aren't for OCing. And while I personally wouldn't run a 2700X on the stock cooler, at least it doesn't thermal throttle, unlike the "65W" i7-8700 with its stock cooler. Also, for those of us with custom water loops (or anyone with a faulty cooler, or doing an "everything is working" test mount of a new build), stock coolers are very useful for troubleshooting and various pre-build tests. I agree that it's a shame that thousands of coolers get thrown out, but on the other hand, the savings would be negligible, and the coolers have utility for some of us. There's no way AMD is paying $20 for those coolers.
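The IPC argument in the "Third" point above is simple compounding. Taking the commenter's figures at face value (Ryzen at roughly 90% of Skylake's IPC on average, and a hypothetical 15% average uplift for Zen 2; neither is a measured result):

$$
\text{IPC}_{\text{Zen 2}} \approx 0.90 \times 1.15 \times \text{IPC}_{\text{Skylake}} = 1.035 \times \text{IPC}_{\text{Skylake}}
$$

Under those assumptions the result would land roughly 3.5% ahead of Skylake, which is why a 15% gain would be enough to overtake it.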

And just FYI, more cache does not always equal faster operation.

Who needs 10Gb Ethernet anyway, outside of data centers? Do some home users enjoy moving massive files around between RAM disks on a regular basis? 1Gb Ethernet is still fine. Now 100Mb Ethernet, or worse yet, Wireless-G :fear: is worth complaining about being slow... Unless you're doing something unusual, like mirroring 10TB of data to a backup box on a daily basis, 10Gb doesn't seem really useful for home users yet.
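To put the 10TB example in numbers, here is a rough sketch of ideal transfer times at Ethernet line rate. It assumes decimal units (1 TB = 10^12 bytes), ignores protocol overhead (the hypothetical `efficiency` parameter defaults to 1.0, meaning full line rate) and assumes the storage on both ends can keep up, so real-world times will be longer.

```python
def transfer_time_hours(size_tb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Ideal time in hours to move size_tb terabytes over a link_gbps link.

    efficiency is an assumed fraction of line rate actually achieved
    (1.0 = no protocol overhead). Uses decimal units: 1 TB = 1e12 bytes.
    """
    bits = size_tb * 1e12 * 8
    seconds = bits / (link_gbps * 1e9 * efficiency)
    return seconds / 3600


for gbps in (0.1, 1, 10):
    print(f"{gbps:>4} Gb/s: {transfer_time_hours(10, gbps):6.1f} h for 10 TB")
```

Even in this ideal case, a full 10TB mirror takes about 22 hours at 1 Gb/s (barely fitting in a day) versus a bit over 2 hours at 10 Gb/s.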

i8 9800kht - 8/16, 95W

i8 9800ht - 8/16, 95W

i8 9800k - 8/8, 95W

i8 9800 - 8/8, 65W

i7 9700kht - 6/12, 95W

i7 9700ht - 6/12, 95W

i7 9700k - 6/6, 95W

i7 9700 - 6/6, 65W

So the "k" would still mark the unlocked parts, and "ht" would indicate that Hyper-Threading is included. I myself prefer CPUs with Hyper-Threading off: HT adds 40% more heat for 25% more performance.
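Taking the commenter's own figures at face value (+25% performance for +40% heat with Hyper-Threading enabled; these are the commenter's numbers, not measurements), performance per unit of heat with HT on works out to:

$$
\frac{1 + 0.25}{1 + 0.40} = \frac{1.25}{1.40} \approx 0.89
$$

That is roughly 11% less performance per unit of heat than with HT off, which is the trade-off behind that preference.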

I guess Intel does not like even numbers: i3, i5, i7, i9. So we might never see i2, i4, i6, i8 or i10, hehe. Maybe iX?

And yes, more cache does not always equal faster operation, and neither does having more than two cores. Do you still buy a dual-core over an 8-core because of that?

I kinda veered off a little there from what I was trying to get at. In short, my point is that just because lots of PCIe lanes are not really necessary for most people doesn't mean they shouldn't be available. AMD has proven it can be done without the kind of huge price increase we have seen from Intel. Intel was getting too comfortable completely dominating the market and kept coming up with more ways to increase profits further by deliberately cutting out this and that and only making it available on more expensive products.

Still, I would love the option of a >GbE connection on my gaming/photo editing rig too. Hopefully an AM4 ITX board shows up in the next couple of years with 10GbE (or at least 5GbE) built in. For now, I'll have to make do with some sort of local SSD caching scheme for photos I'm working on (with everything else on the NAS), which isn't exactly cheap either. I'm currently working through the ~70GB of photos I came back with from travelling this summer (only ~1700 24MP photos, but uncompressed RAW files are not small), and it's really putting the hurt on my system SSD. An extra NVMe slot or 10GbE would be very nice to have, and I have zero need for an HEDT CPU (in fact, that would be quite detrimental to what I use the PC for).
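The ~70GB figure is consistent with uncompressed RAW files. A back-of-the-envelope check, assuming 14 bits per pixel (a common raw bit depth, but an assumption here since the camera isn't named) and ignoring metadata and embedded previews; the helper name `raw_size_mb` is hypothetical:

```python
def raw_size_mb(megapixels: float, bits_per_pixel: int) -> float:
    """Approximate uncompressed raw size in MB (1 MB = 1e6 bytes), sensor data only."""
    return megapixels * 1e6 * bits_per_pixel / 8 / 1e6


estimated = raw_size_mb(24, 14)  # 24MP sensor, assumed 14-bit raw
observed = 70_000 / 1700         # ~70GB spread over ~1700 photos, in MB

print(f"estimated {estimated:.1f} MB/photo, observed ~{observed:.1f} MB/photo")
```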

Also: mainstream PCs have had ~16 PCIe lanes for quite a few years. AMD added 4. Intel has a bunch more through its chipset, which is both good (plenty of potential devices connected) and bad (bottlenecked when using, for example, an SSD and a 10GbE NIC at the same time). On the other hand, NVMe storage has massively increased the potential uses for PCIe in relatively mainstream PCs (especially for those who can't afford large SSDs and instead add more drives as their storage needs grow). We're getting to the point where a lot of people might be considering a second NVMe drive, especially now that prices are coming down. Is asking for a bit more really too much, even for relatively inexpensive mainstream components? Intel widening the DMI link between CPU and chipset from x4 to x8 shouldn't be that challenging or expensive, no? Or just adding a teeny-tiny microcode update to allow for finer-grained lane bifurcation? I'd gladly go for splitting the CPU's x16 slot into x8+x4+x4 (GPU, NIC, SSD) or similar (maybe an x8 GPU + an x8 dual-SSD riser card?).

Photos are pretty much the definition of non-throwaway data ;) Even though Lightroom stores edits separately from the base files in a catalog file, I'd argue that the amount of work put into such a catalog makes it very much not throwaway either, even if the photos are safely stored elsewhere.
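The chipset bottleneck mentioned in the PCIe comment above (an SSD and a 10GbE NIC sharing the CPU-chipset link) can be roughed out with PCIe 3.0 numbers: 8 GT/s per lane with 128b/130b encoding, or about 0.985 GB/s per lane per direction, with DMI 3.0 behaving roughly like a PCIe 3.0 x4 link. The device loads below (about 3.5 GB/s for a fast NVMe SSD, 1.25 GB/s for 10GbE at line rate) are illustrative assumptions, as are the function names.

```python
# PCIe 3.0: 8 GT/s per lane, 128b/130b encoding -> usable GB/s per lane, per direction.
PCIE3_GB_PER_LANE = 8 * (128 / 130) / 8  # ~0.985 GB/s


def link_bandwidth_gb(lanes: int) -> float:
    """Approximate one-direction bandwidth of a PCIe 3.0-class link in GB/s."""
    return lanes * PCIE3_GB_PER_LANE


def is_oversubscribed(lanes: int, device_loads_gb: list) -> bool:
    """True if the summed peak device traffic exceeds what the link can carry."""
    return sum(device_loads_gb) > link_bandwidth_gb(lanes)


# Assumed peak loads in GB/s: a fast NVMe SSD and a 10GbE NIC at line rate.
loads = [3.5, 10 / 8]

for lanes in (4, 8):
    print(f"x{lanes}: {link_bandwidth_gb(lanes):.2f} GB/s, "
          f"oversubscribed={is_oversubscribed(lanes, loads)}")
```

Under these assumptions an x4 link tops out around 3.9 GB/s while the two devices can ask for about 4.75 GB/s, whereas an x8 link would leave headroom. Both devices rarely peak at once, so this is a worst case.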

As for working off an HDD or HDD RAID, response times (and, for non-RAID setups, transfer speeds) make that barely better than working over a GbE link. The local HDD comes out worse still if the data coming over Ethernet is served from an SSD, as response times will be better over the network even if transfer speeds are roughly the same. As I said above, I'll probably end up getting a bigger SSD for local "caching" of stuff I'm working on, but I'll have to look into ways of at least somewhat automating the caching, as transferring files back and forth over the network manually is a chore. Not to mention avoiding duplicate versions of files, making sure edits are synced across devices, and all that jazz.
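One simple way to semi-automate that kind of local caching is a one-way sync that copies files from the NAS share to the local SSD only when they are missing locally or newer on the NAS. This is a minimal sketch using Python's standard `pathlib` and `shutil`; the function name `sync_newer` and the modification-time rule are assumptions, and it does not address the harder parts mentioned (syncing edits back, avoiding duplicate versions).

```python
import shutil
from pathlib import Path
from typing import List


def sync_newer(src: Path, dst: Path, pattern: str = "*") -> List[Path]:
    """Copy files matching pattern from src to dst if missing or newer in src.

    Returns the destination paths that were (re)copied. shutil.copy2
    preserves modification times, so an unchanged file is skipped on
    the next run.
    """
    copied = []
    for source_file in src.rglob(pattern):
        if not source_file.is_file():
            continue  # rglob also yields directories
        target = dst / source_file.relative_to(src)
        if not target.exists() or source_file.stat().st_mtime > target.stat().st_mtime:
            target.parent.mkdir(parents=True, exist_ok=True)
            shutil.copy2(source_file, target)
            copied.append(target)
    return copied
```

Usage would look like `sync_newer(Path("/mnt/nas/photos/current"), Path("/home/user/photo-cache"))`, where both paths are placeholders for wherever the NAS share is mounted and the local cache lives.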

Personally, I like to specialize PC builds somewhat based on their use. I don't see any reason for my desktop PC to have large, noisy and hot HDDs; it really doesn't need multiple TB of storage, given that it's mostly used for office work and gaming (with frequent if not constant photo work; it's a hobby, after all). It's built to balance size, noise and power, and HDDs don't fit well into that balance. Conversely, we need enough backup storage (given that we're both hobby photographers and my GF does film production) that a NAS is a must anyway, which would make storing everything locally quite redundant. The NAS doubles as an HTPC for now, although I'm planning on separating that out so that the NAS doesn't have to live next to the TV. The NAS is also configured for power efficiency and 24/7 operation, and set up for remote access when we're travelling, which would be quite silly for my ~90W-at-idle water-cooled desktop (not to mention the Threadripper workstation :eek:). A pump failure or coolant leak when I'm not coming home for two weeks is not something I want to experience.

This type of thinking (and these hobbies/this work, I suppose) is how you end up with 5-6 computers in a 2-person household, btw :D

As for noisy and hot drives, I know I'm picky, but I also have a bad track record with HDDs in my main systems. Luckily my NAS is both relatively quiet and stable, but I've had far too many failed HDDs and clicky, whiny ones (not to mention ones that cause vibration noises no matter the amount of rubber mounting hardware used) to keep them around in my main rig. Since my PC is around 50cm from my head at all times (off the floor to keep dust out, on a shelving unit next to my desk), silence is crucial. Currently, when not loading the GPU I can barely tell that it's on at all. That's how I want it. Any HDD would be audible above this.