Thursday, September 27th 2018

NVIDIA Fixes RTX 2080 Ti & RTX 2080 Power Consumption. Tested. Better, But not Good Enough

While conducting our first reviews for NVIDIA's new GeForce RTX 2080 and RTX 2080 Ti we noticed surprisingly high non-gaming power consumption from NVIDIA's latest flagship cards. Back then, we reached out to NVIDIA who confirmed that this is a known issue which will be fixed in an upcoming driver.

Today the company released version 411.70 of their GeForce graphics driver, which, besides adding GameReady support for new titles, includes the promised fix for RTX 2080 & RTX 2080 Ti.

We gave this new version a quick spin, using our standard graphics card power consumption testing methodology, to check how things have been improved.

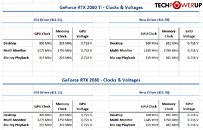

As you can see, single-monitor idle power consumption is much better now, bringing it to acceptable levels. Even though the numbers are not as low as Pascal, the improvement is great, reaching idle values similar to AMD's Vega. Blu-ray power is improved a little bit. Multi-monitor power consumption, which was really terrible, hasn't seen any improvements at all. This could turn into a deal breaker for many semi-professional users looking at using Turing not just for gaming, but also for productivity with multiple monitors. An extra power draw of more than 40 W over Pascal will quickly add up to real dollars for PCs that run all day, even though they're not used for gaming most of the time.

We also tested gaming power consumption and Furmark, for completeness. Nothing to report here. It's still the most power efficient architecture on the planet (for gaming power).

The table above shows monitored clocks and voltages for the non-gaming power states, and it looks like NVIDIA did several things: First, the memory frequency in single-monitor idle and Blu-ray has been reduced by 50%, which definitely helps with power. Second, for the RTX 2080, the idle voltages have also been lowered slightly, to bring them in line with the idle voltages of the RTX 2080 Ti. I'm sure there are additional under-the-hood improvements to power management, internal ones, that are not visible to any monitoring.
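To put the multi-monitor gap in perspective, here is a rough back-of-the-envelope sketch of what an extra 40 W costs over a year. Only the 40 W figure comes from the measurements above; the function name, the 24 hours per day of uptime and the price of 0.15 per kWh are illustrative assumptions, so plug in your own usage and local electricity rate.

```python
def extra_annual_cost(extra_watts, hours_per_day, price_per_kwh, days_per_year=365):
    """Yearly cost of drawing `extra_watts` more power than a reference card."""
    # Convert watts to kilowatts, then multiply by total hours to get kWh.
    extra_kwh = extra_watts / 1000 * hours_per_day * days_per_year
    return extra_kwh * price_per_kwh


# 40 W is the gap quoted in the article.
# 24 h/day and 0.15 per kWh are assumptions, not measured values.
cost = extra_annual_cost(extra_watts=40, hours_per_day=24, price_per_kwh=0.15)
print(f"Extra cost per year: {cost:.2f}")
```

Under these assumptions the extra draw works out to roughly 350 kWh per year. A machine that is only on for an eight-hour workday would see about a third of that.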

Let's just hope that multi-monitor idle power gets addressed soon, too.

53 Comments on NVIDIA Fixes RTX 2080 Ti & RTX 2080 Power Consumption. Tested. Better, But not Good Enough

@W1zzard Did you try testing the power consumption using different screen resolutions? I think the cards will show differences there and I suggest using 2K and 4K video modes since they're the most common.

the 10 series have a widely-documented problem with idle power consumption (which you guys being "journalists" should already know) which is popularly believed to stem from mixed refresh rates (example one or more 60hz monitor and one or more higher than that such as 144hz) causing the card to not actually idle when it should be, resulting in power draw right around ... guess what ... 40 watts

however, contrary to that popular belief, that problem isnt directly from the mixed refresh rates, but instead from the mix of which ports are used on the card, with displayport in particular being the culprit. if you only use hdmi and/or dvi then the card will actually idle ... but the moment you use displayport in a multi-monitor setup because youre "supposed" to use displayport for higher refresh rates, it wont. dont believe me? saying to yourself "yeah i could totally prove you wrong if i had the hardware tools readily available for measuring off-the-wall-power but im too lazy to do that rn"? well the good news is you dont need hardware tools, all you need is afterburner or precision, whichever tool you prefer that can show the current clocks (to see if the cards actually in an idle state or not). 400ish core clock? idle. 1000+ core clock? not idle. comprende?

plug your multiple monitors into hdmi and/or dvi and NOT displayport and watch what happens to the clockspeed in afterburner/precision WHAT THE FUCK THE CARD IS ACTUALLY IDLING NOW ..... or if you only have 1 hdmi and no dvi because you have an FE, then plug the higher refresh rate monitor into hdmi and the lower refresh one(s) into displayport WHAT THE FUCK THE CARD IS ACTUALLY IDLING NOW

youre welcome

oh and before you say "hurr durr my higher refresh monitor wont work on hdmi because its not 2.0" ..... how sure are you that its not 2.0? do it anyway, go into NCP, and see what's there. wait ... do you mean ... that people on the internet (like nvidia and OEMS) would go on the internet and just tell lies ...?

youre welcome, again

Pascal was a bit of an outlier. Due to lack of competition, nVidia simply tweaked Maxwell and, along with a die shrink, both increased performance and efficiency, and the power draw was superb. Now, with Turing, we have really big dies thanks to all the RT stuff (big die means inefficient) and more shaders/CUDA cores/whatever they're called now.

Next time competition heats up, especially in the high performance segment, nobody will give a flying whale turd about power draw and focus on getting the best performance, because that's what wins sales. While we like efficiency, almost nobody will buy a lower performing product because it's more efficient. We need FPS!

the original point was: "drew WAY more power (and ran way hotter)", you deflected it to make it temperature limit, while it was about gaming temps and power all the time. Also, you can keep arguing that one can talk about temps and noise as separate issues, but not with me anymore. If one card is running 83 degrees at 50% fan while the other has 85 degrees at 90% then we have a clear temperature winner here. At 90% fan the gtx 1080 fe stayed at 70 degrees with 2063/11000 OC IIRC, I had it for a couple of months only.

This rather pointedly dismisses your outlandish "90% fan speed" statement, and again reinforces my statement that the cards have a maximum thermal limit that is a mere 2 degrees above the GTX 1080. Not dependent on fan speed, high or low.

While 28nm > 16nm is a real node shrink, 16nm > 12nm actually is not. 12nm is more optimized (mainly power) but it is all basically the same process.

I have two 1440p monitors (and a 4K TV that is connected half the time) and 2080 so far does not go below 50W even with the latest 411.70 drivers. It is not the first time Nvidia has fucked up the multi-monitor idle modes and will not be the last. Sounds like something they should be able to fix in the drivers though.

Interesting. I was seeing that exact problem with 1080Ti for a while but it was resolved at some point in drivers. 2080 on the other hand seems to still run into the same issue.

@W1zzard, what monitors did you have in multi-monitor testing and which ports? :)

Also, have you re-tested the Vega64 or are you using older results? About a month and couple drivers after release my Vega 64 started to idle at some incredibly low frequency like 32 MHz with 1-2 monitors and reported single-digit power consumption at that.

Cards do have a thermal limit but that is usually at 100-105C.

83, 85 or 90 are the usual suspects for maximum temperature you see because that is where the fan controller is configured to.

I'm still trying to decipher what this claimed bug is from your rant... are you saying there is an issue if using monitors with different refresh rates AND one is plugged into DP? Or is it only if more than one monitor is used, and one of them is plugged into a DP? The only reference to this I can find online is from 2016, can you maybe give me some recent links so I can do further research?

Not @no_ but I guess I can answer that - Different refresh rates and one plugged into DP (which often enough is a requirement for high refresh rate).

That power bug link you found is related but as far as I remember it was not the exact bug as after the fix for that there were still problems.

I honestly havent found a good source for this either. It only occasionally comes up in a conversation on different topics, mainly using mixed refresh rate monitors, high refresh rate monitors, sometimes driver updates, power consumption/tweaking threads etc. For what it's worth, I have not seen this in a long time with Nvidia 10-series GPUs. It used to be a problem but not any more.

These power numbers are not really that bad for desktop usage anyway. Where it really matters is the mobile space, and I kind of doubt we will see RTX cards in laptops anytime soon. Maybe some TU106 variant will make it, but Nvidia will have the dilemma of how to market that. Pascal was all about equalizing naming between desktop and laptop parts, so will they name RTX 2070 as their highest mobile card or go back to the laptop variant being a tier lower than the desktop variant?

1. Card A consumes 20 units of power and has 20 units of performance. Card B consumes 20 units of power and has 35 units of performance.

2. Card A consumes 20 units of power and has 20 units of performance. Card B consumes 12 units of power and has 20 units of performance.

In which situation and for which card is power consumption a problem?

No, it is not. You can do a minor undervolt from stock settings that won't help much with anything. All the "Undervolt" articles are about overclocking and include raising the power limit to 150%.

Undervolting is a real thing as the GPU is (arguably) overvolted but it does not really do much at stock settings. You get about 20-30MHz from undervolting leaving everything else the same.

Undervolt is definitely a requirement for overclocking for Vega as OC potential without undervolting is very limited. Even at 150% power limit you will not see considerable boost and probably not even spec boost clock without undervolting first. This does not reduce the power consumption either.

C'mon people, this trend to defend the "underdog" at all costs is getting pretty ridiculous at this point.