Monday, October 8th 2018

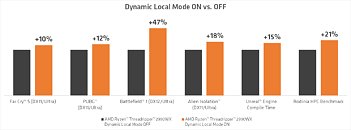

AMD Introduces Dynamic Local Mode for Threadripper: up to 47% Performance Gain

AMD has published a blog post describing an upcoming feature for its Threadripper processors called "Dynamic Local Mode", which should significantly improve gaming performance on AMD's latest flagship CPUs. Threadripper uses four dies in a multi-chip package, of which only two have a direct path to the memory modules. The other two dies have to go through Infinity Fabric for all their memory accesses, which adds significant latency. Many compute-heavy applications can keep their workloads in the CPU cache or need very little memory access, so they are not affected. Other applications, especially games, spread their workload over multiple cores, some of which end up with higher memory latency than expected, resulting in suboptimal performance.

The concept of multiple processors having different memory access paths is called NUMA (Non-Uniform Memory Access). While it is technically possible for software to detect the NUMA configuration and pin each thread to the ideal processor core, most applications are not NUMA-aware, and adoption is very slow, probably because few systems use such a design. On Threadripper, Ryzen Master lets users switch between "Local Memory Access" mode and "Distributed Memory Access" mode. The latter is the default because it gives the highest performance in compute applications. Local Mode, on the other hand, is better suited to games, but switching between the modes requires a reboot, which is very inconvenient for users.
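To make "detect the NUMA configuration and pin each thread" concrete, here is a minimal sketch of what a NUMA-aware program could do. It assumes Linux, which is not something the article specifies: the kernel lists each node's CPUs under `/sys/devices/system/node/nodeN/cpulist`, and Python's `os.sched_setaffinity` restricts a process to a set of CPUs. The helper names `parse_cpulist`, `numa_nodes` and `pin_to_node` are hypothetical, and the sketch simply finds no nodes on systems without that sysfs directory.

```python
import glob
import os
import re


def parse_cpulist(text):
    """Expand a kernel cpulist string such as "0-3,8-11" into a sorted list of CPU ids."""
    cpus = set()
    for part in text.strip().split(","):
        if not part:
            continue  # memory-only or CPU-less nodes have an empty cpulist
        if "-" in part:
            start, end = part.split("-")
            cpus.update(range(int(start), int(end) + 1))
        else:
            cpus.add(int(part))
    return sorted(cpus)


def numa_nodes():
    """Map each NUMA node id to its CPU ids (Linux sysfs; empty dict if unavailable)."""
    nodes = {}
    for path in glob.glob("/sys/devices/system/node/node[0-9]*/cpulist"):
        node_id = int(re.search(r"node(\d+)/cpulist$", path).group(1))
        with open(path) as f:
            nodes[node_id] = parse_cpulist(f.read())
    return nodes


def pin_to_node(node_id):
    """Hypothetical helper: restrict the calling process (pid 0) to one node's CPUs."""
    cpus = numa_nodes().get(node_id)
    if cpus:
        os.sched_setaffinity(0, cpus)  # Linux-only call
    return cpus


if __name__ == "__main__":
    # List the detected topology without changing any affinity.
    for node, cpus in sorted(numa_nodes().items()):
        print(f"node {node}: {len(cpus)} CPUs")
```

A program would call `pin_to_node` once per worker thread or process with the node that owns its data. This per-application effort is exactly what most software skips.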

AMD's new "Dynamic Local Mode" seeks to abolish that requirement by introducing a background process that continually monitors the CPU usage of all running applications and pushes the busiest ones onto the cores with direct memory access. It does this by adjusting each application's process affinity mask, which determines which processors the application is allowed to be scheduled on. Applications that need very little CPU time are in turn pushed onto the cores without direct memory access, because their execution speed matters less. The update will be available in Ryzen Master starting October 29 and will be enabled automatically unless the user chooses to disable it. AMD also plans to make the feature available to more users by including Dynamic Local Mode as a default package in the AMD Chipset Drivers.
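AMD has not published its algorithm, so the following is only a hypothetical sketch of the policy as described. Given per-process CPU usage samples, processes above a threshold get an affinity mask covering only the cores on the memory-attached dies, and the rest are confined to the other cores. The 10% threshold, the core numbering and the function names are assumptions for illustration. A real tool would also resample usage periodically and apply each mask through an OS call, for example `SetProcessAffinityMask` on Windows.

```python
def cores_to_mask(cores):
    """Build an affinity bitmask: bit n set means the process may run on logical CPU n."""
    mask = 0
    for core in cores:
        mask |= 1 << core
    return mask


def plan_affinity(cpu_usage, local_cores, remote_cores, busy_threshold=10.0):
    """Return {pid: affinity_mask} following the policy described in the article.

    cpu_usage      -- {pid: recent CPU usage in percent}, sampled elsewhere
    local_cores    -- logical CPUs on dies with directly attached memory
    remote_cores   -- logical CPUs on dies that reach memory over Infinity Fabric
    busy_threshold -- hypothetical cut-off separating busy from light processes
    """
    local_mask = cores_to_mask(local_cores)
    remote_mask = cores_to_mask(remote_cores)
    plan = {}
    for pid, usage in cpu_usage.items():
        # Busy processes go to the memory-attached dies; light ones to the others.
        plan[pid] = local_mask if usage >= busy_threshold else remote_mask
    return plan


if __name__ == "__main__":
    # Illustrative numbering only: CPUs 0-15 local, 16-31 remote.
    usage = {1234: 85.0, 5678: 2.5}  # e.g. a game and a background updater
    plan = plan_affinity(usage, range(0, 16), range(16, 32))
    print(hex(plan[1234]))  # 0xffff
    print(hex(plan[5678]))  # 0xffff0000
```

The function only computes masks and does not apply them. Keeping the policy separate from the OS call makes the policy easy to test and to port between operating systems.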

Source:

AMD Blog Post

86 Comments on AMD Introduces Dynamic Local Mode for Threadripper: up to 47% Performance Gain

Interestingly enough, as diligently as HardOCP tests Intel's IPC, they seem to have missed Zen completely. Still, I could find:

Amd/comments/8f0pxr

www.sweclockers.com/test/24701-intel-core-i9-7980xe-skylake-x/19#content

and if you don't mind looking at slightly older hardware:

forums.anandtech.com/threads/ryzen-strictly-technical.2500572/#post-38770109

I don't think this is supposed to lower latency, just to move the threads that need lower latency to the cores that are connected to RAM.

I would still like to see some BIOS improvements; the downcore modes for the 2990WX are silly on my ASRock board. I just want to tell it to run in two-module mode and basically boot like a 2950X, but that isn't an option unless I use Ryzen Master, which kills my overclock, and then I have to overclock through Windows, which isn't the same for me.

AMD is at fault for outfitting 2970WX/2990WX with two "crippled" dies. When AMD needs to make a program to manipulate the running threads in real time, then something is not right.

While the 2950X (16-core) is an okay product, the 2970WX/2990WX only scale well for certain workloads, more server than workstation ones. AMD will have to do better with the Zen 2 Threadrippers.

Yet there is no such problem on Linux. So you are telling me that open software foundations are able to fix the problem, yet a multi-billion-dollar company cannot?

I'll tell you what is flawed: Intel processors, due to Spectre and Meltdown, for which BIOS updates and winblows patches were released that crippled their performance even further. Yet Microsoft tried to force those patches onto AMD rigs, but won't fix its scheduler flaws?

Go back into your cave and hibernate.

It's an asymmetric design in a world that's not used to that. To that end, AMD could have probably done a better job and ensured everything was worked out before launch. That aside, if you understand the limitations and you really need all those cores*, these CPUs can deliver.

*admittedly a sliver of the market as a whole, but HEDT never catered to anything but

Go back to your Intel threads

Is the hit that big?

No, not at all. Where do you get this from? Two dies on 2970WX/2990WX have to go through the Infinity Fabric to access memory, which causes significant latency. Many workloads are latency sensitive, and this only gets worse when using multiple applications at once. AMD could have made Threadripper without these limitations, but perhaps not on this socket.

I thought this was a tech forum…

As I said before, GTHO, go back to your Intel threads.

IIRC, Hardware Unboxed showed that the 2970WX will be a great product and won't slow down as much due to the four-channel memory limitation.