Sunday, December 30th 2018

NVIDIA GeForce RTX 2060 Founders Edition Pictured, Tested

Here are some of the first pictures of NVIDIA's upcoming GeForce RTX 2060 Founders Edition graphics card. As we reported earlier, there could be as many as six variants of the RTX 2060, differing in memory size and type. The Founders Edition is based on the top-spec variant with 6 GB of GDDR6 memory. The card looks similar to the RTX 2070 Founders Edition, probably because NVIDIA is reusing that card's reference-design PCB and cooling solution, minus two of the eight memory chips. The card still draws power from a single 8-pin PCIe power connector.
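Dropping two of the eight memory chips shrinks both capacity and bus width. The sketch below shows that arithmetic, assuming 8 Gb (1 GB) GDDR6 chips with a 32-bit interface each; the chip density is an assumption, not something the leak states, and `memory_config` is a hypothetical helper.

```python
# Hypothetical sketch: derive memory size and bus width from the number of
# GDDR6 packages on the board.
CHIP_CAPACITY_GB = 1      # assumption: 8 Gb (1 GB) GDDR6 devices
CHIP_BUS_WIDTH_BITS = 32  # each GDDR6 device has a 32-bit interface


def memory_config(num_chips: int) -> tuple[int, int]:
    """Return (total capacity in GB, total bus width in bits)."""
    return num_chips * CHIP_CAPACITY_GB, num_chips * CHIP_BUS_WIDTH_BITS


rtx_2070 = memory_config(8)      # eight chips on the reference PCB
rtx_2060 = memory_config(8 - 2)  # same PCB, two chip sites left empty

print(rtx_2070)  # (8, 256)
print(rtx_2060)  # (6, 192)
```

Under these assumptions, the six-chip layout gives 6 GB on a 192-bit bus.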

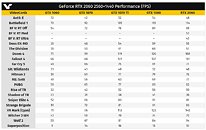

According to VideoCardz, NVIDIA could launch the RTX 2060 on the 15th of January, 2019. It could get an earlier unveiling by CEO Jen-Hsun Huang at NVIDIA's CES 2019 event, slated for January 7th. The top-spec RTX 2060 trim is based on the TU106-300 ASIC, configured with 1,920 CUDA cores, 120 TMUs, 48 ROPs, 240 tensor cores, and 30 RT cores. With an estimated FP32 compute performance of 6.5 TFLOP/s, the card is expected to perform on par with the previous-generation GTX 1070 Ti in workloads that don't use DXR. VideoCardz also posted performance numbers from NVIDIA's Reviewer's Guide that point to the same conclusion. In the guide, NVIDIA tested the RTX 2060 Founders Edition on a machine powered by a Core i9-7900X processor and 16 GB of memory. The card was tested at 1920 x 1080 and 2560 x 1440, the resolutions its target consumer segment plays at. At both resolutions, the numbers put the card within ±5% of the GTX 1070 Ti (and possibly AMD's RX Vega 56). The guide also lists an SEP of $349.99 for the RTX 2060 6 GB.
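The reported unit counts line up with a 30-SM Turing configuration, and the 6.5 TFLOP/s estimate follows from the usual peak-FP32 formula (cores × 2 FLOPs per clock for a fused multiply-add × clock speed). The sketch below assumes the standard Turing SM layout of 64 FP32 cores per SM; the clock is not given in the leak, so the code solves for the clock the estimate implies rather than asserting one. The helper names are hypothetical.

```python
# Sketch: relate the reported TU106-300 unit counts to per-SM resources and
# back out the clock implied by the 6.5 TFLOP/s estimate.
CUDA_CORES = 1920
TENSOR_CORES = 240
RT_CORES = 30
TMUS = 120

CORES_PER_SM = 64  # assumption: standard Turing SM with 64 FP32 cores
sm_count = CUDA_CORES // CORES_PER_SM  # 30 SMs

# Per-SM ratios implied by the reported totals: tensor, RT, TMU
print(TENSOR_CORES // sm_count, RT_CORES // sm_count, TMUS // sm_count)  # 8 1 4


def fp32_tflops(cores: int, clock_ghz: float) -> float:
    """Peak FP32 rate: each core retires one FMA (2 FLOPs) per clock."""
    return cores * 2 * clock_ghz / 1000


def implied_clock_ghz(cores: int, tflops: float) -> float:
    """Invert fp32_tflops to find the clock a given TFLOP/s figure implies."""
    return tflops * 1000 / (cores * 2)


print(round(implied_clock_ghz(CUDA_CORES, 6.5), 2))  # 1.69
```

In other words, the 6.5 TFLOP/s figure corresponds to roughly a 1.69 GHz clock across 1,920 cores.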

Source:

VideoCardz

234 Comments on NVIDIA GeForce RTX 2060 Founders Edition Pictured, Tested

- Full GK104 - GTX 680, GTX 770, GTX 880M and several professional cards.

- Cut-down GK104 - GTX 660, 760, 670, 680M, 860M and several professional cards

- GK106 - GTX 650 Ti Boost, 650 Ti, 660, and several mobile and pro cards.

- GK107 - GTX 640, 740, 820 and lots of mobile cards

- GK110 - GTX 780 (cut-down GK110), GTX 780 Ti and the original Titan, as well as the Titan Black, Titan Z and loads of Tesla / Quadro cards like the K6000

- GK208 - entry-level 7 series and 8 series cards, both GeForce and Quadro branded

- GK208B - entry-level 7 series and 8 series cards, both GeForce and Quadro branded

- GK210 - Revised and slightly cut-down version of the GK100/GK110. Launched as the Tesla K80

- GK20A - GPU built into the Tegra K1 SoC

I know from a trustworthy source (an NVIDIA board partner employee) that NVIDIA had no issues whatsoever with the GK100. In fact, internal testing showed what a huge leap in performance Kepler was over Fermi. This is THE REASON why NVIDIA decided to launch the mid-range GK104 chip as the GTX 680: the GK104 is 30 to 50% faster than the GF100/GF110 used in the GTX 480 and 580. Some clever people in management came up with a marketing stunt: spread Kepler over two series of cards, the 600 and 700 series, release the GK104 first, and save the full GK100 (GK110) for the later 700 series, launching it as a premium product and creating a new market segment with the 780 Ti and the original Titan. GK110 is simply the name chosen for launch, replacing the GK100 moniker, mainly in an attempt to confuse savvy consumers and the tech press. As NVIDIA naming schemes go, the GK110 should have been an entry-level chip: GK104 > GK106 > GK107, i.e. the smaller the last number, the larger the chip. The only Kepler revision is named GK2xx (see above) and only includes entry-level cards.