Thursday, January 3rd 2019

MSI GeForce RTX 2080 Ti Lightning Z PCB Pictured, Overclocked to 2450 MHz

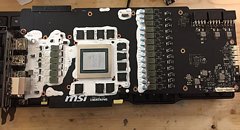

MSI is ready with a Lightning Z-branded flagship graphics card based on the GeForce RTX 2080 Ti. Although the company is rumored to launch the card at CES 2019, two samples have already made their way to overclockers "Gunslinger" and "Littleboy." There are no pictures of the assembled card yet (with its cooling solution in place), but there are plenty of its PCB. The large, spread-out PCB draws power from three 8-pin PCIe power connectors. A gargantuan 19-phase VRM conditions power for the GPU and memory. It is split between two portions of the PCB, one on either side of the GPU.

The heatsink cooling the larger portion of the VRM takes up most of the vacant space over the rear end of the PCB. In addition to some finnage, this heatsink uses a flattened heat-pipe to carry heat pulled from the area over the DrMOS (indirect contact) to the tail end of the heatsink. "Gunslinger" and "Littleboy" have each managed to overclock this card to godlike GPU clock speeds in excess of 2450 MHz, likely using exotic cooling such as liquid nitrogen.

Sources:

VideoCardz, Littleboy (HWBot), Gunslinger (HWBot)

18 Comments on MSI GeForce RTX 2080 Ti Lightning Z PCB Pictured, Overclocked to 2450 MHz

And that's the reason I don't understand why they're pushing these extreme models on the market. Since Maxwell, NVIDIA GPU overclocking has been heavily restricted, and even more so with Pascal. You can't simply use a voltage mod. Overclocking beyond a certain point (2050-2150 MHz for the Ti cards, as far as I know) is impossible without tampering with the hardware. So you'll end up modifying the PCB yourself anyway, and the VRM MSI ships with the card becomes useless.

Nowadays, a lucky golden GPU accounts for 99% of a high overclock, and the VRM is almost insignificant here. This is especially true given NVIDIA's "recommendations" on VRM design. If a card is designed merely "well," you won't have huge issues with phase temperatures. The FE can overclock better than a Strix or an AMP!

So here is the question: are they going to make an exclusive override for the Lightning that allows a software voltage mod? Otherwise it is really useless stuff: so much power, just to get stuck at 2020 MHz...

Many simple versions of the RTX 2080 Ti can already deliver a lot more than the core can use, but it's blocked.

But NVIDIA desires control over its consumers, which is why they tried to lock driver updates to a GeForce Experience account some time back. Why these OCers even bother with NVIDIA anymore is a mystery. They have AMD; why not do insane tweaking on Vega GPUs instead?

On NVIDIA GPUs, GPU Boost is so advanced that it becomes pretty difficult to surpass with a manual overclock. You may get higher clocks out of it, but that may adversely affect performance; I've seen it many times. These GPUs are delivered out of the box with minor overclocking headroom to play around with, and they all clock within a tight margin. What's not to like? You know what you get, and duds are rare. 98% of the user base wants that, which is why even AMD is moving to provide a more streamlined experience, revamping its driver GUI, etc.

Real OC'ers hard-mod anyway, so AMD or NVIDIA is irrelevant.

You're right, this was never about reducing the number of RMAs. It's easy to deny a claim. It's about control: control over the performance delivered in each price bracket. The only reason AMD doesn't lock everything down is that it's cheaper for them not to, which is why you occasionally still find unlockable shaders. But it also means the number of "muh card is bricked" or "shit, it doesn't work anymore" topics is that much higher in the red camp. And that eats away at AMD's mindshare, like it or not.

Even the X470 motherboards are overbuilt for AMD's current offerings. Maybe they were all just prepping extra early for Zen 2?

That's more about third-party pricks like ASUS than about skimping.

The VRM design is at least properly done, but it adds nothing extra if the chip's current is limited. Look at Vega 56: Gamers Nexus made it a hybrid and bypassed AMD's power limit, all the way up to 400 W. If that thing could be LN2'd, I'm pretty sure it would easily achieve 2 GHz or more. You have so much headroom to play with.

As great as this card is, it really doesn't matter.

Typically, power limits are what handicap the performance of NVIDIA's silicon, but as many reviewers have shown, it does not matter with Turing. Whether through an adjusted power target, shunt mods, or other methods, Turing hits a maximum clock speed before the power target becomes an issue, or vice versa.

This card, with Afterburner and without modifications, will perform EXACTLY the same as their Duke or Gaming X Trio.

Is the hardware better? Yes. There are three 8-pin power connections, three 60 A input filters, and an improved VRM setup across the board. This may mean less coil whine, improved thermals, and more stable overclocks. But as others have pointed out, and will continue to point out, with Turing it doesn't matter. These high-performance cards only benefit overclockers who push their silicon to the limit with subzero cooling. If you're not going to modify this card and overclock the living hell out of it, you won't see any benefit.