Monday, February 18th 2019

AMD Radeon VII Retested With Latest Drivers

Just two weeks ago, AMD released its Radeon VII flagship graphics card. It is based on the new Vega 20 GPU, the world's first graphics processor built on a 7-nanometer production process. Priced at $699, the new card offers performance roughly 20% higher than the Radeon RX Vega 64, which should bring it much closer to NVIDIA's GeForce RTX 2080. In our testing, however, we still saw a 14% performance deficit compared to the RTX 2080. For the launch-day reviews, AMD provided media outlets with a press driver dated January 22, 2019, which we used for our review.

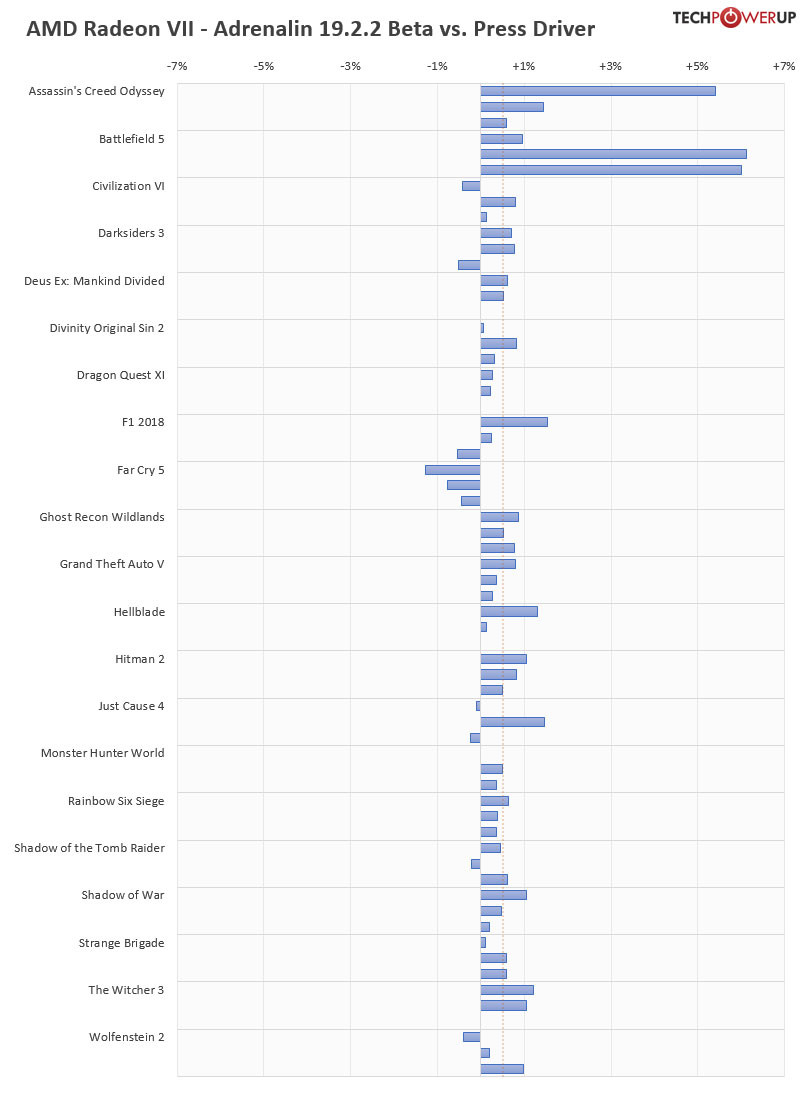

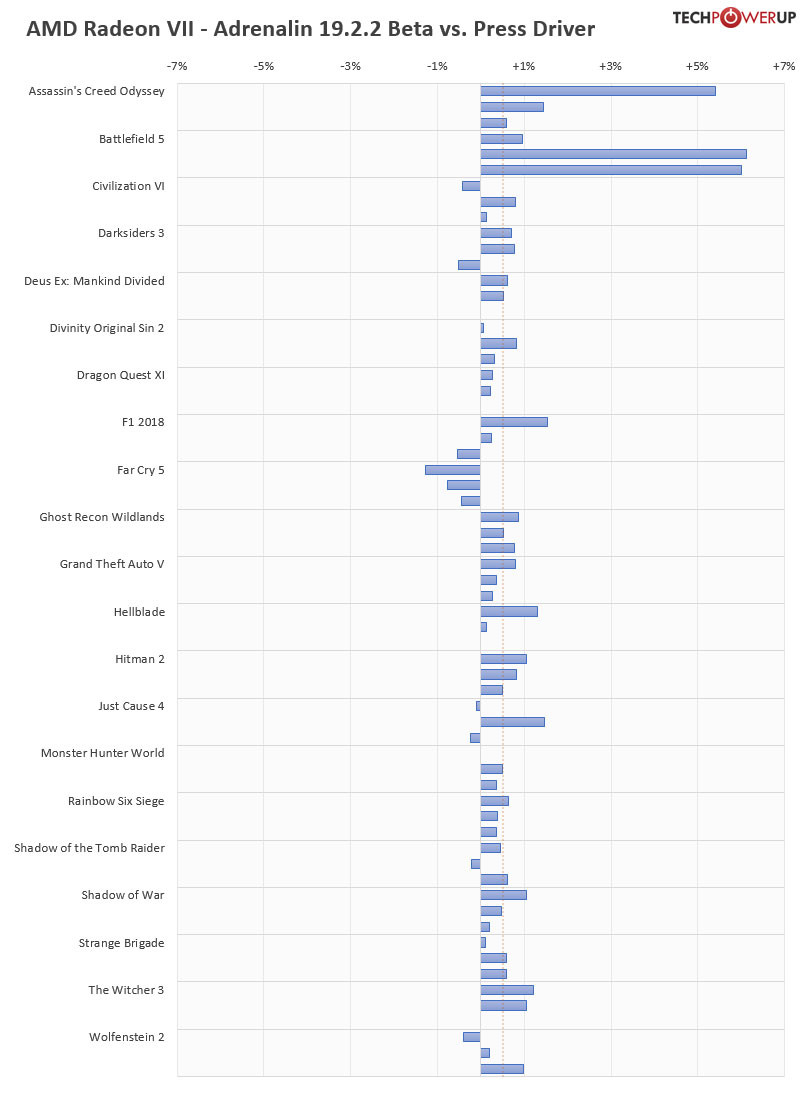

Since the first reviews went up, people in online communities have been speculating that these were early drivers and that new drivers would significantly boost the performance of Radeon VII, letting it make up lost ground against RTX 2080. There's also the mythical "fine wine" phenomenon, where Radeon GPUs gain significant performance incrementally over time. We've put these theories to the test by retesting Radeon VII with AMD's latest Adrenalin 2019 19.2.2 drivers, using our full suite of graphics card benchmarks. In the chart below, we show the performance deltas compared to our original review. Each title is tested at three resolutions: 1920x1080, 2560x1440, and 3840x2160 (in that order).

Please note that these results include the performance gained from the washer mod and thermal paste change that we had to make when reassembling the card. These changes reduced hotspot temperatures by around 10°C, allowing the card to boost a little higher. To separate the performance improvements due to the new driver from those due to the thermal changes, we first retested the card using the original press driver (with the washer mod and new TIM). The result was a +0.2% performance improvement.

Using the latest 19.2.2 drivers added another +0.45% on top of that, for a total improvement of +0.653%. Taking a closer look at the results, we can see that two specific titles have seen significant gains from the new driver version: Assassin's Creed Odyssey and Battlefield V both achieve improvements of several percent. It looks like AMD has worked some magic in those games to unlock extra performance. The remaining titles see small but statistically significant gains, suggesting that there are some "global" tweaks AMD can implement to improve performance across the board. Unsurprisingly, these gains are smaller than those from title-specific optimizations.
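To illustrate how the two stages combine, here is a minimal Python sketch. It assumes that each gain is measured relative to the previous configuration (press driver with the thermal fixes, then 19.2.2 on top of that), so the stages compound multiplicatively rather than simply adding. The helper names `relative_gain` and `combine_gains` are illustrative only, not the tooling used for these benchmarks, and the per-title FPS values in the last line are made up.

```python
def relative_gain(new_fps: float, old_fps: float) -> float:
    """Percentage change of a new result over a baseline result."""
    return (new_fps / old_fps - 1.0) * 100.0


def combine_gains(*gains_pct: float) -> float:
    """Chain successive percentage gains, each measured against the
    previous configuration, into one overall percentage gain."""
    factor = 1.0
    for gain in gains_pct:
        factor *= 1.0 + gain / 100.0
    return (factor - 1.0) * 100.0


# Stage 1: original press driver, after the washer mod and new thermal paste.
thermal_gain = 0.2
# Stage 2: Adrenalin 19.2.2, measured on top of stage 1.
driver_gain = 0.45

total_gain = combine_gains(thermal_gain, driver_gain)
print(f"Total: +{total_gain:.3f}%")  # prints "Total: +0.651%"

# Hypothetical per-title example (made-up FPS values, not benchmark data):
print(f"{relative_gain(61.0, 60.0):.2f}%")  # prints "1.67%"
```

For gains this small, compounding (+0.651%) and simple addition (+0.65%) are practically identical. The slight gap to the quoted +0.653% is presumably because the two stage results were rounded before publication.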

Looking further ahead, it seems plausible that AMD can increase the performance of Radeon VII down the road, though we doubt that enough optimizations can be found to match RTX 2080, unless a lot of developers suddenly jump on the DirectX 12 bandwagon (which seems unlikely). It's also a question of resources: AMD can't spend time and money micro-optimizing every single title out there. Instead, the company seems to be doing the right thing by investing in optimizations for big, popular titles like Battlefield V and Assassin's Creed. Given how many new titles are coming out on Unreal Engine 4, and how far AMD lags behind in those titles, I'd focus on optimizations for UE4 next.

182 Comments on AMD Radeon VII Retested With Latest Drivers

Wow, already got a -1 rating from that ignorant, delusional, braindead AMD cultist named medi01. From top to bottom: WRONG WRONG WRONG.

AC Origins GameWorks?? It wasn't even NVIDIA-sponsored; nobody sponsored that.

Battlefield 5 GameWorks?? Are you having a giggle, mate?? Do you even know what GameWorks is?? Oh my god, the ignorance and delusion in this comment is blowing my mind. BF5 only has RTX and DLSS. It still uses the Frostbite engine, which vastly favours AMD.

Let me correct you.

"Assassin's Creed Origins (NVIDIA Gameworks, 2017)" - WRONG

"Battlefield V RTX (NVIDIA Gameworks, 2018)" - WRONG

"Darksiders 3 (NVIDIA Gameworks, 2018), old game remaster, where's Titan Fall 2." - WRONG

"Dragon Quest XI (Unreal 4 DX11, large NVIDIA bias, 2018)" - WRONG

"Ghost Recon Wildlands (NVIDIA Gameworks, 2017)" - WRONG

"Hellblade: Senuas Sacrif (Unreal 4 DX11, NVIDIA Gameworks)" - WRONG

Hitman 2 - it's an AMD title, I think, not sure

"Monster Hunter World (NVIDIA Gameworks, 2018)" - not sure, but probably WRONG

"Middle-earth: Shadow of War (NVIDIA Gameworks, 2017)" - WRONG

"Prey (DX11, NVIDIA Bias, 2017 )" - WRONG

"Rainbow Six: Siege (NVIDIA Gameworks, 2015)" - WRONG

"Shadows of Tomb Raider (NVIDIA Gameworks, 2018)" - WRONG, it's only RTX, and that hasn't even been patched in yet

"Wolfenstein II (2017, NVIDIA Gameworks)" - WRONG

Most of the games here aren't even sponsored by NVIDIA and don't have any GameWorks in them. The only GameWorks game in this list is Witcher 3. Most of the games in your NVIDIA list are even AMD-sponsored titles, like Wolfenstein 2 and Prey.

Who cares? RE2 is already cracked, DMC5 will get cracked, and Division 2 will suck like Division 1 did. Not a single appealing game. I would rather take a price reduction than those games. So ultimately the 1080 Ti is a much better choice. Not to mention the 1080 Ti came out 2 years ago at the same $699 price tag. LOL. After 2 years, with a 7nm process and the same $699 price tag, Radeon 7 still can't beat the 1080 Ti. How pathetic! Where's the generational improvement, I wonder? And the RTX 2060 is a great GPU for the price, so the whole RTX lineup isn't poor.

If you say so bro

Actually, his list is completely wrong.

There is simply no way everyone will be happy. But this method ensures that all game types are covered, along with the more popular titles, and f-all to the AMD/NVIDIA sponsorship pissing match.

Then, when everything settles, you reveal brands. It'd be very interesting in terms of perceived brand loyalty.

Honestly, this whole brand loyalty thing is totally strange to me, for either camp. Neither AMD nor Nvidia is in it to make you happy; they're in the game to make money and keep the gears turning for the company. The consumer, and mostly the 'gamer', is just a target, nothing else. Ironically, BOTH camps are now releasing cards that almost explicitly tell us 'Go f*k yourself, this is what you get, like it or not', and the only reason is that the general performance level is what it is. AMD's midrange is 'fine' for most gaming, and Nvidia's stack last gen was also 'fine' for most gaming. Radeon VII is a leftover from the pro segment, and Turing is, in a very similar way, a derivative of Volta, a GPU only released for pro markets. On top of that, even the halo card isn't a full die.

We're getting scraps and leftovers and bickering about who got the least shitty ones. How about taking the stance of a critical consumer instead, towards BOTH companies? If you want to win, that's how it works. And the most powerful message any consumer could ever send is simply not buying it.

That minuscule performance gain isn't gonna give you better experience overall.

I used to own one and played many titles at 1440p, and it was the worst mistake I ever made.

There is a "fine wine" effect, but it comes mostly from bad drivers in the first months after a card's release; then, yes, performance slowly improves over time to the level it should have had in the first place.

The performance has only gone up since I got it because of drivers and optimisations. I went from 1080p to 1440p without a second thought.

My friend has a 780 Ti that constantly had stuttering issues in games... It cost him ~$400 in 2014...

All in all, the AMD card is the better investment, because they are cheaper and just get better with updates...

Both camps have their great and not-so-great cards and also offer fantastic value propositions. It's hit or miss, all the time, for both green and red.

In the end, yes, I think we can agree that the 290 versus the 780 (comparing the non-X to the Ti is not fair, neither on price nor performance nor market share - 290s and 780s sold like hotcakes and the higher models a whole lot less!) is a win for the 290 when it comes to both VRAM and overall performance, but not in terms of power/noise/heat. Also, you needed a pretty good AIB 290 or you'd have a vacuum cleaner - and those surely weren't 250 bucks.

Regardless, consistency is an issue, and the driver approaches of the two camps differ. I think it's a personal view on what is preferable: full performance at launch, or increasing performance over time, with a minor chance of gaining a few % over the competition near the end of a lifecycle. It also depends a lot on how long you tend to keep a GPU.

And yes, absolutely, a 780 Ti in 2017 and onwards ran into lots of problems; I've experienced those first hand. At the same time, I managed to sell that card for a nice sum of 280 EUR, which was exactly what I had paid for it a year earlier, and that meant a serious discount on my new GTX 1080. Longevity isn't just an advantage. Keeping a card for a long time also means that by the time you want to upgrade, its value is almost gone, while you're also slowly bleeding money over time because perf/watt is likely lower. Running ancient hardware is not by definition cheaper - that is only true if the hardware is obsolete and will never be replaced anyway. If you are continuously upgrading, keeping a GPU longer than 1 to 1.5 years (make it 3 with the current gen junk being released) is almost NEVER cheaper than replacing it with something at a similar price point and reselling your old stuff at a good price. And you get a bonus: you stay current.

I used to own one until 2017 before I replaced it with Vega 64.

At that time I had 1080p monitor @120Hz without Freesync.

For that resolution, the Fury X was not bad. The many games I played back then all ran at Ultra settings with good frame rates.

I sold it on eBay at that time for over 300€. :D

Somebody may still be using it.

AMD no longer makes gaming cards; they make compute accelerators.

This card is never going to perform efficiently under gaming loads. If you wanna play games, you buy nVidia.

They wanted to unify workstation and high-end gaming. This whole business strategy turned out to be a failure.

Everything could change if AMD focused on making purpose-built gaming chips and unify consoles and PC gaming, which would make sense for a change...

We'll see what happens with their datacenter products. IMO they'll give up and Intel takes over.

Doesn't make AMD cards lesser gaming cards. But, in comparison, it does make GCN resemble Netburst from an efficiency point of view.

your youtube drama is not welcome here

Relevance = -1000

If you want to troll, go to that place you found those screens at. Don't do it here.