Tuesday, January 19th 2021

NVIDIA Quietly Relaxes Certification Requirements for NVIDIA G-SYNC Ultimate Badge

UPDATED January 19th 2021: NVIDIA in a statement to Overclock3D had this to say on the issue:

Sources:

PC Monitor, via Videocardz

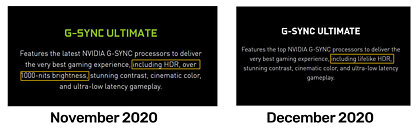

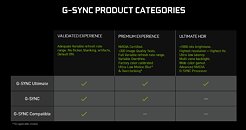

Late last year we updated G-SYNC ULTIMATE to include new display technologies such as OLED and edge-lit LCDs.

NVIDIA has silently updated the requirements for its G-SYNC Ultimate badge compared to what it initially stated. Born as a spin-off from NVIDIA's G-SYNC program (whose own requirements have also been relaxed from the original demand for a custom and expensive G-SYNC module incorporated into monitor designs), the G-SYNC Ultimate badge is supposed to denote the best of the best in the realm of PC monitors: monitors that feature NVIDIA's proprietary G-SYNC module and VESA-certified HDR 1000 panels. This is opposed to NVIDIA's current G-SYNC Compatible program (which lets monitors without the G-SYNC module, but with support for VESA's VRR standard, offer variable refresh rates) and G-SYNC program (for monitors that feature G-SYNC modules but may be lax in their HDR support).

The new, silently-edited requirements have dropped the HDR 1000 certification requirement; instead, NVIDIA now only requires "lifelike HDR" capabilities from monitors that receive the G-SYNC Ultimate badge - whatever that means. The fact of the matter is that at this year's CES, MSI's MEG381CQR and LG's 34GP950G were announced with an NVIDIA G-SYNC Ultimate badge - despite "only" featuring HDR 600 certifications from VESA.

This certainly complicates matters for users, who previously only had to check for the Ultimate badge in order to know they were getting the best of the best when it comes to gaming monitors (as per NVIDIA guidelines). Now, those users are back to perusing spec lists to find out whether a particular monitor has the characteristics they want (or maybe require). It remains to be seen whether other, previously released monitors that shipped without the G-SYNC Ultimate certification will now be retroactively certified, and if I were a monitor manufacturer, I would sure demand that for my products.

All G-SYNC Ultimate displays are powered by advanced NVIDIA G-SYNC processors to deliver a fantastic gaming experience including lifelike HDR, stunning contrast, cinematic colour and ultra-low latency gameplay. While the original G-SYNC Ultimate displays were 1000 nits with FALD, the newest displays, like OLED, deliver infinite contrast with only 600-700 nits, and advanced multi-zone edge-lit displays offer remarkable contrast with 600-700 nits. G-SYNC Ultimate was never defined by nits alone nor did it require a VESA DisplayHDR1000 certification. Regular G-SYNC displays are also powered by NVIDIA G-SYNC processors as well.

The ACER X34 S monitor was erroneously listed as G-SYNC ULTIMATE on the NVIDIA web site. It should be listed as "G-SYNC" and the web page is being corrected.

36 Comments on NVIDIA Quietly Relaxes Certification Requirements for NVIDIA G-SYNC Ultimate Badge

But HDR is mostly for content creators, most certified monitors are geared towards that.

(Plus, it's hardly a monitor at 55".)

edit: OK, I see something here but I'd have to check if those can be achieved without local dimming.

The monitor still sucks, but local dimming is the least of its worries: bulky, curved, static contrast is not even among the better ones for VA, some of the worst uniformity I have seen, will all hit you before local dimming does.

edit: okay, it's at the bottom of the page.

I understand a picture or badge can make it easier...but not when there are as many badges as you can find products...

I also like how, since v1.1, they lumped all certified monitors together, in an attempt to mask how almost 90% of them are actually crappy DisplayHDR 400.

Agree, but at the same time I don't know how you can make it more useful when you want to take into account so many aspects. Well, not exactly "many", but certainly more than just efficiency(load).