Monday, April 26th 2021

What AMD Didn't Tell Us: 21.4.1 Drivers Improve Non-Gaming Power Consumption By Up To 72%

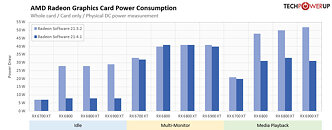

AMD's recently released Radeon Software Adrenalin 21.4.1 WHQL drivers lower non-gaming power consumption, our testing finds. AMD did not mention these reductions in the changelog of its new driver release. We ran a round of tests comparing the previous 21.3.2 drivers with 21.4.1 on Radeon RX 6000 series SKUs, namely the RX 6700 XT, RX 6800, RX 6800 XT, and RX 6900 XT. Our results show significant power-consumption improvements in certain non-gaming scenarios, such as system idle and media playback.

The Radeon RX 6700 XT shows no reduction in idle power draw, but the RX 6800, RX 6800 XT, and RX 6900 XT posted big drops in idle power consumption at 1440p, going down from roughly 25 W to 5 W (down by about 72%). Multi-monitor power draw is unchanged. Power draw during media playback is up to 30% lower on the RX 6800, RX 6800 XT, and RX 6900 XT. This is a huge improvement for anyone building a media PC, since lower power draw also means less heat and noise.

Why AMD didn't mention these improvements is anyone's guess, but a closer look at the numbers offers some hints. Even with media playback power draw dropping from roughly 50 W to 35 W, the RX 6800/6900 series cards still use more power than competing NVIDIA GeForce RTX 30-series SKUs: the RTX 3070 pulls 18 W and the RTX 3080 27 W.

We also tested the driver on the older-generation RX 5700 XT and saw no changes. The Radeon RX 6700 XT already had very decent power consumption in these states, so our theory is that AMD fixed, for the RX 6700 XT's Navi 22 GPU, certain power-consumption shortcomings that were found after the RX 6800's release. Once those fixes proved stable, they were backported to the Navi 21-based RX 6800/6900 series, too.
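The percentages quoted here follow from the usual relative-change formula: the drop in power divided by the original power. A minimal sketch, using only the rounded wattages given in this article; the helper name `percent_reduction` is hypothetical, chosen for illustration:

```python
def percent_reduction(before_w: float, after_w: float) -> float:
    """Return how much lower after_w is than before_w, as a percentage of before_w."""
    if before_w <= 0:
        raise ValueError("baseline power must be positive")
    return (before_w - after_w) / before_w * 100.0


# Media playback on the RX 6800/6900 series: roughly 50 W before, 35 W after
print(f"{percent_reduction(50, 35):.0f}%")  # prints "30%"
```

Plugging the rounded idle figures (25 W and 5 W) into the same formula yields 80% rather than 72%, so those idle wattages are best read as approximate.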

63 Comments on What AMD Didn't Tell Us: 21.4.1 Drivers Improve Non-Gaming Power Consumption By Up To 72%

Go AMD! (Normally you'd expect this to work at launch, but a year later: I'll take it!)

Why is this still happening? I use a 4-screen setup and measure no difference between 1 and 4 monitors plugged into my good old EVGA 1080 Ti (10 W), but my Gigabyte 5700 XT draws an extra 50 W at idle with the same 4-screen setup. I want to keep my power draw to a minimum when not gaming so my open-case system can be completely passively cooled at idle (I hate PC noise). While the 1080 Ti's fans never spin at idle with the case open, the 5700 XT's fans do turn on from time to time and are quite audible. AMD should have fixed this ever-persisting problem with their GPUs a long time ago. It should not be all that hard to fix, given that Nvidia nailed it 6 years ago on a much bigger die.

Simply look up power consumption in earlier reviews to verify: AMD has always used way too much power outside of 3D.

So I'm not sure what you are celebrating.

Nvidia might be better because they know most of their users are sticking with power-hungry CPUs :rolleyes:

E.g. they removed gamma/proper color configuration from Radeon Drivers over five years ago. The topic has been raised numerous times. Have they done anything? F no.

Don't overestimate their eagerness to fix anything unless it's burning under them. Oh, that's funny, I'm actually ignoring you.

Oh, and NVIDIA and Intel are no different unfortunately.

I used to edit the registry and XML files to change it, but recently they get overwritten on reboot.

For example, in theory a 2160p monitor should need the same clocks as four 1080p monitors; in reality, there is obviously some overhead in running more monitors.
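The theoretical equivalence rests on pixel count. Assuming the standard 3840×2160 (2160p) and 1920×1080 (1080p) resolutions, one 2160p screen carries exactly as many pixels as four 1080p screens:

$$3840 \times 2160 = 8{,}294{,}400 = 4 \times (1920 \times 1080)$$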

I have two monitors: 1440p@165Hz and 1440p@60Hz. GPU drivers do not really seem to settle on nice low clocks for this combination. I have had the same monitor setup over the last 5-6 GPUs, and all of them have run at increased clocks at one point or another. I'm currently using an Nvidia GPU, and the last fix for this was sometime in the middle of last year, if I remember correctly.

This is how it looked in old Radeon Drivers:

Amd/comments/etfm7a (that's for video)

Or this:

And now we have just this: www.amd.com/en/support/kb/faq/dh3-021

A mismatch in resolutions and refresh rates between monitors could well be the problem, but I use four identical 1920×1200 60 Hz IPS monitors, so a resolution/refresh-rate mismatch should be ruled out, at least in my case.

I still believe this could probably be fixed at the driver level, but it might be an architectural under-optimization, since Polaris, RDNA1, and RDNA2 all suffer from this overconsumption problem while Vega-based GPUs (Vega 56/64, Radeon VII) do not. Nvidia's GPUs also seem not to have suffered from it since the release of the Kepler architecture.

Suppose you want manual setup: do you know whether your monitor supports DDC/CI, or do you just blindly trust Nvidia? It's just like G-Sync Ultimate: the graphics card supports it, but the monitor is only G-Sync Compatible. Are you sure it works just because the control panel says so? As I said, manual settings are useless, as most of them do not conform to ICC profiles, which come from the monitor driver, from manual calibration with X-Rite or Datacolor SpyderX hardware, or from a factory pre-calibrated monitor. The idea of a manual override is good, but there's no guarantee its value won't change in games or apps with built-in ICC toggles (e.g. Adobe, DaVinci).