Wednesday, July 13th 2016

DOOM with Vulkan Renderer Significantly Faster on AMD GPUs

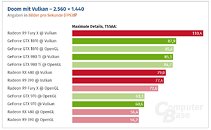

Over the weekend, Bethesda shipped the much-awaited update to "DOOM", which can now take advantage of the Vulkan API. A performance investigation by ComputerBase.de comparing the game's Vulkan renderer to its default OpenGL renderer reveals that Vulkan benefits AMD GPUs far more than it does NVIDIA ones. At 2560 x 1440, an AMD Radeon R9 Fury X with Vulkan is 25 percent faster than a GeForce GTX 1070 with Vulkan. With the OpenGL renderer on both GPUs, the R9 Fury X is 15 percent slower than the GTX 1070. Vulkan increases the R9 Fury X frame-rates over OpenGL by a staggering 52 percent! Similar performance trends were noted at 1080p. Find the review in the link below.
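The three percentages quoted above also imply how much the GTX 1070 itself gains from Vulkan. The following is a rough back-of-the-envelope sketch, assuming each figure is relative to the baseline named in its sentence and ignoring rounding in the quoted numbers; the variable names are illustrative only.

```python
# Relative performance at 2560 x 1440, normalized so that
# the GTX 1070 on OpenGL = 1.0. All inputs are the rounded
# percentages quoted in the article (an assumption about baselines).
fury_x_opengl = 0.85          # Fury X is 15% slower than GTX 1070 on OpenGL
fury_x_vulkan_gain = 1.52     # Vulkan lifts the Fury X by 52% over OpenGL
fury_x_vs_1070_vulkan = 1.25  # Fury X is 25% faster than GTX 1070 on Vulkan

# Fury X on Vulkan, relative to GTX 1070 on OpenGL.
fury_x_vulkan = fury_x_opengl * fury_x_vulkan_gain

# GTX 1070 on Vulkan, relative to GTX 1070 on OpenGL.
gtx_1070_vulkan = fury_x_vulkan / fury_x_vs_1070_vulkan

gtx_1070_vulkan_gain_pct = (gtx_1070_vulkan - 1.0) * 100
print(f"Implied GTX 1070 gain from Vulkan: {gtx_1070_vulkan_gain_pct:.1f}%")
```

Under these assumptions the GTX 1070 gains only around 3 percent from Vulkan, against 52 percent for the R9 Fury X.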

Source:

ComputerBase.de

200 Comments on DOOM with Vulkan Renderer Significantly Faster on AMD GPUs

Nvidia cards use preemption to compensate for the lack of asynchronous compute in their hardware.

I highly doubt that compute is being properly leveraged, too, given that Vulkan is a pretty new API and async compute is new as well, for that matter.

Nvidia would benefit, but they wanted to cut power usage for today's perf/watt.

Vulkan: 80.1 fps

DX11: 96.6 fps

It doesn't have the massive boost on GCN cards that Doom got--at least not yet. It is still beta.

Links to a couple of articles they had:

www.hardwareunboxed.com/gtx-1060-vs-rx-480-in-6-year-old-amd-and-intel-computers/

www.hardwareunboxed.com/amd-vs-nvidia-low-level-api-performance-what-exactly-is-going-on/

All we can draw from that benchmark is that it's pretty amazing that such an ancient CPU can even run that game at over 60 FPS. You could drop $50 on a G3258 and it would easily double the performance. If you don't have that, yet are buying an RX 480, there is something wrong.

Lowering CPU overhead is meant to let weaker CPUs push more FPS. Too early to tell just by looking at one title, though.

This is why I will not draw any conclusions based on one title. Where we had AMD and Nvidia doing the optimizations till now, we now (potentially) have every single developer to account for. In theory, everyone now only has to optimize for the API (kind of like coding for HTML5, not for a specific browser), but sadly, there will always be calls that work better on one piece of hardware than they do on the next. To be honest, it's not clear that smaller developers are even moving to Vulkan/DX12 at all. Interesting times ahead, though.

www.hardwareunboxed.com/gtx-1060-vs-rx-480-fx-showdown/

Results are quite schizophrenic: at 1080p with an FX 8350, the RX 480 is victorious on OGL but loses to the GTX 1060 on Vulkan. But at 1440p it's vice versa. I would say that on Vulkan the RX 480 is CPU limited at 1080p and GPU limited at 1440p, while the GTX 1060 is GPU limited at both resolutions. On OGL the GTX 1060 is CPU limited at 1080p but GPU limited at 1440p, while the AMD card is GPU limited at both resolutions.

Maybe the devs have some leftover code from the consoles' heterogeneous memory optimizations in the GCN code path :laugh: that would be ironic ... we have x86 everywhere, and instead of maintaining only x86+GCN and x86+CUDA code paths, you still have to maintain all the code paths separately, even the XB1 one, to benefit from that weird extra on-chip cache.