Friday, November 4th 2016

Intel Core i5-7600K Tested, Negligible IPC Gains

Ahead of its launch, a Core i5-7600K processor (not an ES) made its way to Chinese tech publication PCOnline, which wasted no time in putting it through its test bench, taking advantage of the BIOS updates with next-gen CPU support put out by several socket LGA1151 motherboard manufacturers. Based on the 14 nm "Kaby Lake" silicon, the i5-7600K succeeds the current i5-6600K, and could be positioned around the $250 price-point in Intel's product-stack. The quad-core chip features a clock speed of 3.80 GHz, a maximum Turbo Boost frequency of 4.20 GHz, and 6 MB of L3 cache. Like all its predecessors, it lacks HyperThreading.

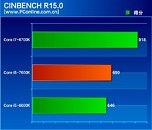

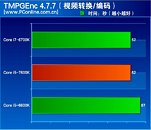

In its review of the Core i5-7600K, PCOnline found that the chip is about 9-10% faster than the i5-6600K, but that is mostly due to its higher out-of-the-box clock speeds (3.80/4.20 GHz vs. 3.50/3.90 GHz for the i5-6600K). Clock-for-clock, the i5-7600K is only about 1% faster, indicating that the "Kaby Lake" architecture offers negligible IPC (instructions per clock) gains over the "Skylake" architecture. The power-draw of the CPU appears to be about the same as that of the i5-6600K, which points to certain fab process-level improvements, given that the chip sustains higher clock speeds without a proportionate increase in power-draw. Most of the innovation appears to be centered on the integrated graphics, which is slightly faster and has certain new features. Find more performance figures in the review link to PCOnline below.
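As a rough sanity check on those numbers, the sketch below separates the clock-speed contribution from the overall speedup. It assumes performance scales linearly with clock speed (performance ≈ IPC × frequency), which is a simplification and not necessarily how PCOnline derived its clock-for-clock figure; the function names are made up for this illustration.

```python
# Back-of-the-envelope estimate, assuming performance ~ IPC * frequency.
# The helper names are hypothetical; the clock speeds and the 9-10% figure
# come from the article text.

def clock_ratio(new_ghz: float, old_ghz: float) -> float:
    """Return how much faster the new chip is clocked (1.0 = same clock)."""
    return new_ghz / old_ghz


def implied_ipc_gain(total_speedup: float, clock_speedup: float) -> float:
    """Return the fractional IPC gain left over after removing the clock gain."""
    return total_speedup / clock_speedup - 1.0


# i5-7600K vs. i5-6600K, base and Turbo Boost clocks in GHz.
ratios = {
    "base": clock_ratio(3.80, 3.50),
    "turbo": clock_ratio(4.20, 3.90),
}

for total in (1.09, 1.10):  # the reported 9-10% overall speedup
    for label, ratio in ratios.items():
        gain = implied_ipc_gain(total, ratio)
        print(f"total {total:.2f}x, {label} clock {ratio:.3f}x "
              f"-> implied IPC gain {gain * 100:.1f}%")
```

Under that linear-scaling assumption, the clock advantage alone accounts for roughly 8 to 9 percentage points of the speedup, leaving an implied IPC gain of between about 0% and 2%, which is consistent with the roughly 1% clock-for-clock figure reported.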

Sources:

PCOnline.com.cn, WCCFTech

116 Comments on Intel Core i5-7600K Tested, Negligible IPC Gains

Of course, AMD was so intent on beating Intel to market with a 64-bit desktop processor, that they had to leave out other features on the processor. Like dual-channel memory...which just killed their performance. It wasn't until Socket 939 was released that we got to really see what desktop 64-bit processors from AMD were really capable of, and when AMD really started to lay the beating down on Intel in performance.

The lack of dual-channel memory, however, only affected the older Socket 754 CPUs; Socket 939 and 940 chips, including the 2003 "Sledgehammer" Athlon 64 (FX-51), had dual-channel memory. The Athlon 64 3200+, which was released at the same time, was limited to single channel on Socket 754.

But hardware can and does degrade when we go outside the manufacturer's specifications.

Heat, overclocking, humidity, etc. may shorten the lifetime even more.

The same can't be said about some of the other components in the systems though. Cheap electrolytic capacitors, for example, can sometimes start to degrade within a year. And I've seen computers that were only a few years old with caps that tested way out of spec, even though they looked fine (though some didn't look so fine). The computer was unstable for sure, and a recap of the motherboard fixed it.

I still have an old Athlon XP (Thoroughbred) somewhere, plus an Intel 6600 quad core, a Core 2 Duo E8200 and an i7 860 (I think) :D With mobo and everything. I bet they still work :)

Also, both chips can lower their voltage at stock speeds.

Table shows different what goes with that will generate more heat.

Here man... I googled some results for you regarding IPC where you can validate my UNDERESTIMATE of its IPC performance. ;)

www.anandtech.com/show/9483/intel-skylake-review-6700k-6600k-ddr4-ddr3-ipc-6th-generation/9

Cliff's: So closer to 15%, not 5%, as I read that link. ;)

imo we aren't going to see a lot more gains until Intel moves away from silicon. Necessity is the mother of invention so I guess it comes down to whether Intel believes that there really needs to be significantly faster CPUs than we already have for the average user to justify the costs of R&D.

You also have a 1080 which has a glass ceiling on it in some titles from the CPU.

Minimal IPC gain, but I guess it's time to upgrade for newer features, i.e. PCI-E 3 and M.2. I'd probably gain 7-12 fps, and that's the dilemma: is dropping $500-600 for little gain worth it?

That 2600K though, the most worthwhile CPU I ever bought! Nah, Intel is just freaking slacking.

Other workloads, such as photo and video editing, might scale better on newer CPUs.

Laptops are a completely different thing

The power gain from Kaby Lake + DDR4 isn't really worth it right now if I'm only looking at games/general performance for the money. I'm more concerned with newer tech, but I feel like that tech (USB 3.1 Gen 2, NVMe, Thunderbolt, etc.) isn't mainstream enough to really be an investment for the future.

How many PCIe NVMe drives are on the market right now? It's pretty limited.

What about USB Type-C?

How many mobos support alternative uses of USB 3.1 Type-C, like HDMI? (No wait, scratch that, I hate dongles.)

Haswell and Skylake were new architectures over Sandy Bridge; both featured improved, larger prefetchers and other improvements that offer limited IPC gains, highly dependent on workload.

AVX 2 was introduced with Haswell.