Monday, September 3rd 2018

NVIDIA GeForce RTX 2080 Ti Benchmarks Allegedly Leaked, Twice

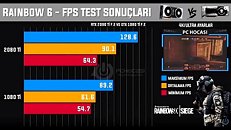

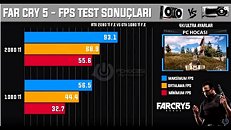

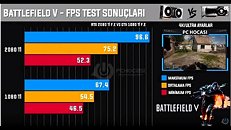

Caveat emptor: take this with a grain of salt, along with all the usual warnings that apply to rumors about hardware performance. That said, a Turkish YouTuber, PC Hocasi TV, posted and then quickly took down a video going through his benchmark results for NVIDIA's new GPU flagship, the GeForce RTX 2080 Ti, across a plethora of game titles. The results are not out of line, but some of the titles are in beta (Battlefield 5) or are online shooters (PUBG), so a second grain of salt is needed to season this gravy.

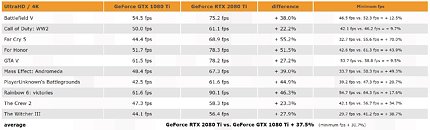

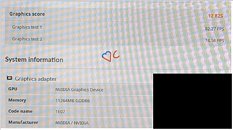

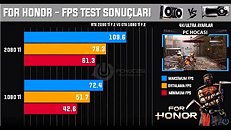

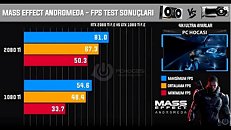

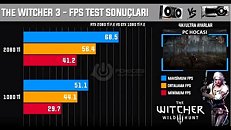

As it stands, 3DCenter.org put together a nice summary of the relative performance of the RTX 2080 Ti compared to last generation's GeForce GTX 1080 Ti. Based on these results, the RTX 2080 Ti is approximately 37.5% faster than the GTX 1080 Ti in average FPS and ~30% faster in minimum FPS. These figures are in line with hardware analysts' expectations, and the timing of these results, so close to the GPU's launch, does lend some credence to the numbers. Adding to this leak is yet another, this time based on a 3DMark Time Spy benchmark.

The second leak in question comes from an anonymous source who sent VideoCardz.com a photograph of a monitor displaying a 3DMark Time Spy result for a generic NVIDIA graphics device with the code name 1E07 and 11 GB of VRAM on board. Its graphics score of 12,825 is approximately 35% higher than the average score of ~9500 for the GeForce GTX 1080 Ti Founders Edition. This increase closely matches the average increase seen in the game benchmarks, and if these numbers hold up with release drivers, the RTX 2080 Ti brings a decent but not overwhelming performance increase over the previous generation in titles that do not use real-time ray tracing. As always, look out for a detailed review on TechPowerUp before making up your mind on whether this is the GPU for you.

For those interested, screenshots of the first set of benchmarks are attached (taken from Joker Productions on YouTube).
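The "approximately 35% higher" figure for the Time Spy leak is a plain ratio of the two graphics scores. The minimal Python sketch below reproduces that arithmetic using only the numbers quoted in the article. Keep in mind that ~9500 is itself an approximate average for the GTX 1080 Ti Founders Edition, and that the helper name `percent_uplift` is hypothetical.

```python
def percent_uplift(new_score: float, old_score: float) -> float:
    """Return how much higher new_score is than old_score, in percent."""
    return (new_score / old_score - 1.0) * 100.0


# Scores quoted in the article: the leaked RTX 2080 Ti (1E07) graphics score
# and the approximate (rounded) GTX 1080 Ti Founders Edition average.
leaked_2080ti_score = 12_825
avg_1080ti_fe_score = 9_500

uplift = percent_uplift(leaked_2080ti_score, avg_1080ti_fe_score)
print(f"RTX 2080 Ti leak vs. GTX 1080 Ti FE: {uplift:.1f}% higher")
```

Because the baseline is an average rather than a single run, the result shifts by a few points depending on which 1080 Ti score you compare against.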


86 Comments on NVIDIA GeForce RTX 2080 Ti Benchmarks Allegedly Leaked, Twice

forums.guru3d.com/threads/rtx-2080ti-versus-gtx-1080ti-game-benchmarks-allegedly-first-leaks-appear-online.422790/#post-5580623

forums.guru3d.com/threads/rtx-2080ti-versus-gtx-1080ti-game-benchmarks-allegedly-first-leaks-appear-online.422790/#post-5580626

As for the 2080ti and the sad people who need to post everywhere that they pre-ordered one... the joke's on you :D Enjoy those 10 Giga Rays at 30 fps.

25-30% best case seems accurate and as predicted. It also puts the 2080 and 2070 in perspective; those are literally worthless now. It also means the Pascal lineup will hold its value, making our second-hand sales that much more valuable. Thanks Nvidia ;)

I was the only 4K gamer (now 2K at 144Hz) in a group of 1080p screen users, and I could see and snipe people with iron sights that they couldn't see with 4x scopes.

There is a reason people lower their res in competitive shooters, much more than there is upping it so you can camp in the bushes. It feels to me you're grasping at straws here, sry...

That may have been an issue with my mouse or even a latency issue on the TV (it wasn't exactly made for PC gaming), but it's still relevant.

The main reason people use lower-res monitors is that that's what those older games were designed for... even on a 40" TV you should see how tiny 4K was, and that's the real reason 1440p is more popular: UI scaling isn't prevalent yet, and even at the operating system/common apps level it's still immature tech.

This has nothing to do with UI scaling, in competitive shooters nobody looks at a UI, you just know what you have at all times (clip size, reload etc is all muscle memory). For immersive games and for strategy and isometric stuff, yes, high res and crappy UI visibility go hand in hand... I can imagine a higher res is nice to have for competitive games such as DOTA though.

I've got 1080p, 1440p and 4K in the room with me and direct experience with all of them, even at LAN parties dealing with retro games. Some games work great no matter the resolution (Call of Duty 2 runs with no issues in Windows 10 at 4K, for example) and others are very broken (Supreme Commander works great at 1440p 144Hz despite its 100fps cap, but the UI is utterly broken at 4K).

No competitive gamer is going to choose a resolution, practise and learn it, and then go to an event with the hardware provided and have all their muscle memory be out of whack.

That was based on the difference in cores and transistors, not including clock speed. Of course, since I know I will be told this: yes, it's really just theoretical, but it's obviously pretty damn close. Also, given that my numbers actually aren't far off from the released benchmark numbers, I'd say yes, if you want max quality, go RTX 2080 Ti. There won't be any better GPUs coming in the next two years anyway, other than perhaps Titan versions, and nothing on the AMD side.

Most people don't notice much difference when going beyond either 4K or 60fps (nor are they willing to pay for it).

Of course there will be people who want to play on multiple screens or 144Hz.

And since there's demand, there should be a "halo" product that can do that for a premium. I'd imagine that by 2025 such GPUs will have no problem with 4K@144fps or triple 4K@60fps.

But wouldn't it make sense for the rest of GPU lineup to concentrate on 4K@60fps and differentiate by image quality (features, effects)?

I mean... Nvidia currently makes 10 different models per generation (some in two RAM variants). Do we really need that? :-)

But instead we get this inefficient modest upgrade so they can sell defective compute cards to oblivious gamers.

Looking forward to the TPU review though.

Leaving out percentage comparisons in favor of direct fps comparisons would be an absolutely awful idea.

The point is we do know AMD is bringing 7nm cards THIS year. But it's up in the air if it is Vega or Navi (or both), and if it will be a full or a paper launch. I just wouldn't bother with overpriced and soon outdated 12nm products.

www.techpowerup.com/forums/threads/amd-7nm-vega-by-december-not-a-die-shrink-of-vega-10.247006/

How much will 7nm and 4-stack HBM2 bring to Vega anyway? It's 1080 performance. If the 2080 Ti is 1.35-1.4x the 1080 Ti, that's 1.35-1.4 times 1.3x, so about 1.8x Vega 64. 7nm Vega being 80% faster? Don't think so... 7nm Vega can probably beat the 16nm 1080 Ti, though not by much. That's 2080 performance, in other words, and 10-15% faster than the 2070. Worth waiting months for? If you've got money for a GPU now, I don't think so; you might as well pick up a 1080 Ti when they drop in price, since there won't be much difference from 7nm Vega.
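The estimate in this comment chains two relative-performance ratios: if card A is r1 times card B and B is r2 times card C, then A is r1 × r2 times C. The small Python sketch below walks through that step. The inputs are the commenter's assumptions, not measured data: 1.35-1.4x for the 2080 Ti over the 1080 Ti, and 1.3x for the 1080 Ti over Vega 64. The helper `chain_ratios` is hypothetical.

```python
def chain_ratios(*ratios: float) -> float:
    """Multiply relative-performance ratios: (A/B) * (B/C) = A/C."""
    result = 1.0
    for r in ratios:
        result *= r
    return result


# Commenter's assumed inputs (estimates, not benchmark results).
rtx2080ti_vs_1080ti = (1.35, 1.40)  # range taken from the leaks
gtx1080ti_vs_vega64 = 1.3           # rough rule of thumb

low = chain_ratios(rtx2080ti_vs_1080ti[0], gtx1080ti_vs_vega64)
high = chain_ratios(rtx2080ti_vs_1080ti[1], gtx1080ti_vs_vega64)
print(f"RTX 2080 Ti is roughly {low:.2f}x to {high:.2f}x a Vega 64")
```

The chained range comes to roughly 1.76x to 1.82x, which is where the "about 1.8x" figure comes from. Errors in each input ratio compound as well, so the result is only as good as the two estimates.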