Friday, April 5th 2019

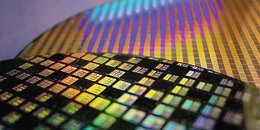

TSMC Completes 5 nm Design Infrastructure, Paving the Way for Silicon Advancement

TSMC announced they've completed the infrastructure design for the 5 nm process, which is the next step in silicon evolution when it comes to density and performance. TSMC's 5 nm process will leverage the company's second implementation of EUV (Extreme Ultraviolet) technology (after it is first integrated into their 7 nm process), allowing for improved yields and performance benefits.

According to TSMC, the 5 nm process will enable up to 1.8x the logic density of their 7 nm process, a 15% clock speed gain due to process improvements alone on an example Arm Cortex-A72 core, as well as SRAM and analog circuit area reduction, which means a higher number of chips per wafer. The process is being geared for mobile, internet, and high-performance computing applications. TSMC also provides online tools for silicon design flow scenarios that are optimized for their 5 nm process. Risk production is already ongoing.
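To put the quoted figures in perspective, here is a rough back-of-the-envelope sketch. It only restates the "up to 1.8x" logic density and 15% clock figures as arithmetic. The function names and the 2.0 GHz baseline clock are assumptions made for illustration, and the calculation covers logic only, since no scaling numbers are given for SRAM or analog circuits.

```python
# Back-of-the-envelope scaling from the quoted figures (a sketch, not TSMC data).

LOGIC_DENSITY_GAIN = 1.8   # "up to 1.8x" logic density vs. 7 nm (from the article)
CLOCK_GAIN_PCT = 15.0      # 15% clock gain on the example Cortex-A72 (from the article)


def logic_area_scale(density_gain: float) -> float:
    """Relative area of the same logic block after a density gain."""
    return 1.0 / density_gain


def scaled_clock(base_ghz: float, gain_pct: float) -> float:
    """Clock after applying a percentage gain to a baseline clock."""
    return base_ghz * (1.0 + gain_pct / 100.0)


if __name__ == "__main__":
    scale = logic_area_scale(LOGIC_DENSITY_GAIN)
    print(f"Logic area relative to 7 nm: {scale:.3f}")
    print(f"Logic area reduction: {(1.0 - scale) * 100:.1f}%")

    # Assumed baseline clock for illustration only; not a figure from TSMC.
    hypothetical_base_ghz = 2.0
    print(f"Clock after gain: {scaled_clock(hypothetical_base_ghz, CLOCK_GAIN_PCT):.2f} GHz")
```

In other words, a best-case 1.8x density gain means the same logic fits in a bit more than half the area. Actual die shrink would be smaller wherever SRAM and analog blocks scale less than logic.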

Source:

TSMC

52 Comments on TSMC Completes 5 nm Design Infrastructure, Paving the Way for Silicon Advancement

I'll be frank but as polite as I can.

You seem like a nice guy (piracy stuff put aside). I know you're building/tuning PCs and you know a lot about it (more than me for sure).

You have absolutely no idea about enterprise computing. It's obvious to me and every forum member in this business will sense it as well.

It also seems you have limited understanding of how casual PC users do stuff today (you don't believe in cloud, you're not very enthusiastic about laptops etc).

Learn something about datacenters and cloud if you really want to. Or keep doing what you're good at. I don't care.

R7 perf per watt numbers aren't that much better than Vega, and still behind the 1080 Ti,

and that's mostly due to the massive bandwidth increase. The process itself left most of us unimpressed.

I'm a simple guy, I look at the basics mostly and prefer numbers to intricate theories :)

I don't really jump on hype trains either. Like, I do understand the high expectations for Zen 3000, but does early 7nm really produce that much better parts than refined 14/16nm? And do we really need two CCXs and two dies for a six-core CPU in 2019?

However, we're a bit off-topic here. Let's agree to disagree and rope it in a bit.

While your point is easy to see, the jump to 7nm was and remains a difficult one. The performance improvements offered by 7nm are compelling and substantial. For Turing, a jump to 7nm would be a serious advantage, as it would offer that much more performance to that GPU platform, to say nothing of a jump to 5nm.

Intel's tablet/ultrabook platform is doing perfectly fine.

I'd argue that Intel is at the forefront of WiFi, IoT, AI (car AI in particular) and non-volatile memory of all sorts.

They may not be first or second in some products, but they're in the top 3 of almost everything computer-related. That's not a bad position.

Intel is a huge company, but they still want to grow. They're trying new things all the time. Some will fail, some will stick. You can't expect them to be best at everything they try.

By comparison, AMD has the "comfort" of focusing on CPUs. But if they ever reach those 30-50% they used to have some time ago, they'll need to look into other possibilities as well. In fact exactly that happened more than a decade ago, when they bought ATI.

Look at Samsung. They're a chaebol, they make countless things in very different industries.

At some point Samsung decided they'll make mirrorless cameras. And many agree that what they came up with was the most advanced gear at that time. And you know what? They quit. It didn't match their profit expectations.

The fact that Intel (or any other company) leaves a particular business doesn't mean they failed to deliver a good product. It means it doesn't provide the financial figures they require.

"Cloud" is not a paid service that Google, MS and Amazon sell you. Cloud is a concept. You can set up your private cloud on your private server.

It's not necessarily more efficient than the current Non-EUV process.

And keep in mind Nvidia did gain a lot more performance by going from 28nm to 16nm than AMD did so Radeon VII might not be the best example.

Have fun with your 60 fps 4k on Google Stadia :) I will be doing 165hz 1440p thanks, take care now.

Rumors are the 3700X hits a 5 GHz boost. If that's true and I can OC it to hold on all 8 cores... Intel might even lose the IPC battle.

Risk Production for 7nm started around April 2017. It is now April 2019 and we still don't have meaningful 7nm. I don't know if I really count VII, it is 7nm but it may as well not have been.

So if we take it that 7nm Ryzen launches at Computex and 5nm risk production started in January, two and a half more years puts us at June 2021. Do you think AMD is going to sit on the 3000 series until 2021? And of course 5nm is going to be perfect on the first try. I mean it isn't like Intel is having problems or anything.

I don't.

Keep in mind that clocks aren't solely determined by node but also by architecture.

We feed them with Lamb Steak and Orange Juice Instead of electricity. :D

That's 9 months before we hear how well this is doing in risk production, isn't it?

Probably not going to rule the world this year, possibly for 2020 H2 phones of the top-end variety, if it all goes well.

And personally I expect cloud uptake for gaming to be big, but the bells have tolled for PC gaming before, and the cutting edge can still only be had one way: PC.

It will shrug off this assault (cloud) for a great many years yet imho, since too many things are not quite there universally, i.e. bandwidth.

Listen, you'll see how much of a 'real deal' TSMC's first gen of 7nm is when our fave Nvidia uses it for their next generation of graphics cards. It will blow Turing out of the water, believe me. Radeon 7 should not be used as a gauge of its merits, as that is basically a tweaked 7nm Pro card (MI60) repurposed for gaming.

The new nodes will make it possible to squeeze more cores into small dies, but it will come at a cost. Firstly, the node efficiency gains alone are not enough to maintain clock speeds, and current Coffee Lake and Zen+ CPUs are already in throttle territory. The other problem with more cores is diminishing returns, as most non-server workloads are synchronized. This means that in reality adding many more cores doesn't really compensate for slower cores. In fact, with ever-increasing core counts, core speed becomes more important to maintain good performance scaling.
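The diminishing-returns point can be illustrated with Amdahl's law, the standard textbook model for this. The parallel fraction (0.6) and the 15% clock penalty below are made-up numbers chosen purely for illustration, not measurements of any real CPU, and the function names are hypothetical.

```python
# Amdahl's law sketch: more-but-slower cores vs. fewer-but-faster cores.
# All inputs below are illustrative assumptions, not benchmark data.


def amdahl_speedup(cores: int, parallel_fraction: float) -> float:
    """Speedup over one core when only part of the work runs in parallel."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


def relative_performance(cores: int, relative_clock: float,
                         parallel_fraction: float) -> float:
    """Amdahl speedup scaled by per-core clock relative to a baseline core."""
    return relative_clock * amdahl_speedup(cores, parallel_fraction)


if __name__ == "__main__":
    p = 0.6  # assumed share of the workload that parallelizes
    fewer_fast = relative_performance(cores=8, relative_clock=1.00, parallel_fraction=p)
    more_slow = relative_performance(cores=16, relative_clock=0.85, parallel_fraction=p)
    print(f" 8 cores at 100% clock: {fewer_fast:.2f}x")
    print(f"16 cores at  85% clock: {more_slow:.2f}x")
```

With these assumed inputs, the 8 faster cores come out ahead of the 16 slower ones. The result flips as the parallel fraction approaches 1, which is why heavily parallel server workloads benefit more from added cores.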

So, with clock speeds stalled once again, the future of performance scaling pretty much depends on what we commonly refer to as "IPC". Intel's upcoming Sunny Cove/Ice Lake and Golden Cove (2021) will both feature IPC gains. The other big area of improvement is SIMD, namely AVX. Ice Lake will bring AVX-512 to the mainstream, and while Zen 2 will bring an appreciated doubling of AVX2 performance, it still lacks AVX-512 for now. AVX is in general underutilized, which is sad, since AVX and multithreading combined scale incredibly well for both vendors.

EUV is expected to increase production speed and improve yields, perhaps even giving a small performance increase too.

Both Intel and TSMC have struggled a lot on their new nodes. It's been a year since Intel started shipping their first 10nm parts, and the volumes have been really low, and the yields I guess are embarrassingly low.

TSMC have been somewhat more successful with their "7nm" HPC node producing medium-sized chips, but are still unable to ship anything in volume. I'm really curious how this is going to pan out for Zen 2, which luckily has its "chiplet" design, but I still wonder what kind of tricks TSMC may have up their sleeve, since shipping "hundreds" of chips this time is not going to cut it.

I think the point was that Vega 64 => Radeon VII is the same architecture on two different nodes, and with a roughly comparable configuration.

Maxwell => Pascal featured some architectural improvements and a large increase in shader processors, which is something Radeon VII did not get.

What we have today is not what our civilization is capable of, it is what is economically feasible. Totally different approach, but far more realistic if you ask me. You act like CPU development is carried along in great science endeavours like CERN - it's not. It's a completely different ball game with a completely different dynamic. This is mostly true because CPU/GPU performance is scalable and it's a mass consumer product.

Node shrinks are one primary example of an economic problem. It's only worth it if you can sell it. But we can do it no problem, it just takes a huge amount of time and resources. For GPUs, the reason they developed as they did is that demand changed over time.

However, I would say that these fabs have almost caught up with Intel.

However, all of that is purely academic, 7nm you can buy trumps 10nm you can't, no matter the actual density :D