Wednesday, March 4th 2020

Three Unknown NVIDIA GPUs' Geekbench Compute Scores Leaked, Possibly Ampere?

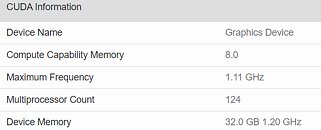

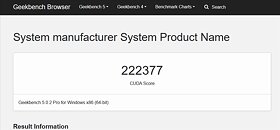

(Update, March 4th: Another NVIDIA graphics card has been discovered in the Geekbench database, this one featuring a total of 124 CUs. That could amount to 7,936 CUDA cores, should NVIDIA keep the same 64 CUDA cores per CU, though this ratio has changed in the past: NVIDIA halved the number of CUDA cores per CU from Pascal to Turing. The 124-CU graphics card is clocked at 1.1 GHz, features 32 GB of HBM2e, and scores 222,377 points in the Geekbench benchmark. We again stress that these may just be engineering samples with conservative clocks, and that final performance could be even higher.)
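The 7,936 figure is a simple multiplication, and it is only as good as the cores-per-CU assumption. A minimal sketch, assuming each Geekbench "CU" corresponds to one NVIDIA streaming multiprocessor; the 128-per-CU case is included only to show how far the estimate would move under a consumer-Pascal ratio, not as a prediction.

```python
def estimate_cuda_cores(compute_units: int, cores_per_cu: int = 64) -> int:
    """Estimate CUDA cores from a reported compute-unit (SM) count.

    Assumes every CU has the same number of FP32 CUDA cores.
    """
    return compute_units * cores_per_cu


# 64 cores/CU matches Volta/Turing; 128 cores/CU is the consumer-Pascal
# ratio and is used here purely as a hypothetical comparison.
for ratio in (64, 128):
    cores = estimate_cuda_cores(124, ratio)
    print(f"124 CUs x {ratio} cores/CU = {cores:,} CUDA cores")
```

The same 64-per-CU assumption reproduces the core counts reported for the two earlier entries (118 and 108 CUs).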

NVIDIA is expected to launch its next-generation Ampere lineup of GPUs during the GPU Technology Conference (GTC), held from March 22nd to March 26th. Just a few weeks before the release of these new GPUs, Geekbench 5 compute scores measuring the OpenCL performance of unknown GPUs, which we assume are part of the Ampere lineup, have appeared. Thanks to Twitter user "_rogame" (@_rogame), who obtained the Geekbench database entries, we have some information about the CUDA core configuration, memory, and performance of the upcoming cards.

In the database, there are two unnamed GPUs. The first has 7,552 CUDA cores running at 1.11 GHz. Equipped with 24 GB of VRAM of unknown type, it is configured with 118 Compute Units (CUs) and achieves an impressive score of 184,096 in the OpenCL test. Compared to the V100, which scores 142,837 in the same test, that is an improvement of almost 30%. Next up is a GPU with 6,912 CUDA cores running at 1.01 GHz and featuring 47 GB of VRAM. This is a less powerful model with 108 CUs, scoring 141,654 in the OpenCL test. One thing to note is the odd memory configuration of both models: 24 GB for the more powerful model and 47 GB (which should presumably be 48 GB) for the weaker one. The results are not recent, as they date back to October and November, so these may well be engineering samples, and clock speeds and memory configurations could change before launch.
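To put the "almost 30%" claim in numbers, the leaked scores can be expressed relative to the V100 result. A minimal sketch using only the scores quoted in this article; it assumes the 124-CU entry from the update was run in the same OpenCL test, which the update does not state explicitly.

```python
# Geekbench 5 OpenCL score quoted for the V100 in the article.
V100_SCORE = 142_837

# Leaked entries as reported: (reported clock in GHz, Geekbench score).
leaked = {
    "118-CU part": (1.11, 184_096),
    "108-CU part": (1.01, 141_654),
    "124-CU part": (1.10, 222_377),  # March 4th update; same test type assumed
}


def percent_vs_v100(score: int) -> float:
    """Percentage difference of a Geekbench score relative to the V100 result."""
    return (score / V100_SCORE - 1.0) * 100.0


for name, (clock_ghz, score) in leaked.items():
    print(f"{name} @ {clock_ghz:.2f} GHz: {percent_vs_v100(score):+.1f}% vs V100")
```

This is a raw score ratio: the 118-CU part lands about 29% ahead, the 108-CU part roughly on par (slightly behind), and none of it accounts for clock differences or the possibility of engineering samples.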

Sources:

@_rogame (Twitter), Geekbench

62 Comments on Three Unknown NVIDIA GPUs' Geekbench Compute Scores Leaked, Possibly Ampere?

www.anandtech.com/show/13923/the-amd-radeon-vii-review/15

www.anandtech.com/show/14618/the-amd-radeon-rx-5700-xt-rx-5700-review/13

Or maybe you're thinking of VRAM mapping, which could eat into your addressable RAM on 32-bit systems. It's going to take a while until we hit that limit again on 64-bit.

When I had 16 GB installed, it showed 8 GB shared. I just recently added another 16 GB, and now it shows 16 GB shared out of the 32 GB.

24 GB or more would be pretty pointless for games right now, since memory bandwidth, computational power and assets would all need to scale with it for that to make sense.

Take the RTX 2080 Ti, for instance, with 616 GB/s of memory bandwidth: if you're running at 120 FPS, it can touch at most about 5.1 GB during a single frame, and in practice much less than that, since memory traffic is not evenly distributed during frame rendering.
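The per-frame ceiling above is just bandwidth divided by frame rate. A minimal sketch using the 616 GB/s figure quoted in the comment; it yields an upper bound on memory traffic per frame, not the amount of unique data a frame needs, since the same bytes can be read or written several times.

```python
def max_traffic_per_frame_gb(bandwidth_gb_s: float, fps: float) -> float:
    """Upper bound on memory traffic per frame: bandwidth divided by frame rate."""
    return bandwidth_gb_s / fps


# RTX 2080 Ti bandwidth as quoted in the comment, at a few common frame rates.
for fps in (60, 120, 144):
    print(f"{fps} FPS: at most {max_traffic_per_frame_gb(616, fps):.2f} GB per frame")
```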

Games which actually need more than 8 GB will not use all of it during a single frame, but use it to store a larger world. This also means that some assets can be streamed.

While games are likely to slowly require more memory in the future, it will be a balancing act, and no one can accurately predict how much memory top games will actually need 3 to 5 years from now. So far, Nvidia have been very good at balancing resources on their GPUs, despite many predicting their cards would flop.

Even the CUDA compiler is open source, so if AMD (or Intel) wanted to, they could add support themselves.

CUDA is mostly used for custom software that runs on specific machines. CUDA offers a better ecosystem, better debugging tools, and more features (which enable more efficient implementations), so the choice is easy. The ones who keep complaining about CUDA seem to be the ones who don't know the first thing about it.

This is not true today.

You should not care about raw numbers; you should care about solid benchmarks showing how much you actually need, because all resources will ultimately be pushed beyond the point of diminishing returns.

The fact police have to correct you there. There are two misconceptions here:

1) 32-bit OS/hardware and the 4 GB memory limit:

There is no inherent relation between register width (e.g. a "32-bit" CPU) and address width. It's just a coincidence that some consumer 32-bit operating systems of the time supported at most 4 GB of RAM. You should read up on PAE (Physical Address Extension). Windows Enterprise/Datacenter (2000/2003/2008), Linux, BSD and Mac OS (Pro) supported more than 4 GB on 32-bit systems, provided the CPU and BIOS supported it (e.g. Xeons).
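The point about register width versus address width comes down to powers of two. A minimal sketch comparing plain 32-bit physical addressing with the 36-bit physical addresses of the original x86 PAE implementation; note that each process's virtual address space stays 32-bit even with PAE.

```python
GIB = 2**30  # bytes per GiB


def addressable_gib(address_bits: int) -> float:
    """Physical memory reachable with a given address width, in GiB."""
    return 2**address_bits / GIB


print(addressable_gib(32))  # plain 32-bit physical addressing
print(addressable_gib(36))  # 36-bit physical addressing with x86 PAE
```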

2) VRAM address space:

Unless you're running integrated graphics, VRAM is never a part of RAM's address space.

Even on a system like 32-bit Windows XP, where the address space is limited to 32 bits, the size of VRAM does not affect it at all. The upper part of the address space (typically 0.25-0.75 GB at the time) is reserved by the BIOS for I/O with PCIe devices etc., while VRAM itself is not directly addressable at all.
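The effect of that reserved region on a 32-bit OS without PAE can be sketched as simple subtraction. This is a simplified model under stated assumptions: it uses the 0.25-0.75 GB range from the comment and ignores firmware remapping of the hidden RAM above 4 GiB, which a PAE-capable OS could then use.

```python
def usable_ram_gib(installed_gib: float, reserved_gib: float, address_bits: int = 32) -> float:
    """RAM visible to an OS limited to `address_bits` of physical address space.

    Simplified model: the firmware reserves `reserved_gib` at the top of the
    address space for device I/O, and no RAM is remapped above that limit.
    """
    address_space_gib = 2**address_bits / 2**30
    return min(installed_gib, address_space_gib - reserved_gib)


for reserved in (0.25, 0.5, 0.75):
    print(f"4 GiB installed, {reserved} GiB reserved -> {usable_ram_gib(4, reserved)} GiB usable")
```

With 2 GiB installed, nothing is lost, because all of the RAM fits below the reserved region.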

2) I think you're right, but I'm not 100% sure. It's been a while since I read about this.

11 GB to 24 GB? Dream on. 16 GB is more likely, or some weirdness like 14 GB.

These are Quadros or Teslas; I think that is clear. For the odd one out who thinks 24 GB is somehow useful for gaming... k buddy.

All we know now is that Nvidia will succeed V100. In other news, water is wet.