Wednesday, March 4th 2020

Three Unknown NVIDIA GPUs GeekBench Compute Score Leaked, Possibly Ampere?

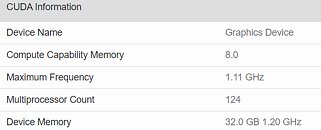

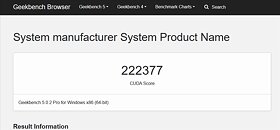

(Update, March 4th: Another NVIDIA graphics card has been discovered in the Geekbench database, this one featuring a total of 124 CUs. This could amount to some 7,936 CUDA cores, should NVIDIA keep the same 64 CUDA cores per CU, though this has changed in the past, as when NVIDIA halved the number of CUDA cores per CU from Pascal to Turing. The 124 CU graphics card is clocked at 1.1 GHz and features 32 GB of HBM2e, delivering a score of 222,377 points in the Geekbench benchmark. We again stress that these may just be engineering samples with conservative clocks, and that final performance could be even higher.)

NVIDIA is expected to launch its next-generation Ampere lineup of GPUs during the GPU Technology Conference (GTC), which runs from March 22nd to March 26th. Just a few weeks before that expected launch, Geekbench 5 compute scores measuring the OpenCL performance of unknown GPUs, which we assume are part of the Ampere lineup, have appeared. Thanks to Twitter user "_rogame" (@_rogame), who found the Geekbench database entries, we have some information about the CUDA core configuration, memory, and performance of the upcoming cards.

In the database, there are two unnamed GPUs. The first has 7552 CUDA cores running at 1.11 GHz. Equipped with 24 GB of VRAM of an unknown type, the GPU is configured with 118 Compute Units (CUs) and scores 184096 in the OpenCL test. Compared to a V100, which scores 142837 in the same test, that is an improvement of roughly 29%. Next up is a GPU with 6912 CUDA cores running at 1.01 GHz and featuring 47 GB of VRAM. It is the less powerful model, with 108 CUs and a score of 141654 in the OpenCL test. Both models have odd memory configurations: 24 GB for the more powerful model and 47 GB (which should be 48 GB) for the weaker one. The results are not recent, as they date back to October and November, so these may well be engineering samples, and the clock speeds and memory configurations might change before launch.
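The core counts and the V100 comparison quoted above are simple arithmetic, and a short Python sketch makes them easy to re-check. It assumes 64 CUDA cores per CU, as the article does, and uses only the figures quoted in this post; the names `cuda_cores` and `relative_gain` are made up for illustration.

```python
# Figures quoted in the article. 64 CUDA cores per CU is the article's
# assumption and may not hold for the final products.
CORES_PER_CU = 64
V100_SCORE = 142_837  # Geekbench 5 OpenCL score quoted for the V100

# Leaked entries: compute units and Geekbench 5 OpenCL score.
LEAKED = {
    "124 CU card": {"cus": 124, "score": 222_377},
    "118 CU card": {"cus": 118, "score": 184_096},
    "108 CU card": {"cus": 108, "score": 141_654},
}


def cuda_cores(cus, cores_per_cu=CORES_PER_CU):
    """Estimated CUDA core count for a given number of compute units."""
    return cus * cores_per_cu


def relative_gain(score, baseline=V100_SCORE):
    """Percentage difference of a score against the baseline score."""
    return (score / baseline - 1) * 100


if __name__ == "__main__":
    for name, gpu in LEAKED.items():
        cores = cuda_cores(gpu["cus"])
        gain = relative_gain(gpu["score"])
        print(f"{name}: {cores} CUDA cores, {gain:+.1f}% vs V100")
```

Under that assumption the 118 CU card works out to 7552 cores and the 108 CU card to 6912, matching the database entries, while the 108 CU card lands slightly below the V100 score.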

Sources:

@_rogame (Twitter), Geekbench

62 Comments on Three Unknown NVIDIA GPUs GeekBench Compute Score Leaked, Possibly Ampere?

And here's Radeon VII "smacking around" the 2080Ti: www.phoronix.com/scan.php?page=article&item=radeon-vii-rocm24&num=2

Keep in mind 2080 Ti is only on OpenCL 1.2.

To put it simply, why would researchers devote their time, energy and resources into OpenCL when nobody will even cite and use their work afterwards?

You do not need 16GB of VRAM to run either of these games at 4k. If you are running a ton of graphical mods on Cities, then you could push past the framebuffer. But that's mods. You could mod the likes of Skyrim to use 2-3x the VRAM of cards at the time, but that was not the native game, and mods are often not optimized like the base game is.

About the RE2 remake reporting memory usage wrong: I wonder if GPU-Z is also showing it wrong then, because I used GPU-Z the last time I looked, just to check, and Windows Task Manager just to see the usage.

edit: I didn't mean people use OpenCL, if that is what it looks like. What I was saying is that Nvidia exposed CUDA on all gaming GPUs so game developers can use it too and see how much more productive it is than other APIs.

If you drop a turbo into your car and overheat it because the radiator didn't have enough capacity for the increased load, is that the fault of the radiator? No. You modded the application and ran out of capacity; for its designed use case it works perfectly.

Citation needed, something that has been asked of you multiple times and you refuse to deliver. (Here's a hint: a site with 0 benchmarks or proof of what you are claiming makes you look like a total mong.)

Except those games do not need that. That has been proven to you already in this very thread by @bug, and is readily disproven by casual googling of these very games being played in 4k for reviews and gameplay videos showing them running just fine.

Good thing that isn't a problem with any game currently on the market; 8GB is currently sufficient for 4k.

What did you think we were talking about? RE2R "consumes" large amounts of VRAM because it is reserving way more than it actually needs. Much of that VRAM is unused, as is evident from the fact that lower-VRAM cards run the game fine without stuttering.

Let me help you here, Metroid: you came here making claims that games need more than 8GB of VRAM to play sufficiently in 4k. That has been proven false by information posted by other users. You have yet to post anything that backs up your claims. The burden of proof is on those making claims. That's you.

Since you seem so sure about this, how about you record video on your computer of the games you are talking about, show the settings you are using, run an FCAT test and FPS test for us, and use MSI Afterburner to verify VRAM usage and FPS results. It shouldn't take more than 10 minutes to run the benchmarks and a bit of time to post the resulting video to YouTube. It doesn't need to be edited or anything, just as long as it contains proof of what you are claiming.

Both leaked cards look like next-gen top Quadro models. RTX 6000 and 8000 had 24 and 48GB RAM respectively.

And what do you mean by AFR: some multi-card rendering method, or did you mix it up with VRR? VESA VRR and HDMI Forum VRR are different things. HDMI Forum VRR is currently supported by console manufacturers and Nvidia; AMD's support for it is still pending.

I don't think the CUDA license has any fee, but Nvidia can lock users into its hardware with CUDA.

CUDA is free to use (also commercially).

Furthermore, Nvidia cards obviously can run OpenCL programs, so it's not like anyone's forced to use CUDA.

I run OpenCL on my 7700K's UHD630 without any translation. I only need to have the drivers enabled. Intel also offers SDK support for their FPGAs to do OpenCL.

CUDA costs a bunch because you pay for the hardware. There is only one supplier of hardware that can run CUDA. I'd actually be really interested in wrapping CUDA and running it on non-NV hardware, but NV has never shied away from locking their software down as hard as possible.

I remember when I could have PhysX on while using a Radeon GPU to do the drawing.

I'm also very sure it would be very easy for NV to turn on OCL 2 support.

Edit: Isn't One API open? I thought it was basically OpenCL 3... I have to look into it more.

Edit 2: One API is basically a unified open standard that offers full cross platform use. According to Phoronix articles, porting it to AMD will be easy because Intel and AMD both use open source drivers, NV on the other hand locks the good stuff up with closed source drivers on Linux

Edit 3: Too many abbreviations in my head, I meant VRR instead of AFR. Somehow it was Adaptive Frame Rendering... LoL I meant the lovely piece of hardware that NV required for VRR rather than just supporting the DisplayPort spec. I mean it's kinda awesome that the Xbox One X supports VRR if you plug it into a compatible display.

Free to use on Nvidia hardware. AMD has to jump through hoops just to emulate some small parts.

Nvidia also completely gimps the GP-GPU performance of their more affordable GPUs. Want that performance? The cheapest option available to normal folk is the $3000 USD Titan V.

Yeah, you can use the older, less functional and less capable OpenCL 1.2. Want those newer features... Well, CUDA only on NV.

Yeah... Free... :rolleyes:

At least I'm a hater who uses GeForces and Quadros. :pimp:

Furthermore, you may or may not use it (since there are alternatives), so you actually have a choice (which you don't get with some other products).

And you can use it even when you don't own the hardware - this is not always the case.

In other words: there are no downsides. I honestly don't understand why people moan so much about CUDA (other than general hostility towards Nvidia).

That's absolutely not true. What you mean is FP32. But some software uses it and some doesn't. It's just an instruction set.

One could say AMD gimps AVX-512 on all of their CPUs.

Many professional/scientific scenarios are fine with FP16.

Phoronix tested some GPUs in PlaidML, which is probably the most popular non-CUDA neural network framework.

www.phoronix.com/scan.php?page=article&item=plaidml-nvidia-amd&num=4

2 things to observe here: how multiple Nvidia GPUs perform in FP16 and, as a tasty bonus, how they perform in OpenCL compared to Polaris.

I don't understand why people raise this argument. It simply makes you a miserable hater.

The point is moot though, the world seems to be set on CUDA by now. More precisely, the world seems to be set on anything that's not OpenCL.

"A quick rule of thumb is that you should have twice as much system memory as your graphics card has VRAM, so a 4GB graphics card means you'd want 8GB or more system memory, and an 8GB card ideally would have 16GB of system memory "

www.pcgamer.com/best-gpu-2016/

www.guru3d.com/articles-pages/gpu-compute-performance-review-with-20-graphics-cards,1.html

OpenCL Indigo GPU render test

The entire line of Radeon got absolutely destroyed. 2060 beating R7 which was hailed as "GCN, king of compute" or something. Big oof.

Blender, OpenCL, Radeons got creamed hard again. Surprisingly even Navi beats out the R7.

From what I have seen so far, Radeon cards are good for mining crypto-kitties. For professional work or scientific research, their OpenCL based approach is just too weak or too buggy for day to day use.