Thursday, July 16th 2020

The Curious Case of the 12-pin Power Connector: It's Real and Coming with NVIDIA Ampere GPUs

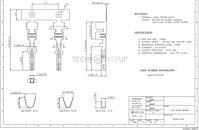

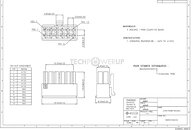

Over the past few days, we've heard chatter about a new 12-pin PCIe power connector for graphics cards, particularly from Chinese-language publication FCPowerUp, which included a picture of the connector itself. Igor's Lab also did an in-depth technical breakdown of the connector. TechPowerUp has some new information on this from a well-placed industry source. The connector is real, and will be introduced with NVIDIA's next-generation "Ampere" graphics cards. The connector appears to be NVIDIA's brainchild, and not that of any other IP or trade group, such as the PCI-SIG, Molex or Intel. The connector was designed in response to two market realities: that high-end graphics cards inevitably need two power connectors, where a single cable would be neater for consumers than wrestling with two; and that lower-end (<225 W) graphics cards can make do with one 8-pin or 6-pin connector.

The new NVIDIA 12-pin connector has six 12 V and six ground pins. Its designers specify higher-quality contacts on both the male and female ends, which can handle higher current than the pins on 8-pin/6-pin PCIe power connectors. Depending on the PSU vendor, the 12-pin connector can even split in the middle into two 6-pin halves, and could be marketed as "6+6 pin." The point of contact between the two 6-pin halves is kept level so they align seamlessly.

As for the power delivery, we have learned that the designers will also specify the cable gauge, and with the right combination of wire gauge and pins, the connector should be capable of delivering 600 W of power. In other words, it is not simply two 6-pin connectors added together (2*75 W = 150 W). Igor's Lab published an investigative report yesterday with some numbers on cable gauge that help explain how the connector could deliver a lot more power than a combination of two common 6-pin PCIe connectors.
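To put the 600 W figure in perspective, here is a rough sketch of the current each 12 V pin would have to carry. It assumes a nominal 12 V rail and a load shared evenly across pins. The function name is made up for illustration, and the 6-pin comparison assumes all three upper-row pins are wired to 12 V, which is common in practice but not something the article states.

```python
# Back-of-the-envelope check: current per 12 V pin for a given connector power.
RAIL_VOLTAGE = 12.0  # volts, nominal (assumption)


def amps_per_pin(total_watts: float, supply_pins: int, volts: float = RAIL_VOLTAGE) -> float:
    """Current each 12 V pin carries if the load is shared evenly across pins."""
    return total_watts / volts / supply_pins


# 600 W over the six 12 V pins described in the article.
twelve_pin = amps_per_pin(600, 6)

# 75 W over a classic 6-pin PCIe connector, assuming three 12 V pins are wired.
six_pin = amps_per_pin(75, 3)

print(f"12-pin at 600 W: {twelve_pin:.2f} A per pin")
print(f"6-pin at 75 W:   {six_pin:.2f} A per pin")
```

Under these assumptions each pin on the 12-pin connector carries roughly four times the current of a pin on a 6-pin connector at its rated 75 W, which would explain why contact quality and wire gauge are being specified.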

Looking at the keying, we can see that it will not be possible to connect two classic 6-pins to it. For example, pin 1 is square on the PCIe 6-pin, but on NVIDIA's 12-pin it has one corner angled. It also won't be possible to use weird combinations like 8-pin + EPS 4-pin, or similar; NVIDIA made sure people won't be able to connect their cables the wrong way.

On the topic of the connector's proliferation, in addition to PSU manufacturers launching new generations of products with 12-pin connectors, most prominent manufacturers are expected to release aftermarket modular cables that can plug into their existing PSUs. Graphics card vendors will include ketchup-and-mustard adapters that convert 2x 8-pin to 1x 12-pin, while most case/power manufacturers will release fancy aftermarket adapters with better aesthetics.

Update 08:37 UTC: I made an image in Photoshop to show the new connector layout, keying and voltage lines in a single, easy to understand graphic.

Sources:

FCPowerUp (photo), Igor's Lab

178 Comments on The Curious Case of the 12-pin Power Connector: It's Real and Coming with NVIDIA Ampere GPUs

There already exists a 12-circuit header housing, literally from the exact same connector series that the ATX 24-pin, EPS 8-pin and PCIe 6-pin come from (specifically, this part: www.molex.com/molex/products/part-detail/crimp_housings/0469921210). I don't even buy the argument about insufficient power handling capability. Standard Mini-Fit Jr. crimp terminals and connector housings are rated for up to 9A per circuit pair, so for the 12-circuit part, you're looking at 9A*12V*6 = 648W of power. This is around the same as the rough '600W' figure quoted in the article. You don't need a new connector along with a new crimp terminal design and non-standard keying for this. THE EXISTING ATX 12 PIN CONNECTOR WILL DO THE JOB JUST FINE. Not to mention, you can get Mini-Fit Jr. terminals that are rated for even higher amperage (13A for copper/tin crimp terminals when used with 18AWG or thicker conductors, IIRC, in which case the standard ATX 12-pin cable will carry even more power). This is literally just Nvidia trying to reinvent the wheel for no apparent reason.

As someone who crimps their own PSU cables, this is a hecking pain in the butt, and I hope whoever at Nvidia came up with this idea gets 7 years of bad luck for this. Just use the industry-standard part that already exists FFS.

/rant
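The arithmetic in the comment above can be written out as a small sketch. The 9 A and 13 A per-circuit ratings are the commenter's figures (the second one quoted "IIRC"), not values checked against a datasheet, and the helper name is hypothetical. The result is a ceiling that ignores any derating for temperature or bundled wires.

```python
def connector_capacity_watts(amps_per_circuit: float, circuit_pairs: int, volts: float = 12.0) -> float:
    """Upper-bound power for a connector, given a per-circuit current rating."""
    return amps_per_circuit * volts * circuit_pairs


# Figures as quoted in the comment (unverified): 9 A standard terminals,
# 13 A higher-current terminals, six 12 V/ground pairs in a 12-circuit housing.
standard = connector_capacity_watts(9, 6)
high_current = connector_capacity_watts(13, 6)

print(f"9 A terminals:  {standard:.0f} W")
print(f"13 A terminals: {high_current:.0f} W")
```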

As I've said, an external power brick for a modern GPU would be a PC PSU in an external case (thus costing upwards of 100 USD), as it would require 500W of output. Plus you'd need a multitude of thick cables and a connector occupying an entire slot on the back (there's no room for the power connector plus the regular video outputs), and that's another fan making noise and getting clogged.

2x8 pins may be barely enough for Ampere, but not for the future, and maybe not even for the 3080 Ti (or whatever name it comes out under); there are already 2080 Ti cards with 3x8 pin.

Yes, a wire can push 10+A, but they have to take into account the voltage drop and heating in the wire and the contact resistance of the connector. It's not that simple (otherwise we'd use one big thick cable instead of 2x8 pins, for example), and there's also the question of how flexible the cable is.
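For a feel of the voltage drop and heating mentioned above, here is a minimal sketch using Ohm's law. The resistance values are approximate textbook figures for copper wire at 20 °C, the 0.6 m cable length is an arbitrary assumption, and contact resistance at the connector is left out entirely, so real losses would be somewhat higher.

```python
# Approximate resistance of copper wire at 20 °C, in ohms per metre.
OHMS_PER_METRE = {18: 0.02095, 16: 0.01317}


def wire_loss(amps: float, length_m: float, awg: int) -> tuple[float, float]:
    """Return (voltage drop in V, heat in W) for one 12 V wire plus its ground return."""
    resistance = OHMS_PER_METRE[awg] * length_m * 2  # current flows out and back
    return amps * resistance, amps ** 2 * resistance


# 600 W at 12 V shared evenly over six wire pairs (assumption), 0.6 m cable.
amps = 600 / 12 / 6
for awg in (18, 16):
    drop, heat = wire_loss(amps, length_m=0.6, awg=awg)
    print(f"{awg} AWG: {drop:.2f} V drop, {heat:.2f} W of heat per wire pair")
```

Because heating scales with the square of the current, pushing more amps through each wire costs more than the linear voltage drop suggests, which is one reason thicker wire matters here.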

Anyway... This isn't a news story. This is someone downloading a drawing from a connector supplier and posting it as "news". Real news would be seeing the connector on the card itself. Am I right?

Now look what's popping up in my Google ads!

See... Not a "made up" connector.

Most PSUs have six 8-pin VGA ports on them, so I don't see this as an issue. It's just speculation that a new PSU will be needed, when most likely it just needs a new adapter or cable at worst.

A 30-series Titan RTX may need more, but those aren't exactly mainstream cards either.

Guess we'll find out sooner or later :-)

This might be because of the fact that the improvement from moving to Samsung 8nm is less than they expected, or simply that they intend to leave less performance on the table and place their cards more towards the right side of the voltage/frequency curve, sacrificing some efficiency for more raw power.

To be fair, we know nothing of the TDPs from team red, they might've gone up too, in spite of using TSMC's 7nm EUV.

RDNA2 on TSMC's 7nm seems to be quite formidable. We know a little. PS5 RDNA2 GPU is 2.2GHz in a ~100W package offering near 2080 performance.

A 350W RDNA2 GPU is going to give a 2080 Ti a run for its money.

And yes latest rumours from sources I trust state the top Big Navi will be around 40% faster than a 2080 Ti, so it will give that a run for its money as stated and challenge the 3080 Ti.

What I see is this... The 2080 Ti (FE) currently leads the 5700 XT (Nitro+) by 42% (1440p). With all the rumors about the high power use, even with a significant node shrink (versus AMD, who is tweaking) and a new architecture, you still think that is true?

I mean, I hope you're right, but from what we've seen so far, I don't understand how that kind of math even works out. You're assuming that with a die shrink and a new arch, Ampere will only be around the same amount faster than the 2080 Ti as the 2080 Ti was over the 1080 Ti (~25-30%), while a new arch and node tweak will gain upwards of 70% performance? What am I missing here?

If NV Ampere comes in at 300W+, I don't see RDNA2 coming close (split the difference between 2080 Ti and the Ampere flagship).

Even if he's only half-right, that doesn't bode well for the 3000-series.

It will also mean that Nvidia play dirty (using DLSS and black-box dev tools that hinder AMD/Intel performance to get the unfair advantage their inferior hardware needs). In the case of this generation, I suspect that means that proprietary RTX-specific extensions are heavily pushed, rather than DX12's DXR API. Gawd, I hope I'm wrong....

That's always the marketing battle to be fought and AMD has been very bad at this in the past. And Nvidia are masters at this game, just ask 3DFX, S3 and Kyro...

But this time there are AMD APU's in all the consoles that matter, and the whole Radeon marketing team has been changed. So I reckon they might have something resembling a strategy.

If he's right, it means AMD will have the superior hardware.

I hope you realise this is a speculation thread though and there's no hard evidence on Ampere or Big Navi yet.

Me? I'm sitting on the fence and waiting for real-world testing and independent reviews. I'm old enough to have seen this game between AMD/ATi and Nvidia played out over and over again for 20+ years. It would not be the first time that either company had intentionally leaked misleading performance numbers to throw off the other team.

www.techpowerup.com/gpu-specs/geforce-gtx-1080-ti.c2877

and use the relative performance graph to find top of the line cards launched in the same year. I found this for you:

www.techpowerup.com/gpu-specs/geforce-gtx-780.c1701

www.techpowerup.com/gpu-specs/radeon-hd-7990.c2126

www.techpowerup.com/gpu-specs/geforce-gtx-780-ti.c2512

In terms of performance per dollar or performance per transistor?

Currently they do. AMD's Navi10 is a reasonable competitor for TU106 but it is faster and cheaper than either the 2060S or the 2070 for a lower transistor count. It can't do raytracing, but arguably, TU106 is too weak to do it too. I've been wholly disappointed by my 2060S's raytracing performance, even with DLSS trying desperately to hide the fact it's only rendering at 720p. Heck, my 2060S can barely run Quake II or Minecraft ;)

In terms of halo/flagships?

- In 2008, Terascale architecture (HD 4000 series) ended a few years of rubbish from ATi/AMD and was better than the 9800GTX in every way.

- In 2010, Fermi (GTX 480) was a disaster that memes were born from.

- In 2012 Kepler (GTX680) had an edge over the first iteration of GCN (HD7970) because DX11 was too common. As DX12 games appeared, Kepler fell apart badly.

- In 2014 Kepler on steroids (GTX780Ti and Titan) tried to make up the difference but AMD just made Hawaii (290X) which was like an HD7970 on steroids, to match.

Nvidia has pretty much held the flagship position since Maxwell (900-series), and generally offered better performance/Watt and performance/Transistor even before you consider that they made consumer versions of their huge enterprise silicon (980Ti, 1080Ti, 2080Ti). The Radeon VII was a poor attempt to do the same and it wasn't a very good product even if you ignore the price - it was just Vega's failures but clocked a bit higher and with more VRAM that games (even 4K games) didn't really need.

So yeah, if you don't remember that the performance crown has been traded back and forth a lot over the years, then you need to take off your special Jensen-Huang spectacles and revisit some nostalgic YouTube comparisons of real games frequently being better on AMD/ATi hardware. Edit: or just look at BoBoOOZ's links.

I don't take sides. If Nvidia puts out rubbish, I'll diss it.

If AMD puts out rubbish, I'll diss that too.

I just like great hardware, ideally at a reasonable price and power consumption.

And later this year :p

Moore's Law Is Dead might as well have quoted me verbatim, though I can't remember where on here I called it.

And if the many rumours are as true as usual, i.e. bits are but 50% is balls, then it still doesn't look rosy for Nvidia this generation regardless.