Friday, August 14th 2020

NVIDIA GeForce RTX 3090 "Ampere" Alleged PCB Picture Surfaces

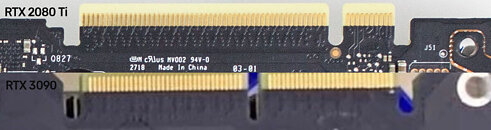

As we get closer to September 1st, the day NVIDIA launches its upcoming GeForce RTX graphics cards based on the Ampere architecture, we are getting even more leaks. Today, an alleged PCB of NVIDIA's upcoming GeForce RTX 3090 has been pictured and posted on social media. The PCB appears to be a third-party design coming from one of NVIDIA's add-in board (AIB) partners, Colorful. Most of the PCB is blurred out in the picture, and an Intel CPU covers the GPU die area to hide the information. There are 11 GDDR6X memory modules placed around the GPU, very close to it. Another notable difference is the NVLink finger, which appears to use a new design. Check out the screenshot of the Reddit thread and PCB pictures below:

Source: VideoCardz

72 Comments on NVIDIA GeForce RTX 3090 "Ampere" Alleged PCB Picture Surfaces

Edit: Typed wrong module number.

But as you say, we'll see in a few weeks' time. I'll be happy to be proven wrong - that would mean a series of very interesting and ambitious/silly design decisions, which will be very interesting to see play out. But until then, or until more conclusive evidence shows up, I'll remain skeptical.

I'm gonna be butthurt when I get the top tier card and then the next week there's one slightly better.

I guess expectations from the next gen GPU cards are already set in the right circles.

Can we reintroduce some common sense here and consider the fact Nvidia has always had a pretty tight lid on their actual releases? It gets out when they want it, generally. It's not AMD, guys. For a recent example, remember how SUPER surfaced for us. A little teaser and we were all left guessing. Almost nobody nailed it, and many people thought we would get a step above the 2080 Ti. That never happened.

We are looking at a PCB with some logos and a bloody full-sized Intel IHS, and imagination runs wild... Most of what we've seen doesn't actually line up all that well. Relax, sit back, and just wait it out ;)

Obviously.

Whatever amount of VRAM Nvidia has chosen for their upcoming cards, it's very unlikely to change right before the release, as this can't change after the final design goes into mass production.

I would agree with some common sense about rumors and "leaks"; most of them don't pass the sniff test. As of right now there are many rumors floating about, like: "Ampere will be very power efficient", "Ampere will be a power hog", "Ampere will have xx GB VRAM", "Nvidia will move to Samsung 8nm", etc. Contradiction usually indicates people are guessing, especially when it comes to details which are known internally 1.5-2 years ahead, like die configurations, memory buses, production node, etc. If "leakers" get things like this wrong, then they are making stuff up. Perhaps we should start to make a timeline of various "leaks"; that would make it obvious which of these "sources" are BS.

I'd argue reading your comments is a waste of time by your definition.