Thursday, September 6th 2018

NVIDIA TU106 Chip Support Added to HWiNFO, Could Power GeForce RTX 2060

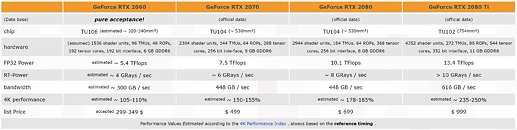

We are all still waiting to see how NVIDIA's RTX 2000 series of GPUs will fare in independent reviews, but that has not stopped the rumor mill from extrapolating. There have been alleged leaks of the RTX 2080 Ti's performance, and now we see HWiNFO add support for an unannounced NVIDIA Turing microarchitecture chip, the TU106. As a reminder, the currently announced members of the RTX series are based on TU102 (RTX 2080 Ti) and TU104 (RTX 2080, RTX 2070). Based on NVIDIA's history, it is logical to expect a smaller die for upcoming RTX cards, and we may well see an RTX 2060 using the TU106 chip.

This addition to HWiNFO is to be taken with a grain of salt, however, as its developers have been wrong before. Even recently, they added support for what was speculated at the time to be the NVIDIA Volta microarchitecture, which we now know as Turing. This has not stopped others from speculating further: 3DCenter.org gives its best estimates of how TU106 may fare in terms of die size, shader and TMU count, and more. Given that TSMC's 7 nm node will likely be preoccupied with Apple iPhone production through the end of this year, NVIDIA may well be using the same 12 nm FinFET process that TU102 and TU104 are manufactured on. The mainstream GPU segment is NVIDIA's bread and butter for gross revenue, so it is possible we may see an announcement, and perhaps even retail availability, towards the end of Q4 2018 to target holiday shoppers.

Source:

HWiNFO Changelog

56 Comments on NVIDIA TU106 Chip Support Added to HWiNFO, Could Power GeForce RTX 2060

Turing has the largest SM change since Kepler. The throughput is theoretically double: >50% in compute workloads and probably somewhat less in gaming.

Hype sells.

And picking out edge cases like you have there doesn't help. When hot-clock shaders came in, they also brought hot chips, which you neglected to mention, and their own drama too.

People don't guess too wide of the mark here imho.

Anyhow, slides will be out on Friday and reviews next Monday. We will see how things fare.

Games and RT-wise, we will probably have to wait a couple of months until the first games with DXR support are out.

Nope. It is a balancing act though. RTX cards are priced into the enthusiast range and beyond, and this is the target market that is traditionally willing to pay more for state-of-the-art hardware. Whether Nvidia's gamble will pay off in this generation remains to be seen.

40% is optimistic. No doubt Nvidia chose the best performers to put onto their slides. Generational speed increases have been around 25% for a while, and it is likely to be around that this time as well, across a large number of titles at least.

DLSS is a real unknown here. In theory, it is free-ish AA (due to being run on Tensor cores), which would be awesome considering how problematic AA is in contemporary renderers. Whether it will pan out as well as current marketing blabber makes it out to be remains to be seen, but the potential is there.