Thursday, September 6th 2018

NVIDIA TU106 Chip Support Added to HWiNFO, Could Power GeForce RTX 2060

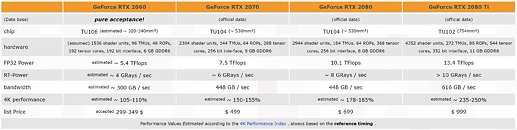

We are all still waiting to see how NVIDIA's RTX 2000 series of GPUs will fare in independent reviews, but that has not stopped the rumor mill from extrapolating. There have been alleged leaks of the RTX 2080 Ti's performance, and now we see HWiNFO add support for an unannounced NVIDIA Turing microarchitecture chip, the TU106. As a reminder, the currently announced members of the RTX series are based on TU102 (RTX 2080 Ti) and TU104 (RTX 2080, RTX 2070). Based on NVIDIA's history, it is logical to expect a smaller die for upcoming RTX cards, and we may well see an RTX 2060 using the TU106 chip.

This addition to HWiNFO is to be taken with a grain of salt, however, as they have been wrong before. Just recently, they added support for a chip that was, at the time, speculated to be based on NVIDIA's Volta microarchitecture, which we now know as Turing. This has not stopped others from speculating further, however: 3DCenter.org has given its best estimates of how TU106 may fare in terms of die size, shader and TMU count, and more. Given that TSMC's 7 nm node will likely be preoccupied with Apple iPhone production through the end of this year, NVIDIA may well use the same 12 nm FinFET process that TU102 and TU104 are manufactured on. This mainstream GPU segment is NVIDIA's bread and butter for gross revenue, so we may see an announcement, possibly even with retail availability, toward the end of Q4 2018 to target holiday shoppers.

Source:

HWiNFO Changelog

56 Comments on NVIDIA TU106 Chip Support Added to HWiNFO, Could Power GeForce RTX 2060

TU104 would not have existed if this generation had been built on 7 nm, you see.

That is, in case the 2070 is TU106. On 7 nm, TU106 would be TU206 with a 192-bit bus, and TU102 would become TU204 with a 256-bit bus and twice the core count.

TU104 only exists to counter a possible 7 nm Vega 64 with 1.2 GHz HBM2.

Given this is NVIDIA, though, it would not surprise me if they do this even if the chip doesn't have the hardware, running RT on the regular cores and delivering terrible RT performance as a result. That would be "3.5GB" all over again.

I'm really hoping AMD adds DXR (and related) support for Vega in a driver release soon, maybe the big end-of-year one. It will be interesting to see how that performs and whether it supports more than one card, e.g. rasterising on one card and RT overlay effects on the second, or something similar.

It would be quite funny if the next driver had it and was released in the next week or so, just before the launch on the 20th.

It's a chicken-and-egg problem: you need wide hardware support to get games to use it, even if that means most cards will have limited or no real use for it.

Useful or not, the raytracing support in the Turing GPUs is not going to hurt you. Even if you never use the raytracing, Turing is still by far the most efficient and highest-performing GPU design. The only real disadvantages are the larger dies and a very marginal amount of wasted power.

Full-scene real-time raytracing at a high detail level is probably several GPU generations away, but that doesn't mean it won't have some usefulness before then. We've seen people render the "Cornell box" with real-time voxelised raytracing in CUDA; not as advanced as what Nvidia did with RTX, of course, but still enough to get realistic soft shadows and reflections. With RTX, some games should be able to do good soft shadows and reflections in full scenes while retaining good performance.

I don't get why Nvidia's tech demos focus so much on sharp reflections. Very few things in the real world have sharp reflections, and even my car with newly applied "HD Wax" from AutoGlym doesn't achieve the glossiness of the car in the Battlefield V video. I do understand that shiny things sell, but I wish they focused more on realistic use cases.

How could that happen? The R9 Fury doesn't have hardware-accelerated raytracing; it only has general compute.

Plus, you should know that's physically impossible, or did the die become 4x larger? What? The dies are the same, just cut down from Volta (with some scam cores sprinkled in)? Well, you don't say.

Four V100s would be able to run the game at 4K... not 1080p at 40 fps LOLOLOLOL

What did you think RT cores are?

It is 256-bit at least, so the 2060 is a cut-down version of the 2070, similar to the GTX 760/770.

GP106 (GTX 1060) uses a 192-bit bus, and features 3/6 GB of memory.

A 192-bit memory bus actually means three 64-bit memory controllers, each of which is actually a dual 32-bit controller. Chips can in fact be designed with any number of 32-bit memory controllers.
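To make the bus-width arithmetic concrete, here is a minimal Python sketch. It assumes, as described, that each 64-bit controller is two 32-bit channels; the capacity helper additionally assumes one memory chip per 32-bit channel. The function names and per-chip sizes are hypothetical illustrations, not taken from any NVIDIA specification.

```python
# Minimal sketch of memory bus-width arithmetic.
# Assumption: each 64-bit memory controller is a pair of 32-bit channels.
CHANNEL_WIDTH_BITS = 32
CONTROLLER_WIDTH_BITS = 2 * CHANNEL_WIDTH_BITS  # dual 32-bit = 64-bit


def bus_width(num_controllers: int) -> int:
    """Total bus width in bits for a given number of 64-bit controllers."""
    return num_controllers * CONTROLLER_WIDTH_BITS


def channels(width_bits: int) -> int:
    """Number of 32-bit channels in a bus; width must be a multiple of 32."""
    if width_bits % CHANNEL_WIDTH_BITS != 0:
        raise ValueError("bus width must be a multiple of 32 bits")
    return width_bits // CHANNEL_WIDTH_BITS


def capacity_gb(width_bits: int, gb_per_chip: float) -> float:
    """Hypothetical helper: capacity assuming one chip per 32-bit channel."""
    return channels(width_bits) * gb_per_chip


print(bus_width(3))   # three 64-bit controllers -> 192
print(channels(192))  # 192-bit bus -> 6 channels of 32 bits
print(capacity_gb(192, 0.5), capacity_gb(192, 1.0))  # 3.0 6.0
```

Under these assumptions, six 32-bit channels with 0.5 GB or 1 GB chips would give the 3 GB and 6 GB configurations mentioned for the 192-bit GP106.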

Still, the xx60 cards are only the entry product for gaming; if you want more, you've got to pay more.

Vega was a flop, primarily because it fell further behind Nvidia in performance and efficiency, but also because AMD failed to make a good profit on it. Most people have long forgotten that AMD actually tried to sell it at $100 over MSRP, but changed course after a lot of bad press.

Now that more money is going into R&D (it looks like over 50% more than in 2014), that should help move things along.

Realistically, looking at the numbers, we have historically been very accurate at predicting performance, simply because the low-hanging fruit in CUDA really is gone now. We won't see tremendous IPC jumps, and if we do, they will cost something else that also provides performance (such as clock speeds). It's really not rocket science, and the fact is, if you can't predict Turing performance with some accuracy, you just don't know enough about GPUs.

So let's leave it at that, okay? This has nothing to do with triggered immature kids; in fact, they are the group spouting massive performance gains based on keynote shouts and PowerPoint slides, the idiots who believe everything Nvidia feeds them. Pascal card owners have nothing to be unhappy about: with Turing's release, the resale value of their cards will stay flat even as the cards get older. That is a unique event, and one that will lead to a lot of profitable used Pascal card sales. I'm not complaining!

If you don't like it, don't visit these threads or tech forums in general...