Wednesday, October 17th 2018

NVIDIA Readies TU104-based GeForce RTX 2070 Ti

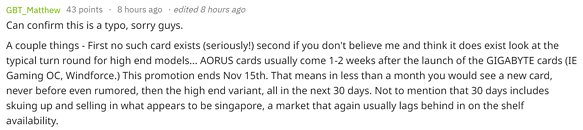

Update: GIGABYTE has since come out and dismissed this as a typo on its part. That is disappointing, if not unexpected, considering there is no real reason for NVIDIA to launch a new SKU into a market virtually devoid of competition. More tiers would simply give consumers more options, and why offer one that might drive customers away from more expensive cards?

NVIDIA built the $500 GeForce RTX 2070 on the TU106, its third-largest silicon based on the "Turing" architecture. Reviews posted late Tuesday found that the RTX 2070 offers roughly the same performance as the previous-generation GTX 1080, at the same price. Generation-to-generation, the RTX 2070 offers roughly 30% more performance than the GTX 1070, but at a 30% higher price. That is in stark contrast to the GTX 1070, which offered 65% more performance than the GTX 970 at just a 25% higher price. NVIDIA's RTX varnish, the feature set meant to justify the premium, is still nowhere in sight.

That said, NVIDIA is not on solid ground with the RTX 2070, and there's a vast price gap between it and the $800 RTX 2080. GIGABYTE all but confirmed the existence of an SKU in between. Called the GeForce RTX 2070 Ti, this SKU will likely be carved out of the TU104 silicon, since NVIDIA has already maxed out the TU106 with the RTX 2070. The TU104 features 48 streaming multiprocessors (SMs), compared to 36 on the TU106. NVIDIA could carve out the RTX 2070 Ti by cutting the TU104 down to an SM count somewhere between those of the RTX 2070 and the RTX 2080, while leaving the memory subsystem untouched. With that, it could come up with an SKU that's sufficiently faster than the previous-generation GTX 1070 Ti and GTX 1080, and rake in sales around the $600-650 mark, or roughly half the price of the RTX 2080 Ti.
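A quick way to see why the two generational jumps feel so different is to compare performance per dollar. The sketch below uses only the rounded percentages quoted in this article (not measured benchmark data or exact MSRPs) and treats each as a simple ratio; the function name `perf_per_dollar_change` is illustrative only.

```python
# Performance-per-dollar comparison using only the rounded percentages quoted
# in the article. Gains are fractional increases (0.30 means "30% more").

def perf_per_dollar_change(perf_gain: float, price_gain: float) -> float:
    """Return the fractional change in performance per dollar.

    A card that is (1 + perf_gain) times as fast and costs (1 + price_gain)
    times as much delivers (1 + perf_gain) / (1 + price_gain) times the
    performance per dollar; subtracting 1 turns that into a percentage change.
    """
    return (1 + perf_gain) / (1 + price_gain) - 1


# RTX 2070 vs GTX 1070: ~30% faster at a ~30% higher price (article figures).
rtx2070_vs_gtx1070 = perf_per_dollar_change(0.30, 0.30)

# GTX 1070 vs GTX 970: ~65% faster at a ~25% higher price (article figures).
gtx1070_vs_gtx970 = perf_per_dollar_change(0.65, 0.25)

print(f"RTX 2070 vs GTX 1070: {rtx2070_vs_gtx1070:+.0%} performance per dollar")
print(f"GTX 1070 vs GTX 970:  {gtx1070_vs_gtx970:+.0%} performance per dollar")
```

Run as-is, this prints +0% for the RTX 2070 and +32% for the GTX 1070: on the article's own numbers, Turing's x70 card delivers no improvement in value over its predecessor, while Pascal's delivered roughly a third more.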

Source: Reddit


29 Comments on NVIDIA Readies TU104-based GeForce RTX 2070 Ti

The price gap also isn't that big between the $500-600 RTX 2070 and the $750-900 RTX 2080. This basically leaves a $700 slot. Just bizarre stuff.

Nvidia, what about the sub-$400 market, instead of shoving another overpriced Turing into this stack?

The only thing scary this Halloween is going to be RTX prices.

What are they thinking? I hope RTG gets their act together and crushes Nvidia's windpipe, because ever since the launch of Turing, pricing has been a ****show. Come to think of it, a lot has been on a downward spiral since the last mining craze.

It's that time again when I'm just not impressed by what has happened in the tech world over the past 1.5 years.

I think they are trying to prove us right: since they don't have competition, they're just gonna price the cards up the bum, and that's fair.

It consumes the same amount of power and is barely faster than a two-year-old GTX 1080, while the TU106 core will probably be close to useless for RTX, judging by the demos we have seen so far. So apart from DLSS, which is basically ML-assisted upscaling, what incentive would one have to go for an RTX 2070 instead of a GTX 1080 right now?

Did they intentionally make the 2070 slower than the 1080 Ti, but pricier, so that the 2070 Ti will be justified?

And for what? There are no games out there that support the fancy ray tracing...

I share others' sentiments: if AMD can release a GPU with 2080 Ti performance for $600-$700, minus the currently useless ray tracing, I would buy my first Radeon card since the HD 5870.

Not a 06 chip

Yeah, on 7 nm the nonexistent 1000 mm² chip with 6144 CUDA cores gets shrunk to 300 mm², and then to 250 mm² on 7N+, and that is the 70/80 104-class chip. So everything we see today is pretty much obsolete. I'm not buying until the 40 series, it seems. Not unless I see a 2060 (half a 2080, no RT) at $200.

Has everyone got too much free time, so that they can spend it getting upset about speculation by our editor in chief (who sourced exactly nothing)?