Friday, March 22nd 2019

Several Gen11 GPU Variants Referenced in Latest Intel Drivers

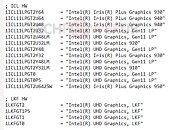

The latest version of Intel's graphics drivers, which introduces the company's new UWP-based Graphics Command Center app, hides another secret in its INF file. The file has pointers to dozens of variants and implementations of the company's next-generation Gen11 integrated graphics architecture, which we detailed in a recent article. Intel will implement Gen11 on two key processor microarchitectures, "Ice Lake" and "Lakefield," although later down the line, the graphics technology could also trickle down to the low-power Pentium Silver and Celeron SoC lines, with chips based on the "Elkhart Lake" silicon.

There are 13 variants of Gen11 on "Ice Lake," differentiated by execution unit (EU) count and by aggressive LP (low-power) power management. The mainstream desktop processors based on "Ice Lake," which are the least constrained by power management, get the most powerful variants of Gen11 under the Iris Plus brand. Iris Plus Graphics 950 is the most powerful implementation, with all 64 EUs enabled and the highest GPU clock speeds. This variant could feature on Core i7 and Core i9 parts derived from "Ice Lake." Next up is the Iris Plus Graphics 940, with the same EU count but likely lower clock speeds, which could feature across the vast lineup of Core i5 SKUs. The Iris Plus 930 comes in two trims, with 64 and 48 EUs, and could be spread across the Core i3 lineup. Lastly, there's the Iris Plus 920 with 32 EUs, which could be found in Pentium Gold SKUs. There are also various SKUs branded "UHD Graphics Gen11 LP," with EU counts ranging from 32 to 64.

Intel's "Lakefield" heterogeneous SoC comes with four models of Gen11 graphics, based on two key variants of Gen11, GT1 and GT2, which are physically different in size. The GT1 variant of this iGPU is smaller than GT2, and likely has 32 EUs going by past GT1 configurations. All four models are labelled "UHD Graphics LKF," with no further model numbering. Future releases of Intel drivers could reference "Elkhart Lake," the upcoming low-power SoC that incorporates the Gen11 graphics architecture.

27 Comments on Several Gen11 GPU Variants Referenced in Latest Intel Drivers

AMD has nothing to worry about against a puny 48 EUs.

But the top Gen11 variant will match the 2400G APU or the GT 1030, which was most likely the target.

But sure, for general purpose a basic igpu will suffice.

But now Intel has very good reasons to boost performance as well. Many apps started to utilize GPGPU. Sometimes it's for GPU-friendly computations, sometimes just for rendering the UI (like Firefox does).

GPU boost could potentially give AMD APUs an advantage - despite the CPU part being weaker.

Y series:

GT2Y64 - Iris Plus 930 (64EU?)

GT2Y48 - UHD Gen 11 LP (48EU)

GT2Y48LM - UHD Gen 11 LP

GT2Y32 - Iris Plus 930 (32EU)

GT2Y32LM - UHD 910 (clearly LM means something, since it's enough to change the designation)

U series:

GT2U64 - Iris Plus 940

GT2U6425W - Iris Plus 950 (25W version, I think. Gets a different billing!)

GT2U48 - Iris Plus 940

GT2U48LM - UHD Gen11 LP (again, LM has to mean something)

GT2U32 - UHD Gen11 LP

GT2U32LM - UHD 920

?? F series?:

GT0 - UHD Gen11 LP (traditionally, GT0 has been "no graphics," afaik, but that no longer seems to be the case)

GT0P5 - UHD Gen11 LP (what is P5 for?)

Lakefield:

GT2 - UHD LKF

GT1P5 - UHD LKF

GT1 - UHD LKF

GT0 - UHD LKF (in the past, I think GT0 would have meant no GPU, but it means something else now [afaik])

As for the LM deal, the last time Intel used "LM" for CPUs was in the 1st gen mobile Core i series chips. Back then, it meant the chip was one step below the full-power "M" series, but one step above the "UM" series. That being said, it probably has no relation to the LM name here, though at a casual glance, the LM designation seems to imply a weaker version (or at least a lower name, for most of the "LM" designated GPUs).

There is more clear segmentation this time around than previous Intel IGPs (not that there was enough power in them to create lower end versions that weren't horrible). GT2 now seems to encompass (potentially) a wide range of available EUs.

It also seems the Y series may get a full GT2 (unlike the current and previous gens), but it still has a lower name, indicating lower clocks.

The most interesting SKU to me is the GT2U6425W, since it implies the higher performance at a TDP-Up of 25W (and gets a different name, too). Of course, this will depend on Intel's willingness to enforce better cooling schemes.
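The codenames listed above seem to follow a regular pattern, so here is a rough Python sketch that splits them into fields. The pattern is only inferred from the names quoted in this thread, not from any Intel documentation: it assumes the two digits after the Y/U letter are the EU count and that a trailing "25W" is a TDP figure. The `Gen11Variant` type and `parse_codename` helper are hypothetical names made up for illustration, and the "P5" and "LM" suffixes are kept as opaque flags since nobody here knows what they mean.

```python
import re
from typing import NamedTuple, Optional

# Pattern inferred from the codenames quoted in this thread (an assumption,
# not an official Intel naming scheme).
CODENAME = re.compile(
    r"^GT(?P<tier>\d)"          # GT0, GT1, GT2
    r"(?P<p_suffix>P\d)?"       # e.g. "P5" (meaning unknown, kept as-is)
    r"(?:(?P<series>[YU])"      # Y or U series
    r"(?P<eus>\d{2})"           # assumed to be the EU count
    r"(?P<tdp>\d+W)?"           # e.g. "25W", assumed to be a TDP figure
    r"(?P<lm>LM)?)?$"           # "LM" flag (meaning unknown)
)


class Gen11Variant(NamedTuple):
    tier: int
    p_suffix: Optional[str]
    series: Optional[str]
    eus: Optional[int]
    tdp: Optional[str]
    lm: bool


def parse_codename(name: str) -> Gen11Variant:
    """Split a codename such as 'GT2U6425W' into its apparent fields."""
    m = CODENAME.match(name)
    if m is None:
        raise ValueError(f"unrecognized codename: {name!r}")
    eus = m.group("eus")
    return Gen11Variant(
        tier=int(m.group("tier")),
        p_suffix=m.group("p_suffix"),
        series=m.group("series"),
        eus=int(eus) if eus is not None else None,
        tdp=m.group("tdp"),
        lm=m.group("lm") is not None,
    )


if __name__ == "__main__":
    for code in ["GT2U6425W", "GT2Y48LM", "GT0P5", "GT1"]:
        print(code, parse_codename(code))
```

Read this way, GT2U6425W comes out as a GT2, U-series part with 64 EUs and a 25W tag, which matches the guess above that it is a higher-TDP version of the GT2U64.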

Intel's recent market share by month = 9.3 / 10.5 / 10.6 / 10.6 / 10.15

AMD's recent market share by month = 13.9 / 15.2 / 15.1 / 15.3 / 14.69

Nvidia's recent market share by month = 75.6 / 74.1 / 74.2 / 74.0 / 75.1

While by no means indicative of a long-term trend, two things are evident since the recent price drops:

AMD's market share gains against Nvidia over the three previous months have now begun to slide since the recent price adjustments. Expect AMD to adjust pricing; will Nvidia follow suit?

Intel's fortunes have also reversed. Will we continue to see more folks opt for discrete cards in upcoming months?

While this is just something that **may** turn into somewhat of a trend, it does offer some hope that card prices will continue to drop.
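For anyone who wants to check the month-over-month swings in the share figures quoted above, a quick Python sketch follows. It only uses the numbers from this post; the "74,2" in the Nvidia row is assumed to be a typo for 74.2, and the `monthly_deltas` helper is a made-up name for illustration.

```python
# Market share figures (percent) as quoted in the post above.
# Assumption: "74,2" in the Nvidia row is a typo for 74.2.
shares = {
    "Intel": [9.3, 10.5, 10.6, 10.6, 10.15],
    "AMD": [13.9, 15.2, 15.1, 15.3, 14.69],
    "Nvidia": [75.6, 74.1, 74.2, 74.0, 75.1],
}


def monthly_deltas(series):
    """Change in percentage points from each month to the next."""
    return [round(b - a, 2) for a, b in zip(series, series[1:])]


deltas = {vendor: monthly_deltas(values) for vendor, values in shares.items()}

if __name__ == "__main__":
    for vendor, changes in deltas.items():
        print(f"{vendor:7s} {changes}")
```

The last entry in each list is the most recent month: AMD and Intel both drop (by 0.61 and 0.45 points) while Nvidia picks up 1.1 points, which is the reversal described above.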

Guess which die in the picture is the CPU and which is the GPU. It is an i7 6785R CPU.

And as you can see, Intel released it in 2016.

Oh, and could you give me the source of your information on the setup, i.e. which part is where on the dies?

I always assumed the smaller die is the CPU and the bigger die is the GPU with the 128MB of eDRAM.

PS With "killed" and "death" I mean that companies don't sell the huge numbers they used to sell, not that they don't sell at all.

That is why I want source material. But it is quite hard to find any useful documentation with Google. It was hard enough to get this CPU (i7 6785R).

Here are some Fire Strike benchmarks with different APUs / CPUs with integrated graphics. But some CPUs have issues. The A12-9800 throttles down to 1.4 GHz when heavy GPU load is applied. The 6785R throttles to 2.5 / 2.6 GHz under heavy CPU load. (I have to find the right flag to set.)

So be careful with the results.

www.3dmark.com/compare/fs/18529321/fs/18141498/fs/15053139/fs/18026073

I think the i7 6785R can do better with higher-clocked DDR4 memory. The "computer" I have with it only runs DDR3-1600. But I am not even sure if the board can handle faster DDR3 RAM (SO-DIMM). And I was not able to find any board with the 6785R and DDR4.
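To put a rough number on the memory point above, here is a small Python sketch of the standard theoretical peak bandwidth formula (transfer rate × bus width × channels). The dual-channel, 64-bit-per-channel configuration is an assumption about this particular board, and DDR4-2400 is just a hypothetical comparison point, not a speed this system is known to support. The `peak_bandwidth_gbs` helper is a made-up name.

```python
def peak_bandwidth_gbs(transfer_rate_mts: int,
                       channels: int = 2,
                       bus_width_bits: int = 64) -> float:
    """Theoretical peak memory bandwidth in GB/s (1 GB = 10**9 bytes).

    transfer_rate_mts: transfers per second in millions (e.g. 1600 for DDR3-1600)
    channels:          number of memory channels (assumed dual-channel here)
    bus_width_bits:    width of one channel in bits (64 for standard DIMMs)
    """
    bytes_per_transfer = bus_width_bits // 8
    return transfer_rate_mts * 1_000_000 * bytes_per_transfer * channels / 1e9


ddr3_1600 = peak_bandwidth_gbs(1600)  # the DDR3-1600 setup described above
ddr4_2400 = peak_bandwidth_gbs(2400)  # hypothetical DDR4-2400 comparison

if __name__ == "__main__":
    print(f"DDR3-1600 dual channel: {ddr3_1600:.1f} GB/s")
    print(f"DDR4-2400 dual channel: {ddr4_2400:.1f} GB/s")
```

These are theoretical ceilings shared between CPU and IGP, so real-world gains from faster memory would be smaller than the raw ratio suggests.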

Either way, not a big deal. Intel doesn't need to make the fastest IGP around. They haven't for a long time and it didn't harm them.

They only need their IGP to stay relevant. Not in context of AMD, but in context of next gen systems.

Intel has to provide a solution that will power single 6/8K screens or multiple 4K screens.

And this has to be a frugal chip that can be put into ultrabooks as well.

Improved gaming performance will be just a welcome side-effect.

What I always find funny is how GPU market shares are compared. You mostly see discrete GPUs only. But when you take all GPUs into account (integrated as well), Intel is far ahead of Nvidia and AMD. The most recent figure I could find was 66% for Intel as of Q1 2018.

www.ultragamerz.com/new-gpu-market-share-2018-q2-nvidia-down-amd-down-and-intel-gpu-shipments-were-up/

Q3 2018

www.statista.com/statistics/754557/worldwide-gpu-shipments-market-share-by-vendor/

Those millions that play Fortnite don't need a thousand-dollar 2080 Ti; a $150 APU is enough. The reason why this hasn't happened yet is the years of atrociously crappy GPUs Intel stuffed inside their chips. Most people aren't even aware of the fact that video cards are no longer an absolute necessity, even when it comes to light gaming.

Slowly but surely these systems will get integrated over time.

I believe the only party "unaware" here is you... or maybe it's just your hatred towards Intel. ;-)

Millions that play Fortnite and countless other games are already fine using their Intel IGP. It forces some compromises: low settings or resolution. But it's certainly feasible. And when you play on a 14" notebook, there's hardly any difference between 1080p and 720p.

If we wanted to point to a competing product that could have pushed Intel into boosting performance now, it is not the Ryzen APU, but the sudden popularity of MX chips in laptops.

Almost every mid/high-end ultrabook is available with an MX130 or MX150. This has to be one of the more popular product lines they have.

I have no idea how much Nvidia makes from each chip. $20 maybe?

This is a clear sign for Intel: if they made a more powerful SoC or a separate GPU chip, these extra $20 would have gone to them, not their main competitor.

I really assumed the smaller die was just a die shrink of the Haswell die with small upgrades.

Do you by any chance know of a GT3 or GT4 without eDRAM? I only know of the 128MB and 64MB ones.

The reason why it hasn't happened yet is that GPUs are really complex chips. Manufacturing had to really evolve to make them fit on a CPU socket and at the same time offer enough performance to make them useful. And of course system RAM had to become much faster to offer enough bandwidth. Even if Intel were at Nvidia's level in GPU performance, we wouldn't be much better off than we are today with AMD's APUs. In my opinion, an Intel APU with Nvidia's Turing architecture for the GPU part would probably offer 30-50% more performance than a 2400G.