Tuesday, April 30th 2019

Backblaze Releases Hard Drive Stats for Q1 2019

As of March 31, 2019, Backblaze had 106,238 spinning hard drives in our cloud storage ecosystem spread across three data centers. Of that number, there were 1,913 boot drives and 104,325 data drives. This review looks at the Q1 2019 and lifetime hard drive failure rates of the data drive models currently in operation in our data centers and provides a handful of insights and observations along the way.

Hard Drive Failure Stats for Q1 2019

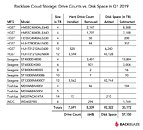

At the end of Q1 2019, Backblaze was using 104,325 hard drives to store data. For our evaluation, we remove from consideration those drives that were used for testing purposes and those drive models for which we did not have at least 45 drives (see why below). This leaves us with 104,130 hard drives. The table below covers what happened in Q1 2019.

Notes and Observations

If a drive model has a failure rate of 0%, it means there were no drive failures of that model during Q1 2019. The two drive models with zero failures in Q1 were the 4 TB and 5 TB Toshiba models. Neither has enough drive days to be statistically significant, but in the case of the 5 TB model, you have to go back to Q2 2016 to find the last drive failure we had of that model.
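A rough way to see why zero failures over a small number of drive days isn't conclusive: treat failures as a Poisson process with a constant rate per drive-year (an assumption for illustration, not necessarily how Backblaze evaluates significance) and compute the largest AFR still consistent with seeing no failures. The function name and the "45 drives for a 90-day quarter" exposure below are hypothetical.

```python
import math


def zero_failure_afr_upper_bound(drive_days: float, confidence: float = 0.95) -> float:
    """Upper confidence bound on AFR (in %) after observing zero failures.

    Assumes failures follow a Poisson process with a constant rate per
    drive-year. With zero failures, P(no failures) = exp(-rate * drive_years);
    setting that equal to 1 - confidence and solving for rate gives the bound.
    """
    if drive_days <= 0:
        raise ValueError("drive_days must be positive")
    drive_years = drive_days / 365
    rate_per_drive_year = -math.log(1 - confidence) / drive_years
    return rate_per_drive_year * 100


# Hypothetical exposure: 45 drives running for a full 90-day quarter.
print(round(zero_failure_afr_upper_bound(45 * 90), 1))  # 27.0
```

In other words, a quarter with no failures on that much exposure is still consistent (at 95% confidence) with an AFR as high as about 27%, far above the fleet-wide 1.56%. The bound shrinks in proportion to the drive days observed.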

There were 195 drives (104,325 minus 104,130) that were not included in the list above because they were used as testing drives or we did not have at least 45 of a given drive model. We use 45 drives of the same model as the minimum number when we report quarterly, yearly, and lifetime drive statistics. The 45-drive minimum is historical: it was the number of drives in our original Storage Pods. Beginning next quarter, that threshold will change; we'll get to that shortly.
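The exclusion rule described above can be sketched as a two-step filter. The `Drive` record and its `is_test` flag are hypothetical stand-ins for however the drive inventory is actually stored, and counting only non-test drives toward the 45-drive minimum is our assumption.

```python
from collections import Counter
from dataclasses import dataclass

MIN_DRIVES_PER_MODEL = 45  # historical threshold: drives in an original Storage Pod


@dataclass
class Drive:
    serial: str
    model: str
    is_test: bool = False  # hypothetical flag for drives used for testing


def qualifying_drives(drives, min_count=MIN_DRIVES_PER_MODEL):
    """Drop test drives, then drop every model with fewer than min_count drives."""
    production = [d for d in drives if not d.is_test]
    per_model = Counter(d.model for d in production)
    return [d for d in production if per_model[d.model] >= min_count]


# Hypothetical fleet: 50 MODEL_A drives, 10 MODEL_B drives, 3 MODEL_A test drives.
fleet = (
    [Drive(f"A{i}", "MODEL_A") for i in range(50)]
    + [Drive(f"B{i}", "MODEL_B") for i in range(10)]
    + [Drive(f"T{i}", "MODEL_A", is_test=True) for i in range(3)]
)
kept = qualifying_drives(fleet)
print(len(kept), len(fleet) - len(kept))  # 50 13
```

The second number plays the same role as the 195 excluded drives (104,325 minus 104,130) in the real fleet.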

The Annualized Failure Rate (AFR) for Q1 is 1.56%. That's as high as the quarterly rate has been since Q4 2017, and it's part of an overall upward trend we've seen in the quarterly failure rates over the last few quarters. Let's take a closer look.
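As we understand Backblaze's methodology, AFR is based on drive days rather than drive counts, since drives enter and leave service during a quarter. A minimal sketch of that calculation, as failures divided by drive-years (drive days / 365) expressed as a percentage, with made-up inputs:

```python
def annualized_failure_rate(failures: int, drive_days: float) -> float:
    """AFR in percent: failures per drive-year of operation, times 100."""
    if drive_days <= 0:
        raise ValueError("drive_days must be positive")
    drive_years = drive_days / 365
    return failures / drive_years * 100


# Hypothetical inputs: 10 failures over 365,000 drive days (1,000 drive-years).
print(annualized_failure_rate(10, 365_000))  # 1.0
```

Measuring exposure in drive days means a drive that was only in service for part of the quarter contributes only that part to the denominator.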

Quarterly Trends

We noted in previous reports that the quarterly reports are useful for spotting trends in a particular drive model or even a manufacturer. Still, you need enough data (drive count and drive days) in each observed period (quarter) for any analysis to be valid. To that end, the chart below uses quarterly data from Seagate and HGST drives, leaving out Toshiba and WDC drives because we haven't had enough drives from those manufacturers over the last three years.

Over the last three years, the annualized failure rates for both Seagate and HGST have improved, i.e., gone down. While Seagate has reduced its failure rate by over 50% during that time, the upward trend over the last three quarters warrants a closer look. We'll investigate and let you know in a future post if we find anything interesting.
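Pooling per-model quarterly numbers by manufacturer, and skipping quarters with too little exposure, might look like this sketch. The row layout, the sample numbers, and the `min_drive_days` cutoff are all hypothetical; the post doesn't say what minimum it applied when building the chart.

```python
from collections import defaultdict


def quarterly_afr_by_maker(rows, min_drive_days):
    """Compute AFR (%) per manufacturer per quarter.

    rows: iterable of (quarter, manufacturer, drive_days, failures) tuples,
    e.g. one row per drive model per quarter (hypothetical layout).
    Quarters where a manufacturer has fewer than min_drive_days are skipped,
    since there is too little data for the rate to mean much.
    """
    totals = defaultdict(lambda: [0.0, 0])  # (maker, quarter) -> [drive_days, failures]
    for quarter, maker, drive_days, failures in rows:
        cell = totals[(maker, quarter)]
        cell[0] += drive_days
        cell[1] += failures

    result = defaultdict(dict)
    for (maker, quarter), (days, fails) in sorted(totals.items()):
        if days < min_drive_days:
            continue  # not enough exposure in this period
        result[maker][quarter] = fails / (days / 365) * 100
    return dict(result)


# Hypothetical rows; the Toshiba row falls below the cutoff and is dropped.
rows = [
    ("2019Q1", "Seagate", 365_000, 10),
    ("2019Q1", "HGST", 182_500, 2),
    ("2019Q1", "Toshiba", 1_000, 0),
]
print(quarterly_afr_by_maker(rows, min_drive_days=5_000))
```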

Changing the Qualification Threshold

As reported over the last several quarters, we've been migrating from lower-density 2, 3, and 4 TB drives to larger 10, 12, and 14 TB hard drives. At the same time, we have been replacing our stand-alone 45-drive Storage Pods with 60-drive Storage Pods arranged into the Backblaze Vault configuration of 20 Storage Pods per vault. In Q1, the last stand-alone 45-drive Storage Pod was retired, so using 45 drives as the qualification threshold for our quarterly report seems antiquated. This is a good time to switch to Drive Days as the qualification criterion. After reviewing our data, we have decided to use 5,000 Drive Days as the threshold going forward. There is one exception: drive models we currently report on, such as the Toshiba 5 TB model with about 4,000 drive days each quarter, will continue to be included in our Hard Drive Stats reports.
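The new rule and its exception can be expressed as a simple predicate. The `grandfathered` set and the `TOSHIBA_5TB` label are hypothetical, and we assume the Toshiba 5 TB model has about 45 drives in service: 45 drives over a ~90-day quarter gives roughly 4,050 drive days, just under the new 5,000 drive-day threshold.

```python
NEW_THRESHOLD_DRIVE_DAYS = 5_000  # qualification threshold going forward


def qualifies(model: str, drive_days: float, grandfathered: frozenset = frozenset()) -> bool:
    """A model qualifies with at least 5,000 drive days in the period,
    or if it was already being reported before the change (grandfathered)."""
    return drive_days >= NEW_THRESHOLD_DRIVE_DAYS or model in grandfathered


# Assumption: about 45 drives of the Toshiba 5 TB model, each running a ~90-day quarter.
toshiba_5tb_days = 45 * 90
print(toshiba_5tb_days)  # 4050
print(qualifies("TOSHIBA_5TB", toshiba_5tb_days))  # False
print(qualifies("TOSHIBA_5TB", toshiba_5tb_days, frozenset({"TOSHIBA_5TB"})))  # True
```

Without the exception, a model with a steady 45-drive population would drop out of the report under the new rule.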

Fewer Drives = More Data

Those of you who follow our quarterly reports might have noticed that the total number of hard drives in service decreased by 648 in Q1 compared to Q4 2018, yet we added nearly 60 petabytes of storage. You can see what changed in the chart below.

Lifetime Hard Drive Stats

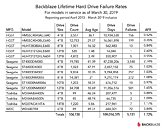

The table below shows the lifetime failure rates for the hard drive models we had in service as of March 31, 2019. This is over the period beginning in April 2013 and ending March 31, 2019.
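A lifetime rate is presumably computed from each model's cumulative drive days and failures rather than by averaging its quarterly rates. This sketch (hypothetical function and numbers) shows why the distinction matters when a model's drive count changes a lot over time:

```python
def lifetime_afr(periods):
    """Lifetime AFR (%) for one model from per-quarter (drive_days, failures) pairs.

    Pools drive days and failures over the whole period instead of averaging
    quarterly AFRs, so each quarter counts in proportion to its exposure.
    """
    periods = list(periods)  # allow any iterable, including generators
    total_days = sum(days for days, _ in periods)
    total_failures = sum(fails for _, fails in periods)
    if total_days <= 0:
        raise ValueError("need a positive number of drive days")
    return total_failures / (total_days / 365) * 100


# Hypothetical model: a small early quarter, then a much larger one.
quarters = [(3_650, 1), (36_500, 1)]  # 10 and 100 drive-years, one failure each
pooled = lifetime_afr(quarters)
naive = sum(f / (d / 365) * 100 for d, f in quarters) / len(quarters)
print(round(pooled, 2), round(naive, 2))  # 1.82 5.5
```

Averaging the two quarterly rates would let the tiny first quarter dominate; pooling weights it by its 10 drive-years against the second quarter's 100.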

Source: Backblaze

30 Comments on Backblaze Releases Hard Drive Stats for Q1 2019

If they used server HDDs, the data MIGHT be vaguely useful.

Seagate is the winner of the losers.

Toshiba MG07ACA14TA - 9-disk He-Sealed 7200RPM Enterprise HDD

HGST HUH721212ALE600/ALN604 - HC520 He-Sealed 7200RPM Enterprise HDD

Seagate ST12000NM0007 - Exos X12 He-Sealed 7200RPM Enterprise HDD

Seagate ST10000NM0086 - Exos X10 He-Sealed 7200RPM Enterprise HDD

I think you get the picture. The lower-capacity Seagate drives are desktop class and have been used continually by Backblaze for a few years. They offer a consumer baseline to compare against. The other vendors' drives are long-service NAS or surveillance drives, which some might argue should have a life span similar to that of an enterprise drive.

Funny that.

Ah, nvm. Their chart is from Q4 2018. Looks like they pulled them from service sometime last year, so they wouldn't have explained it again. Thought it was Q1 to Q1.

They certainly are at least trying this time around to address some longstanding concerns. Good. They still have some major statistical representation issues, but at least it's more useful than nothing now.

HMS5C4040ALE640 MegaScale DC

HMS5C4040BLE640 MegaScale DC

HUH728080ALE600 Ultrastar He8

HUH721212ALN604 Ultrastar DC HC520 (aka He12)

ST4000DM000 Desktop HDD

ST6000DX000 Desktop HDD

ST8000DM002 Desktop HDD

ST8000NM0055 Exos 7E8

ST10000NM0086 Exos X10

ST12000NM0007 Exos X12

MD04ABA400V Surveillance HDD

MD04ABA500V Surveillance HDD

MG07ACA14TA Enterprise HDD

WD60EFRX Red NAS

So, not really a fair comparison. HGST is all enterprise drives. Seagate and Toshiba are a mix. WD is for SOHO NAS (i.e., they're not Red Pro).

These stats just mean that I wouldn't be buying these or recommending them to my friends.

www.pugetsystems.com/labs/articles/Most-Reliable-PC-Hardware-of-2018-1322/#Storage(HardDrive)

That said, AWS isn't really a backup joint, primarily, so there's that.

But yes, I would imagine it is cost prohibitive still to run all high capacity SSDs. It takes almost no time for a tech to replace a platter/HDD and get the array rebuilt, versus the HUGE cost difference between comparable capacity platters/SSD.

The best source you have may be the Puget Systems article I referenced earlier.

I love WD drives myself. Too bad Samsung sold its HDD business; they were also awesome.

Some even have a small SSD for the OS to boot from (if they aren't PXE-booted with an image dumped onto them).

I know too many people who think that you can just use consumer drives in servers. Well, you can, but... they exist for a reason. Server hardware, I mean.

You can easily use consumer-grade hardware in servers. There isn't a terrible amount of difference in a lot of the hardware. Obviously there's enough for it to be 'worth it' to the enterprise, but, yeah, it's in data centers, and in a large part of them. :)