Monday, August 31st 2020

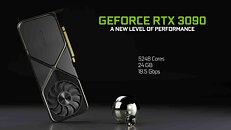

Performance Slide of RTX 3090 Ampere Leaks, 100% RTX Performance Gain Over Turing

NVIDIA's performance expectations for the upcoming GeForce RTX 3090 "Ampere" flagship graphics card point to a massive generation-over-generation gain in RTX performance. Measured at 4K UHD with DLSS enabled on both cards, the RTX 3090 is shown offering a 100% performance gain over the RTX 2080 Ti in "Minecraft RTX," a gain greater than 100% in "Control," and close to an 80% gain in "Wolfenstein: Youngblood." NVIDIA's GeForce "Ampere" architecture introduces second-generation RTX, according to leaked Gainward spec sheets. This could entail not just more ray-tracing hardware, but also higher IPC for the RT cores. The spec sheets also refer to third-generation tensor cores, which could enhance DLSS performance.

Source: yuten0x (Twitter)

131 Comments on Performance Slide of RTX 3090 Ampere Leaks, 100% RTX Performance Gain Over Turing

Think about how much those RT and Tensor cores add to the price of these Nvidia GPUs. Think about what they actually do for the gameplay experience. As a 2080 Ti owner I wish I could have just got a version of the card minus the ray-tracing hardware.

I really hope AMD implements RT functionality in a way that doesn't drive the cost of the card up and have useless hardware when RT is off.

Lastly, DLSS would mean so much more if it weren't in such a limited number of titles. DLSS needs to work in all games if consumers are going to pay for it.

I'm saying 10GB on an $800 graphics card is a joke. With that kind of money you should expect to use it for 3-4 years. 10GB won't be enough when both new consoles have 16GB, RDNA2 will have 16GB cards for the same price or likely cheaper, and Nvidia will nickel-and-dime everyone with a 20GB model 3080 next month. The only people buying a 10GB $800 graphics card are pretty clueless ones.

Control is a marketing stunt. It's a nasty-looking TAA game with no proper reflections without RT, then they turn it on and go "look how great this looks". Compare it with Spider-Man on PS4 to see what an actual game should look like without using RT "features".

It's been sad gaming on PC since the 1080 Ti came out and showed us what an actual video card should be, but now NVIDIA is mostly not gaming-focused.

Fake.

However I'll wait for reviews first.

And, frankly, I don't get what "oddities" of jpeg compression are supposed to indicate.

This kind of "leak" does not need to modify an existing image, it's plain text and bars.

Try harder next time.

According to a Eurogamer interview, Unreal Engine 5 has figured out a good way to do fast and beautiful indirect lighting via a new "software" ray tracing technique. But this technique appears to be limited to indirect lighting; it may not handle reflections or hard shadows (or else Epic would have wanted to show that off too).

Dunno where this site got its DLSS 2.0

Even the site itself clearly states that they don't know, in the comments on the comparison video they uploaded.

This site is just a clickbait ffs.

With Microsoft Flight Sim remaining below 8GB of VRAM, I find it highly unlikely that any other video game in the next few years will come close to those requirements. 10+ GB of VRAM is useful in many scientific and/or niche (e.g. V-Ray) use cases, but I don't think it's a serious issue for any video gamer.

And you can already push past 8GB today. It's going to get much worse once the new consoles are out and they drop PS4/XB1 for multiplatform titles.