Monday, August 31st 2020

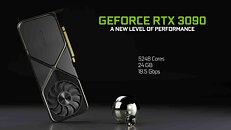

Performance Slide of RTX 3090 Ampere Leaks, 100% RTX Performance Gain Over Turing

NVIDIA's performance expectations for the upcoming GeForce RTX 3090 "Ampere" flagship graphics card point to a massive generation-over-generation RTX performance gain. Measured at 4K UHD with DLSS enabled on both cards, the RTX 3090 is shown offering a 100% performance gain over the RTX 2080 Ti in "Minecraft RTX," a gain of more than 100% in "Control," and close to 80% in "Wolfenstein: Youngblood." According to leaked Gainward spec sheets, NVIDIA's GeForce "Ampere" architecture introduces second-generation RTX. This could mean not just more ray-tracing hardware, but also higher IPC for the RT cores. The spec sheets also refer to third-generation tensor cores, which could improve DLSS performance.

Source:

yuten0x (Twitter)

131 Comments on Performance Slide of RTX 3090 Ampere Leaks, 100% RTX Performance Gain Over Turing

Also, this is running with DLSS on, and we have no idea whether Ampere has any DLSS performance gains over Turing either.

Also, that 2x will surely mean that some titles become playable (FPS-wise) with all the RTX features enabled... others, still not so much.

www.techpowerup.com/review/control-benchmark-test-performance-nvidia-rtx/4.html

Without DLSS, the 2080 Ti averages about 100 FPS at 1080p, and about 50 FPS at 1080p with RTX on.

So the RTX 3090 would get about 100 FPS at 1080p with RTX on (>2x, per this chart).

That means the RTX 3090 needs at least 200 FPS at regular 1080p, that is, without RTX. Of course, this assumes no RT efficiency improvement. Let's assume there is some major RT efficiency improvement, so that instead of RTX on running at 0.5x the non-RTX frame rate, it runs at about 0.7x. Then the RTX 3090 would be running 133 to 150 FPS without RTX, so a 30% to 50% performance uplift in non-RTX games. Also, we have 5248 versus 4352 CUDA cores, so the CUDA core increase by itself should give at least 20% more performance in non-RTX games.

That is just some quick napkin math. I am leaning more towards a 30% to 35% performance increase, but there is a good chance that I am wrong.
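The napkin math above can be reproduced in a few lines. This is a minimal Python sketch assuming, as the comment does, that the RTX 3090 reaches about 100 FPS at 1080p with RTX on, that the 2080 Ti does about 100 FPS without RTX, and that "0.5X" or "0.7X" means the fraction of the non-RTX frame rate kept once RTX is enabled. The helper names `raster_fps` and `uplift` are hypothetical, and real games will not scale this linearly.

```python
# Napkin-math sketch; every input is an assumption taken from the comment above.
RTX_ON_FPS_3090 = 100.0    # ~2x the 2080 Ti's ~50 FPS at 1080p with RTX on
RASTER_FPS_2080TI = 100.0  # 2080 Ti at 1080p without RTX (linked review)


def raster_fps(rtx_on_fps: float, retained: float) -> float:
    """Non-RTX frame rate implied by an RTX-on frame rate, where `retained`
    is the fraction of the non-RTX frame rate kept once RTX is enabled."""
    return rtx_on_fps / retained


def uplift(new_fps: float, old_fps: float) -> float:
    """Relative gain of new_fps over old_fps (0.3 means +30%)."""
    return new_fps / old_fps - 1.0


# 0.5 = no RT efficiency gain; 2/3 to 0.75 brackets the "0.7X" case.
for retained in (0.5, 2 / 3, 0.7, 0.75):
    fps = raster_fps(RTX_ON_FPS_3090, retained)
    gain = uplift(fps, RASTER_FPS_2080TI)
    print(f"retained {retained:.2f}: ~{fps:.0f} FPS without RTX ({gain:+.0%})")

# Hypothetical check of the CUDA core argument (Ampere vs. Turing core counts).
cores = uplift(5248, 4352)
print(f"CUDA core increase: {cores:+.1%}")
```

With no RT efficiency gain the sketch lands at about 200 FPS; the 0.75 and 2/3 cases give roughly 133 and 150 FPS (a 33% to 50% uplift), and the core counts alone work out to about a 20.6% increase.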

OK... and is that a reliable source, and not just some random person on the internet?

And there are other examples there too.

So it could be fake.

EDIT: Look at every intersecting bar. It isn't fake; it's due to the low resolution.

It's rumours, so there's that, but it wasn't a 4x smaller performance hit; I don't recall ever seeing that said.

Pitchfork at the ready :p

So I could totally believe that, WITH RTX ON, performance will double between the flagship cards: they improve the tensor cores and tweak the implementation so that RT doesn't take nearly as much of a performance hit, add the rumored 60% performance uplift on top, and it's very possible to hit those numbers.
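The reasoning above combines two gains. This minimal Python sketch assumes the rumored 60% raster uplift and the RT-side improvement simply multiply; under that assumption it shows how much extra the RT path would need to contribute for the total to reach 2x. The name `required_rt_factor` is hypothetical, and the 60% figure is only a rumour.

```python
# Hypothetical split of a 2x RTX-on gain into two multiplicative factors.
TARGET_RTX_ON_GAIN = 2.0  # "double between the flagship cards" with RTX on
RASTER_UPLIFT = 1.6       # the rumored 60% uplift (unconfirmed)


def required_rt_factor(target: float, raster: float) -> float:
    """Extra speedup the RT path must supply on top of the raster uplift,
    assuming the two gains simply multiply."""
    return target / raster


rt_factor = required_rt_factor(TARGET_RTX_ON_GAIN, RASTER_UPLIFT)
print(f"RT-side improvement needed: {rt_factor:.2f}x")
```

Under this model, a 1.6x raster uplift only needs about a 1.25x RT-side improvement (for example, a smaller RTX-on hit) to reach 2x overall.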

Let's wait and see, but I think that 50% of that performance gain comes from purely rasterized performance, so an RT performance gain of only ~50% sounds a bit disappointing, at least if you care a lot about RT and plan on buying a 3090. I'm neither, so I'm keeping calm for now.