Thursday, December 3rd 2020

AMD Radeon RX 6800 XT Tested on Z490 Platform With Resizable BAR (AMD's SAM) Enabled

AMD's recently introduced SAM (Smart Access Memory) feature lets users who pair an RX 6000 series graphics card with a Ryzen 5000 series CPU take advantage of a long-lost PCIe feature: Resizable BAR. However, AMD currently only markets this technology for that particular component combination, even though the underlying technology isn't AMD's own but is part of the PCIe specification. It's only a matter of time until NVIDIA enables the feature for its graphics cards, and there shouldn't be any technical obstacle to enabling it on Intel's platform as well. Now we have results (from ASCII.jp) from an Intel Z490 motherboard (ASUS ROG Maximus XII EXTREME) running firmware 1002, dated November 27th, paired with AMD's RX 6800 XT. And SAM does indeed work regardless of the platform.

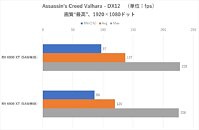

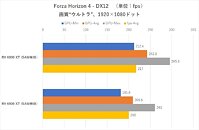

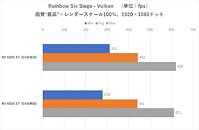

Paired with an Intel Core i9-10900K, AMD's RX 6800 XT shows performance increases across the board in the test games, which are games AMD itself has confirmed SAM works with: Assassin's Creed Valhalla, Forza Horizon 4, Red Dead Redemption 2, and Rainbow Six Siege. The results speak for themselves (SAM results are the top ones in each chart). There are sometimes massive improvements in minimum framerates, considerable gains in average framerates, and almost no change in the maximum framerates reported for these games on this system. Do note that the chart for Forza Horizon 4 has an error: the tested resolution is actually 1440p, not 1080p.

Source:

ASCII.jp


59 Comments on AMD Radeon RX 6800 XT Tested on Z490 Platform With Resizable BAR (AMD's SAM) Enabled

Even if the improvement were only in minimum frame rates, that's what matters most, as you feel it more than the average and high FPS.

Hopefully this will "encourage" AMD to unlock the feature on relevant motherboards, regardless of CPU installed.

All I can find on PCIe 4.0 is the same quote, repeated by pretty much every source: "The increased power limit instead refers to the total power draw of expansion cards. PCIe 3.0 cards were limited to a total power draw of 300 watts (75 watts from the motherboard slot, and 225 watts from external PCIe power cables). The PCIe 4.0 specification will raise this limit above 300 watts, allowing expansion cards to draw more than 225 watts from external cables."

I can't find any real evidence of whether PCIe 4.0 allows you to pull more than 75 watts from the slot or not. Could anyone please prove me wrong, and show that people aren't just renaming things in order to avoid being sued?

Probably the test was only performed once per graph, which is a big "no-no" when benchmarking... Edit: yeah, I definitely should have read the source; they performed the test 3 times. That last sentence is incorrect, but I stand behind everything else I have written.

"did you see my keys anywhere?"

This works by allowing the CPU to utilize the full amount of memory on the GPU, rather than just having access to a limited part of it. As such, more data can be shuffled between the two, which leads to increased performance.
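The difference can be illustrated with a deliberately simplified model, which is an assumption and not how any real driver works: the CPU sees VRAM through a fixed-size window (typically 256 MB without Resizable BAR) that has to be re-pointed whenever an access falls outside it. The helper `window_mappings` and the access offsets are hypothetical.

```python
LEGACY_BAR_MB = 256       # typical CPU-visible window without Resizable BAR
VRAM_MB = 16 * 1024       # the RX 6800 XT carries 16 GB of VRAM

def window_mappings(offsets_mb, window_mb):
    """Hypothetical model: count how many times a CPU-visible window of
    `window_mb` must be (re)mapped to reach each VRAM offset in order."""
    base = None
    mappings = 0
    for off in offsets_mb:
        if base is None or not (base <= off < base + window_mb):
            # Align the window so that it contains the requested offset.
            base = (off // window_mb) * window_mb
            mappings += 1
    return mappings

accesses = [0, 300, 5000, 100, 16000]            # made-up offsets in MB
print(window_mappings(accesses, LEGACY_BAR_MB))  # 5
print(window_mappings(accesses, VRAM_MB))        # 1
```

With the window covering all 16 GB, a single mapping reaches every offset, which is the intuition behind "full access" to GPU memory.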

It's minimum FPS we're talking about here, so say there's some kind of bug in the game that gets worked around by enabling a wider "memory interface" between the CPU and GPU. Why wouldn't we see a huge jump in minimum FPS?

Obviously I'm just speculating here, but you didn't even do that; you just said it's impossible or that the tester made a mistake. The latter is highly unlikely, as ASCII.jp doesn't make blunders like that; the journalists working there are not some n00bs.

Also, the average FPS is only up around 9 FPS, which seems to be in line with the other games tested. As such, I still believe we're seeing some game-related issues that got a workaround by enabling the Resizable BAR option.

Also, I don't know what this has to do with anything, as the test that was performed was on PCIe 3.0 and that's what we're discussing here, if I'm not mistaken?

1) SAM and the PCIe 3.0 or 4.0 spec have piss all to do with what you are suggesting.

2) SAM does NOT increase the power the GPU can use.

3) SAM DOES allow the CPU to push more data to the GPU at once instead of being limited by the prior frame buffer "window"

Imagine the GPU needed 2 GB of data from system memory. Previously it had to be sent in, let's say, 32 MB chunks, and then the GPU had to manage moving it all around once it was there and mapped into the GPU memory address space. While the GPU waits for texture data that didn't arrive in the first load, it stalls, causing lag, lower frame rates, and stutters.
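The arithmetic in that example can be written out in a few lines. The 32 MB chunk size is the commenter's hypothetical, 256 MB is the typical legacy window, and 16 GB is the card's full VRAM; `transfers_needed` is a made-up helper that only counts window-sized batches and ignores latency and per-transfer overhead.

```python
import math

def transfers_needed(total_mb, chunk_mb):
    """Number of chunk-sized batches needed to move total_mb of data."""
    return math.ceil(total_mb / chunk_mb)

total = 2 * 1024  # the comment's 2 GB example, in MB
for chunk in (32, 256, 16 * 1024):
    print(chunk, "MB chunks:", transfers_needed(total, chunk))
# 64, 8 and 1 batches respectively
```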

The PCIe spec allows larger data chunks to be pushed, but no one implemented it. Maybe it was overlooked, maybe drivers for GPUs and other devices would have faulted if it were enabled, or maybe the timing choice was made to ease CPU load and prevent buffer overflows.

Modern GPUs and CPUs can handle DMA transfers, and with increased core counts maybe we have finally reached the point where larger transfers, or GPUs directly accessing system memory with the driver on the CPU managing it, provide a net performance gain rather than a loss.

Maybe having the driver check whether it's faster in each case meant more code for every scenario out there, which made it more difficult and could lead to unpredictable results.

Also, you don't just publish min FPS results for the obvious outlier, as this will raise questions (anyone who has ever done benchmarking will be skeptical of the results...). You publish frametime graphs, which show in detail what changed when Resizable BAR got turned on. Isn't it obvious? It doesn't seem to be for them...
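Why a single outlier skews minimum FPS can be shown with a small sketch over made-up frametime data. `fps_stats` is a hypothetical helper, and its "1% low" is one common definition (the average of the slowest 1% of frames); benchmarking tools define it differently, for example as the 99th-percentile frametime.

```python
def fps_stats(frametimes_ms):
    """Return (average FPS, minimum FPS, 1% low FPS) from per-frame times in ms.
    Here "1% low" is the average of the slowest 1% of frames."""
    n = len(frametimes_ms)
    avg_fps = 1000.0 * n / sum(frametimes_ms)
    min_fps = 1000.0 / max(frametimes_ms)           # set entirely by the worst frame
    worst = sorted(frametimes_ms, reverse=True)[: max(1, n // 100)]
    low_1pct_fps = 1000.0 / (sum(worst) / len(worst))
    return avg_fps, min_fps, low_1pct_fps

# 999 smooth frames at 10 ms plus a single 50 ms hitch (made-up data)
frames = [10.0] * 999 + [50.0]
avg, mn, low = fps_stats(frames)
print(f"avg {avg:.1f}  min {mn:.1f}  1% low {low:.1f}")
# avg 99.6  min 20.0  1% low 71.4
```

One hitch drags min FPS from 100 to 20 while the average barely moves, which is why a frametime graph says more than a single minimum figure.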

This isn't complicated.