Monday, December 14th 2020

Intel Core i9-11900K "Rocket Lake" Boosts Up To 5.30 GHz, Say Rumored Specs

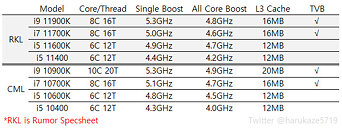

Intel's upcoming 11th Generation Core i9-11900K processor boosts up to 5.30 GHz, according to rumored specs of various 11th Gen Core "Rocket Lake-S" desktop processors, sourced by Harukaze5719. According to this spec sheet, both the Core i9-11900K and the Core i7-11700K (the i7-10700K's successor) are 8-core/16-thread parts, and clock speeds appear to be the only product segmentation between the two. The i9-11900K has a maximum single-core boost frequency of 5.30 GHz and a 4.80 GHz all-core boost. The i7-11700K, on the other hand, has a 4.60 GHz all-core boost and a 5.00 GHz single-core boost. This time around, even the Core i7 part gets Thermal Velocity Boost (TVB).

The 11th Gen Core i5 continues to be 6-core/12-thread, with Intel allegedly readying an unlocked Core i5-11600K and a locked i5-11400. Both parts lack TVB. The i5-11600K ticks up to 4.90 GHz single-core and 4.70 GHz all-core, while the i5-11400 does 4.40 GHz single-core and 4.20 GHz all-core. The secret sauce of "Rocket Lake-S" is the new "Cypress Cove" CPU core, which Intel claims offers a double-digit percentage IPC gain over the current-gen "Comet Lake." The platform also brings an improved dual-channel DDR4 memory controller with native support for DDR4-3200, a PCI-Express Gen 4 root complex, and a Gen12 Xe-LP iGPU. The "Cypress Cove" cores also feature VNNI and DLBoost, which accelerate AI deep neural network (DNN) workloads, as well as limited AVX-512 instructions. The 11th Gen Core processors will also introduce a CPU-attached M.2 NVMe slot, similar to AMD Ryzen. Intel is expected to launch its first "Rocket Lake-S" processors before Q2 2021.

Sources:

harukaze5719 (Twitter), VideoCardz

52 Comments on Intel Core i9-11900K "Rocket Lake" Boosts Up To 5.30 GHz, Say Rumored Specs

Yeah, if it hits its boost for a couple of seconds, it's not false advertising lol

Trouble is always how long it stays at boost

The 10900K is pretty disappointing at default settings; it throttles badly even on a short test like R20. It hits its boost, just not for very long lol :roll:

I mean, you need look no further than these two reviews: the 10320 (4.6 GHz, 4C/8T) and the 3300X (4C/8T), noting that the 3300X is more like a Zen 3 4C/8T since it does not suffer the chiplet cache context-switching penalty common to higher-core-count Zen 2. Even in AAA titles, these 4-core chips in aggregate are only a percent or two behind their 6-core peers. And I would bet that if they had higher clocks like the higher-SKU chips (the 10320 below caps at 4.6 GHz vs. 5.1 and 5.3 GHz for the 10700K and 10900K), they could well be faster than the higher-core-count chips in many situations, as the extra cores also result in more frequent context switching and heavier memory bus usage, which is bad for gaming.

To wit, the 10400 is sold out at my local MicroCenter, and their most popular in-stock chips right now are the 9700K, 9900K, and 10700K.

Lots in Houston, all except the 10900K & KFC

As for 5% faster, I think it depends on what metrics you are looking at. If you are looking purely at gaming, then yeah, I agree the improvement is not great compared to Intel's Comet Lake. However, you do need to know that the Intel chip is running at a higher boost speed. So being 5% faster despite a clock-speed disadvantage shows that the IPC gain is actually larger. In any case, I am looking forward to seeing what Rocket Lake will bring to the table, though I am also expecting incredibly high power draw and heat output from the high-end models.

Just exactly how many plus signs (10, 15, 20 ?) can we look forward to at the end of this pitiful pathetic semi-simulated uptick of yet anutha 14nm cpu ???????

Nobody GAF about the process node. The only way most people notice it is through power consumption... and most don't GAF about that either. ;)

And what's going to be "plenty" for a long time is irrelevant. Nobody's buying enthusiast-level performance CPUs for "good enough" performance, let alone for their garbage throwaway GPUs, and this launch (if true) is a complete embarrassment from Intel and their useless bean-counter CEO. We could argue about whether the iGPU would be useful or not if Intel were at least somewhat serious about driver support -- which has been basically non-existent since forever. AMD and Nvidia provide dozens of driver releases and around 5-10 years of support for their GPUs, compared to Intel, who at best release one or two updates per year for around 2 years (with the exception of their re-hashed iGPUs that stayed the same for multiple generations, since they have barely changed anything in them for around half a decade now besides some minor tweaks). I honestly think it's time for this dinosaur company to go out of business and wouldn't miss them one bit if they did -- they have been a joke for over half a decade now and are refusing to compete on either price or performance in any meaningful way, despite being massively behind their direct competitor, whilst outside competitors are already knocking on their door and about to blow them out of the water (and I'm not even talking about AMD -- Apple Silicon and RISC-V competitors at a fraction of their size are going to mop the floor with Intel in the next year or two in both efficiency and performance). If they do not get their act together and go through a complete management restructure, I'm 100% certain we will be looking at another has-been dinosaur patent troll (a.k.a. IBM 2.0).

And please stop giving any more awful suggestions to either AMD or Intel about handicapping their mainstream platforms to 8 cores. Not everyone who needs more than 8 CPU cores needs the extra PCI-E lanes, nor has the space for a HEDT socket (which is too large for those of us looking for a small but future-proof ITX build, myself included). The fact that AMD's 12 and 16 core parts have been selling out consistently on the mainstream platform only proves you wrong.

Even in a perfect environment, two cores at half speed would not catch up with a single core at full speed.

The more cores you divide a workload between, the more overhead you'll get. There will always be diminishing returns with multithreaded scaling.

What kind of reasoning is this?

And your phone probably has 6-8+ cores as well. Performance matters, not cores.

Just a kind reminder, Rocket Lake (and AM4) are mainstream platforms. ;)

Nonsense. There were 8-core prototypes of Cannon Lake (the cancelled shrink of Skylake), and planned and cancelled 6-core variants of Kaby Lake, long before Zen. 14nm might be mature now, but it took a very long time to get there.

A well-known architecture is likely to have fewer problems than an untested one. The more time passes, the greater the chance of finding a large problem.

And don't pretend like most of these vulnerabilities didn't affect basically every modern CPU microarchitecture in one way or another.

So people should buy CPUs they don't need, just in case?

The reality is, unless you're a serious content creator, 3D modeller, developer or someone else who runs a workload that significantly benefits from more than 8 cores, you're better off with the fastest 6- or 8-core you can find, for the most responsive and smooth user experience. Not to mention the money saved can be put into other things, including a better GPU, future upgrades, etc.

The factual inaccuracies here are too severe to even cover in this discussion thread.

You are just biased against Intel, and don't even care about the facts.

We need more competition, not less.

You don't even have a faint idea about what these things are.

It's not about handicapping, it's about driving up costs with unnecessary features when there is already a workstation/enthusiast platform to cover this. And BTW, HEDT motherboards in the mini-ITX form factor do exist, not that it makes any sense, but anyway.

And how many of those buying these 12 and 16 core models are doing it just to brag about benchmark scores? Probably a good portion.

Gaming performance is about the same... ST performance is slightly faster, and MT performance, with SMT, is notably faster. It's not a reach at all to think they'll take the IPC and single-threaded performance crown. Where I do believe they will have trouble is with MT and improving the efficiency of HT vs. SMT. But clocks and IPC can overcome that difference. I fully believe RL will be as performant as, or more performant than, the Zen 3/5000 series. The biggest issues will be price and power use, IMO.

As I said above, nobody gives a hoot about the process node... half of PC users can't even spell it, let alone know what relevance it has to their CPU. I also believe fewer people care about power use than is commonly assumed. In the enthusiast realm, I feel it's simply a talking point to hold over your competitor more than something with real-world consequences.

Just be sure to bitch about those as much as others bitch about "super" and "Ti" cards coming out in response... Goose/Gander....I find that hilarious (not that you do it... but plenty here had no issues with their precious' incremental response but did with others)... :p

And at this point, I think Zhaoxin and VIA are more likely than Intel to provide better competition to AMD.

Couldn't think of a more worthless reply. You sure got me there, professor...

There are no unnecessary features. You suggesting that people wanting more than 8 cores jump to HEDT platforms is beyond stupid. ITX-sized HEDT motherboards practically don't exist outside of the handful made by ASRock, and they are both impossible to get and cost a fortune due to the extra VRMs/power components alone needed to handle higher-end CPUs. You're so clueless that you're directly contradicting your own point, since people would be paying for extra CPU PCIe lanes that they would never use. And what people's uses are, whether for benchmarking or not, is none of your business.