Monday, August 7th 2017

Intel "Coffee Lake" Platform Detailed - 24 PCIe Lanes from the Chipset

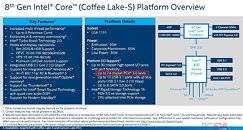

Intel seems to be addressing key platform limitations with its 8th generation Core "Coffee Lake" mainstream desktop platform. The first Core i7 and Core i5 "Coffee Lake" processors will launch later this year, alongside motherboards based on the Intel Z370 Express chipset. Leaked company slides detailing this chipset reveal that the chipset itself puts out 24 PCI-Express gen 3.0 lanes, not counting the 16 lanes the processor puts out for up to two PEG (PCI-Express Graphics) slots.

The PCI-Express lane budget of the "Coffee Lake" platform is a huge step up from the 8-12 general-purpose lanes put out by previous-generation Intel chipsets, and will enable motherboard designers to cram their products with multiple M.2 and U.2 storage options, besides bandwidth-heavy onboard devices such as additional USB 3.1 and Thunderbolt controllers. The chipset itself integrates a multitude of bandwidth-hungry connectivity options. It integrates a 10-port USB 3.1 controller, of which six ports run at 10 Gbps and four at 5 Gbps. Other onboard controllers include a SATA AHCI/RAID controller with six SATA 6 Gbps ports. The platform also introduces a PCIe storage option (either an M.2 slot or a U.2 port) that is wired directly to the processor. This draws inspiration from AMD's AM4 platform, in which an M.2/U.2 option is wired directly to the SoC, besides two SATA 6 Gbps ports. The chipset also integrates a WLAN interface with 802.11ac and Bluetooth 5.0, though we think only the controller logic is integrated, and not the PHY itself (which needs to be isolated for signal integrity).

Intel is also making the biggest change to onboard audio standards since the 15-year-old Azalia (HD Audio) specification. The new Intel SmartSound Technology sees the integration of a "quad-core" DSP directly into the chipset, with a reduced-function CODEC sitting elsewhere on the motherboard, probably wired using I2S instead of PCIe (as in the case of Azalia). This could still very much be a software-accelerated technology, where the CPU does the heavy lifting with DA/AD conversion.

According to leaked roadmap slides, Intel will launch its first 8th generation Core "Coffee Lake" processors along with motherboards based on the Z370 chipset within Q3-2017. Mainstream and value variants of this chipset will launch only in 2018.

Sources:

VideoCardz, PCEVA Forums

119 Comments on Intel "Coffee Lake" Platform Detailed - 24 PCIe Lanes from the Chipset

More lanes are nice to have, though, and Threadripper is really the only game in town at that level/price.

Most manufacturers make it pretty clear where the PCI-E lanes are coming from, or it is pretty easy to figure it out. The 16 lanes from the CPU are supposed to only be used for graphics. The first PCI-E x16 slot is almost always connected to the CPU. If there is a second PCI-E x16 slot, then almost always the first slot will become an x8 and the second will be an x8 as well, because they are sharing the 16 lanes from the CPU. The specs of the motherboard will tell you this. You'll see something in the specs like "single at x16 ; dual at x8 / x8". Some even say "single at x16 ; dual at x8 / x8 ; triple at x8 / x4 / x4". In that case, all 3 PCI-E x16 slots are actually connected to the CPU, but when multiple are used, they run at x8 or x4 speed.
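To make the spec-sheet notation concrete, here is a minimal sketch that parses a string in the format quoted above and checks each mode against the 16 CPU lanes. The function name `parse_slot_modes` is made up for illustration, and real spec sheets vary in wording, so treat the parsing as an assumption rather than a general-purpose tool.

```python
CPU_LANES = 16  # lanes the CPU provides for graphics slots, per the article


def parse_slot_modes(spec):
    """Turn 'single at x16 ; dual at x8 / x8' into {'single': [16], 'dual': [8, 8]}."""
    modes = {}
    for part in spec.split(";"):
        part = part.strip()
        if not part:
            continue
        name, widths = part.split(" at ")
        # Each width looks like 'x8'; drop the 'x' and keep the lane count.
        modes[name.strip()] = [int(w.strip().lstrip("x")) for w in widths.split("/")]
    return modes


spec = "single at x16 ; dual at x8 / x8 ; triple at x8 / x4 / x4"
modes = parse_slot_modes(spec)

for name, widths in modes.items():
    total = sum(widths)
    print(f"{name}: {widths} uses {total} lanes, fits CPU budget: {total <= CPU_LANES}")
```

Every mode in that string adds up to exactly 16 lanes, which is the tell-tale sign that all of those slots hang off the CPU rather than the chipset.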

Any other slot that doesn't share bandwidth like this is pretty much guaranteed to be using the chipset lanes and not the ones directly connected to the CPU.

I remember back in the days when the CPU had to handle data transfers, it was so slow. Does anyone else remember the days when burning a CD would max out your CPU, and if you tried to open anything else on the computer, the burn would fail? That was because DMA wasn't a thing (and buffer underrun protection wasn't a thing yet either).

Threadripper isn't a perfect solution either. In fact, it introduces a new set of issues. The fact that Threadripper is really an MCM, and the PCI-E lanes coming from the CPU are actually split up like they are on two different CPUs, leads to issues. If a device connected to one CPU wants to talk to another, it has to be done over the Infinity Fabric, which introduces latency. And it really isn't that much better than going over Intel's DMI link from the PCH to the CPU. It also had issues with RAID, due to the drives essentially being connected to two different storage controllers, but I think AMD has finally worked that one out.
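For a rough sense of what "going over the DMI link" means for the 24 chipset lanes, here is a back-of-the-envelope sketch. It assumes the Z370 uplink is DMI 3.0, which is commonly described as roughly equivalent to a PCIe 3.0 x4 link; the leaked slides summarized in the article do not state this, so the uplink width is an assumption. The figures are raw one-direction link rates after 128b/130b encoding and ignore protocol overhead.

```python
# PCIe 3.0 signals at 8 GT/s per lane and uses 128b/130b encoding.
PCIE3_GT_PER_S = 8.0
ENCODING_EFFICIENCY = 128 / 130


def link_gbytes_per_s(lanes):
    """Approximate one-direction bandwidth of a PCIe 3.0 link in GB/s."""
    return lanes * PCIE3_GT_PER_S * ENCODING_EFFICIENCY / 8


CHIPSET_LANES = 24        # downstream lanes, per the leaked slides
DMI_EQUIVALENT_LANES = 4  # assumption: DMI 3.0 behaves like PCIe 3.0 x4

downstream = link_gbytes_per_s(CHIPSET_LANES)
uplink = link_gbytes_per_s(DMI_EQUIVALENT_LANES)
oversubscription = downstream / uplink

print(f"chipset lanes combined: {downstream:.2f} GB/s")
print(f"uplink to the CPU:      {uplink:.2f} GB/s")
print(f"oversubscription:       {oversubscription:.0f}:1")
```

Under those assumptions the chipset lanes could collectively ask for about six times what the uplink can carry, which only matters when several chipset-attached devices are busy at the same time.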

I appreciate your help on clarifying this as I'd really like to know. I can pick a processor and specific motherboard if it helps you to give me an answer that helps me go in the right direction. I use my PC for gaming, work and as an HTPC. I have 150TB of storage and often transfer it to my other PC which has 100TB of storage, so I hammer my NIC and RAID controller about once per week, usually about 4TB of transfer with updates and changes.

Getting a clean PCIe answer has been as challenging for me as finding out when I can actually use my frequent flyer miles.

Some of the ASRock boards have an Aquantia 5 or 10GbE as well as a 1GbE (or two) on them.

Once the NIC saturates it doesn't matter how fast the drives on either end are or what lanes they are connected to.
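The point about NIC saturation can be sketched as a simple "slowest link wins" calculation, using the roughly 4TB weekly transfer and the 1/5/10GbE options mentioned above. The array speeds (1.5 and 3.0 GB/s) are made-up placeholders, not measurements of anyone's hardware, and the NIC figures are line rate with no protocol overhead.

```python
def transfer_seconds(size_bytes, nic_gbps, src_bytes_per_s, dst_bytes_per_s):
    """Time to copy size_bytes when the slowest of NIC, source and destination limits the rate."""
    nic_bytes_per_s = nic_gbps * 1e9 / 8  # Gbit/s to bytes/s
    rate = min(nic_bytes_per_s, src_bytes_per_s, dst_bytes_per_s)
    return size_bytes / rate


SIZE = 4e12  # about 4 TB (decimal), as in the weekly transfer described above

for nic_gbps in (1, 5, 10):
    for array_speed in (1.5e9, 3.0e9):  # hypothetical array throughput in bytes/s
        secs = transfer_seconds(SIZE, nic_gbps, array_speed, array_speed)
        print(f"{nic_gbps:>2} GbE, arrays at {array_speed / 1e9:.1f} GB/s: {secs / 3600:.2f} h")
```

With these placeholder numbers, doubling the array speed changes nothing at any of the three NIC speeds, because even 10GbE tops out at 1.25 GB/s.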

What CPU and board are you looking at?

I'm definitely going with the

NVidia 1180 Video card (probably asus strix)

X710-T4 network card

9460-16i raid controller

I'm also using a butt load of usb devices and the onboard nvme if it matters.

I will occasionally use wifi just for testing, not frequently. In my new machine I'll have a quad-port NIC that I'll dual-port trunk to two machines. In my old machines I have the old Intel dual-port 10G NICs (X540). I only use them for backups so I'll have 20gbit, which is more than enough as my RAID will max out well before that. It exceeds 10gbit but I don't know by how much as currently I'm only connected at 10g.

What NICs are you using?

On the Z370 AORUS Gaming 7, the top two PCI-E x16 slots run off the CPU, the bottom one runs off lanes from the chipset. So your GPU will get an x8 link, then whatever card you plug into the second slot will get an x8 link as well, and the card in the bottom slot will get an x4 link.
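As a rough check of what those link widths mean for a quad-port 10GbE card, the sketch below compares PCIe 3.0 link rates against network line rates. It assumes every slot runs at PCIe 3.0 speed and ignores PCIe and Ethernet protocol overhead, so real headroom is somewhat smaller; the slot labels simply mirror the layout described above.

```python
# Usable Gbit/s per PCIe 3.0 lane: 8 GT/s with 128b/130b encoding.
LANE_GBPS = 8.0 * 128 / 130


def link_gbps(lanes):
    """Approximate one-direction PCIe 3.0 link rate in Gbit/s."""
    return lanes * LANE_GBPS


# Link widths from the slot layout described above.
slots = {"top (CPU)": 8, "middle (CPU)": 8, "bottom (chipset)": 4}

# Network demand in Gbit/s at line rate.
demands = {"all four 10GbE ports": 40.0, "two-port 10GbE trunk": 20.0}

for slot, lanes in slots.items():
    for label, need in demands.items():
        fits = link_gbps(lanes) >= need
        print(f"{slot} x{lanes} ({link_gbps(lanes):.1f} Gbit/s) vs {label}: "
              f"{'ok' if fits else 'too narrow'}")
```

On these numbers an x4 link covers a 20 Gbit trunk but not four ports running flat out, while an x8 link covers both.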

At the end of the day, beyond 3D rendering and video editing few applications need tons of threads. I believe game engines only recently (past two years or so) have broken the 4 core barrier. While I can't speak for others, I know my quad core will easily get the job done for a few more years.

It also takes a CPU being around 10% faster to notice a significant difference, and smoothness (consistency) is also important; the "headline" benchmark charts often do not report "perceived gameplay". If you do things like file transfers in the background while gaming, extra cores should help. IMO if you get to the point of a 10% difference but are seeing 100+fps with consistent frame times, you'd be hard pressed to feel a difference outside competitive FPS gaming.

More cores is the future IMO.

If I was buying a machine now to last for up to 5 years with maybe only a graphics card swap or 3 I'd go for the better multi-threaded performance. Look what has happened with the 7700k, a year ago it was the gaming king, today I think it's "not so much" versus the more-cored CPUs. Then there are the platform considerations ...