Tuesday, October 30th 2018

AMD Radeon RX 590 Built on 12nm FinFET Process, Benchmarked in Final Fantasy XV

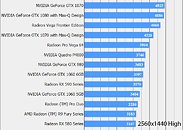

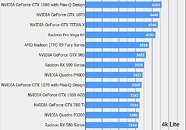

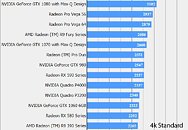

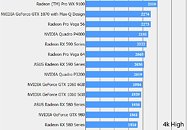

Thanks to some photographs by Andreas Schilling, of HardwareLuxx, it is now confirmed that AMD's Radeon RX 590 will make use of the 12 nm FinFET process. The change from 14 nm to 12 nm FinFET for the RX 590 brings with it the possibility of both higher clock speeds and better power efficiency. That said, considering it is based on the same Polaris architecture used in the Radeon RX 580 and 570, it remains to be seen how it will affect AMD's pricing across the product stack. Will there be a price drop to compensate, or will the RX 590 be more expensive? Since AMD has already made things confusing enough with its cut-down 2048SP version of the RX 580 in China, anything goes at this point.

Thanks to the Final Fantasy XV Benchmark being updated with results for the RX 590, we at least have an idea as to the performance on offer. In the 2560x1440 resolution tests, performance has improved by roughly 10% compared to the RX 580. The 3840x2160 resolution results show a performance improvement of roughly 10 to 15%. This uplift in performance allows the RX 590 to put some serious pressure on NVIDIA's GeForce GTX 1060 6 GB. Keep in mind that while the RX 580 was always close, AMD's latest Polaris offering eliminates the gap and is far more competitive in a title that has always favored its competition, at least when it comes to comparing reference cards.

When manual overclocking is taken into account, AMD's RX 580 can typically see a performance increase of around 5 to 10%. While this is speculation, in theory, it would put it on par with or very near the RX 590 in terms of performance. However, no overclock is guaranteed, so keeping that in mind, the move to the 12 nm FinFET process delivers a guaranteed performance boost with the possibility of further overclocking headroom. This should allow the RX 590 to further increase its performance advantage over the RX 580 and 570.
Only time will tell how things shake out, but at the very least users can expect the AMD Radeon RX 590 to deliver on average 10% more performance over the current crop of RX 580 graphics cards.
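To make the overclocking comparison concrete, here is a minimal Python sketch of the arithmetic. It assumes a hypothetical normalized score of 100 for a stock RX 580 (not a measured result) and applies the percentages quoted above; the function and variable names are illustrative only.

```python
def apply_uplift(baseline: float, percent: float) -> float:
    """Scale a baseline score by a percentage uplift."""
    return baseline * (100 + percent) / 100


# Hypothetical normalized score for a stock RX 580 (assumption, not benchmark data).
RX_580_STOCK = 100.0

# Roughly 10% uplift reported for the RX 590 at 2560x1440.
rx_590_stock = apply_uplift(RX_580_STOCK, 10)

# Typical manual overclock range quoted for the RX 580: about 5 to 10%.
rx_580_oc_low = apply_uplift(RX_580_STOCK, 5)
rx_580_oc_high = apply_uplift(RX_580_STOCK, 10)

# Remaining lead of a stock RX 590 over an overclocked RX 580.
lead_vs_weak_oc = rx_590_stock - rx_580_oc_low
lead_vs_strong_oc = rx_590_stock - rx_580_oc_high

print(f"Stock RX 590 lead over a 5% OC RX 580:  {lead_vs_weak_oc:.1f} points")
print(f"Stock RX 590 lead over a 10% OC RX 580: {lead_vs_strong_oc:.1f} points")
```

Under these assumptions a well-overclocked RX 580 can close the gap entirely, while a weaker overclock leaves the stock RX 590 a few points ahead, which is the range the paragraph above describes.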

Sources:

Twitter, FFXV Benchmark, via Videocardz

70 Comments on AMD Radeon RX 590 Built on 12nm FinFET Process, Benchmarked in Final Fantasy XV

An RX 570 4 GB is already the 1080p "darling", as we're finally seeing good deals now (I've seen them as low as $140). An RX 575 4 GB would be a great option, especially if they give the memory a bump to 2000 MHz (8000 MHz effective) and keep the MSRP at $170.

I think GloFo had to have this happen to streamline on one process (I might say they helped AMD not have a reason not to), while AMD can differentiate the product against the magnitude of mining cards being dumped now in the used market.

If this truly is a refined Polaris 30 on "12 nm", and not just cherry-picked golden samples of Polaris 20, then it means AMD have decided to extend the lifespan of Polaris very late in the 500-series lifecycle. This could be a strong indicator that AMD is preparing for either delays or supply issues on Navi. I would not be surprised if we hear something about a delay in ~5 months.

And yes, if this is Polaris 30 on "12 nm", there should be potential for a refresh of RX 570 too, but who knows if it will have a distinct name?

Yes. I believe there were plans for this in the roadmaps, but they never materialized. I don't know if they were using HBM or GDDR, but there have obviously been some problems with cost or production.

First off, there's no Navi delay necessary to provide a market opportunity for a refreshed Polaris - Navi isn't due out until H2 2019 (or is that Q3/Q4? Can't remember.), which means it's still likely a year away - and that's the initial launch, which might be a couple of models for all we know. It makes sense to have a somewhat updated portfolio to begin with. While Nvidia hasn't launched anything remotely affordable yet from Turing, it's coming at some point, and definitely within a year. Waiting it out would be a bit silly, seeing how a refresh on a slightly improved node like this is likely rather cheap (at least compared to making a brand-new cut-down Vega) and would still give some benefits. If they're on par with the 1060 now, why not make a minor investment and make sure they're in a slightly better position when the 2060 comes along (or, with Nvidia pricing, the 2050 Ti, more likely)? Sounds like a no-brainer. What doesn't make sense is the naming, not the refresh itself.

As for this confirming there's no mid-range Vega coming, it might only be my opinion, but that's been pretty clear ever since its launch. AMD has never spoken about any such product (except for the Vega Mobile that Apple just implemented), and looking at the architecture (which is amazing for compute, but struggles in gaming performance (particularly compared to die size and power draw - and die size becomes increasingly important as margins shrink outside of high-end products)), making a cut-down gaming Vega doesn't make much sense. HBM2 is too expensive for the mid-range, and while it'd be nice to implement some of Vega's architectural improvements there too, they're not that dramatic. Polaris does essentially the same job for cheaper. I'm pretty certain AMD saw that Vega had the most potential as a compute card early on, and from then on deliberately focused on that while maintaining Polaris until they could bring out something more suited to gaming - which is Navi.

I'd still rather buy an R9 Fury over all of these, it's cheaper and faster

Just check W1zzard’s latest review, the MSI RTX 2070 and look at those two cards currently. They have become firmly 1080p cards only in just 3 short years.

Even if the RX 590 costs $250, that's still a little bit more than a 1070/980 Ti off eBay, so your statement would be incorrect.

Coming from a Fury X, which is around 10-15% slower than a 1070, I can easily get 1080p ultra-high at around 80-100 fps in 90% of my games. I'd rather have quality/FPS over increased resolution, as would a majority of people here.

www.tweaktown.com/articles/8331/definitive-ethereum-mining-performance-article/index7.html

I don't think the majority here want to gamble on eBay, either.

I'm still very happy with my Fury X at 1440p though, for those of you claiming that it's a 1080p card now. Can't always max out the details, but who cares?

I too am very happy with my Fury X, it's still a wonderful GPU, and I'm glad I paid the $240 for it back in the mining craze.

When I got my 1080Ti and a 2560x1440 monitor 2 months ago, just for giggles I tried it out for one last run on the 980Ti. It wasn’t pretty. I had been lulled to sleep by the fact that the 980Ti handled almost everything maxed out at 60fps on the 1920x1080 monitor, although there were already games at the end it had to work really hard to not even make it to 60fps.

1440 was a joke. It made me glad I got the 1080Ti.

Still, I don't count either the RX 580 or any 590 that aligns with these rumors as a 1440p card. They're not that far off, but not quite there.

www.techpowerup.com/reviews/MSI/RX_580_Mech_2/31.html

The R9 290 was a massive die compared to other cards back then, and had 64 ROPs and a 512-bit bus...

Now, if the RX 590 had 64 ROPs instead of the 32 ROPs on the RX 480 and RX 580, and also doubled the RX 580's unified shaders from 2304 to 4608 and its texture units from 144 TMUs to 288+ TMUs on an RX 590 or 590X... or even something like 2-4x those 288 TMUs or higher!

So the render config of an RX 590 and RX 590X should be:

| Render config | RX 590 | RX 590X* |
|---|---|---|
| Shading units | 4608 | 6912 |
| TMUs | 288 | 432 |
| ROPs | 64 | 96 |
| Compute units | 72 | 108 |

(* would indicate an AMD Radeon RX 590X model, if one ever existed...)
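As a quick sanity check on the wished-for numbers above, the shader and TMU counts follow from the compute unit count on GCN-based parts, where each compute unit carries 64 stream processors and 4 texture units. The sketch below is illustrative only; `gcn_config` is a made-up helper name, and the 72 and 108 CU configurations are the commenter's hypothetical cards, not real products.

```python
# GCN layout: 64 stream processors and 4 texture units per compute unit.
SHADERS_PER_CU = 64
TMUS_PER_CU = 4


def gcn_config(compute_units: int) -> dict:
    """Derive shader and TMU counts from a GCN compute unit count.

    ROPs are not derived here because they are not tied to the CU count.
    """
    return {
        "compute_units": compute_units,
        "shaders": compute_units * SHADERS_PER_CU,
        "tmus": compute_units * TMUS_PER_CU,
    }


# 36 CUs is the real RX 580; 72 and 108 CUs are the hypothetical cards above.
for name, cus in [("RX 580", 36), ("RX 590 (wish)", 72), ("RX 590X (wish)", 108)]:
    cfg = gcn_config(cus)
    print(f"{name}: {cfg['shaders']} shaders, {cfg['tmus']} TMUs")
```

The hypothetical figures are at least internally consistent: 72 CUs gives 4608 shaders and 288 TMUs, and 108 CUs gives 6912 shaders and 432 TMUs.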

In my spec list, the RX 590X would resemble the R9 290X from several years ago:

R9 290X ( 512 Bit Ram Bus Width)

RX 590 ( 384 Bit Ram Bus Width)

RX 590X (512 Bit Ram Bus Width)

If an RX 590X had the specs I stated at 12 nm, it would be a decent card, maybe even a monster if done right!!!

The improvement was dismal and they were louder than the 290Xs were.

I felt ripped-off.

Fury X

64 ROPs (was rumored to be, and should have been, 128 ROPs: basically 2x R9 290 dies as one large monolithic die! They never listen to me @amd)

4096 shaders (should have been 5120, since, as I stated above, it would be two 290 dies as one)

256 TMUs (decent, but it needed more; should have been at minimum 320 TMUs, as two 290 dies like the above, or 384 to 512)

4096-bit RAM interface (needed more than 4 GB VRAM)

R9 290 (NON X MODEL)

64 ROPs (provided exactly the same pixel fillrate as a Fury X at the same 1050 MHz core clock)

2560 Shaders

160 TMU's

512 Bit Ram Interface

As it stands, the Fury X only had higher texture fill rates, which WAS NOT ENOUGH to be the high-end card it was portrayed to be :( So yeah, I went to a GTX 1080 after that.
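The fillrate point can be checked with the usual peak-rate formulas: pixel fillrate is ROPs times core clock, and texture fillrate is TMUs times core clock. This is a rough sketch of theoretical peaks only, using the unit counts listed above and assuming both cards run at the 1050 MHz clock the comment mentions (the R9 290 would need to be clocked to that speed); the helper names are made up for illustration.

```python
def pixel_fillrate_gpix(rops: int, clock_mhz: float) -> float:
    """Theoretical peak pixel fillrate in GPixel/s."""
    return rops * clock_mhz / 1000


def texture_fillrate_gtex(tmus: int, clock_mhz: float) -> float:
    """Theoretical peak texture fillrate in GTexel/s."""
    return tmus * clock_mhz / 1000


# Assumption from the comment: both cards compared at the same 1050 MHz clock.
CLOCK_MHZ = 1050

cards = {
    "Fury X": {"rops": 64, "tmus": 256},
    "R9 290": {"rops": 64, "tmus": 160},
}

for name, units in cards.items():
    pix = pixel_fillrate_gpix(units["rops"], CLOCK_MHZ)
    tex = texture_fillrate_gtex(units["tmus"], CLOCK_MHZ)
    print(f"{name}: {pix:.1f} GPixel/s, {tex:.1f} GTexel/s")
```

With equal ROP counts and clocks, the pixel fillrate comes out identical for both cards, and only the texture fillrate differs, which is the complaint being made.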