Raevenlord

News Editor



In an interesting report that lends some credence to earlier reports of AMD's take on the HEDT market, it seems that some Ryzen chips with 12 cores and 24 threads are making the rounds. Building an entire platform for a single processor would always have been ludicrous; now, AMD seems to be readying a true competitor to Intel's X99 and its supposed successor, X299 (though AMD does have an advantage in naming, if its upcoming X399 platform really does ship with that name).

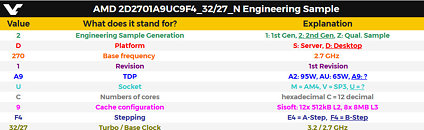

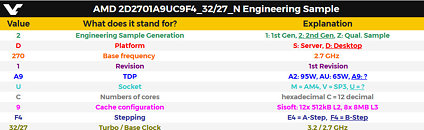

The CPU itself is an engineering sample, coded 2D2701A9UC9F4_32/27_N. Videocardz did a pretty good job of explaining what the nomenclature means, but for now, we do know this sample seems to be running at 2.7 GHz base and 3.2 GHz boost clocks (not too shabby for a 12-core part, but a little on the anemic side when compared to previous reports of a 16-core chip from AMD that would run at 3.1 GHz base and 3.6 GHz boost clocks). What seems strange is the program's report on the available cache. 8x 8 MB (64 MB) is more than double what we would expect, considering that these 12-core parts probably use three CCXs with four cores each, and each CCX features 8 MB of L3 cache. So, 3 CCXs = 3x 8 MB (24 MB), not 8x 8 MB, but this can probably be attributed to a software bug, considering the engineering-sample status of the chip.
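For anyone who wants to check the numbers, here is a rough Python sketch of the arithmetic. It assumes the `_32/27_` field of the sample code holds the boost and base clocks in tenths of a GHz (that matches the clocks quoted above, but it is an assumption, not AMD's documented format), and the helper names `parse_clocks` and `l3_total_mb` are made up for illustration.

```python
import re


def parse_clocks(es_code: str) -> tuple[float, float]:
    """Return (base_ghz, boost_ghz) from an engineering-sample code.

    Assumed layout: a '_BB/AA_' field where BB is the boost clock and
    AA the base clock, both in tenths of a GHz.
    """
    match = re.search(r"_(\d{2})/(\d{2})_", es_code)
    if match is None:
        raise ValueError(f"no clock field found in {es_code!r}")
    boost_ghz = int(match.group(1)) / 10
    base_ghz = int(match.group(2)) / 10
    return base_ghz, boost_ghz


def l3_total_mb(ccx_count: int, l3_per_ccx_mb: int = 8) -> int:
    """Total L3 cache, assuming 8 MB of L3 per CCX."""
    return ccx_count * l3_per_ccx_mb


base, boost = parse_clocks("2D2701A9UC9F4_32/27_N")
expected_mb = l3_total_mb(3)   # 3 CCXs x 4 cores = 12 cores
reported_mb = l3_total_mb(8)   # what the screenshot shows: 8x 8 MB

print(f"base {base} GHz, boost {boost} GHz")
print(f"expected L3: {expected_mb} MB, reported L3: {reported_mb} MB")
```

With three CCXs the expected total comes out at 24 MB, against the 64 MB the software reports, which is where the "more than double" figure comes from.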

View at TechPowerUp Main Site
