Steam Deck Gets the New OS 3.4.6 Update

Valve has rolled out the stable Steam Deck OS 3.4.6 update, which brings the Mesa 23.1 graphics driver, adds support for Vulkan ray tracing, and fixes several earlier issues. The update was anxiously awaited by players of Forza Horizon 5, Resident Evil 4, and the new Wo Long: Fallen Dynasty.

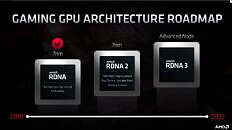

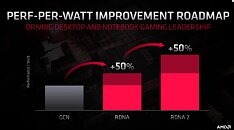

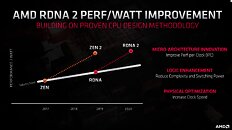

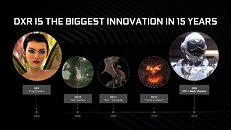

According to the release notes, the update fixes a significant focus issue with Forza Horizon 5. The Mesa 23.1 graphics driver brings both functional and performance fixes. It resolves graphical corruption in Wo Long: Fallen Dynasty and Resident Evil 4, and it adds Vulkan ray tracing support for DOOM Eternal. Unfortunately, there is no word on DXR ray tracing support, although the RDNA 2 GPU certainly has hardware support for it.
