Jul 20th, 2025 11:03 CDT

change timezone

Latest GPU Drivers

New Forum Posts

- What would you buy? (94)

- Windows 11 General Discussion (6157)

- Are UPS lithium LiFePO4 batteries finally as cheap as lead-acid? (43)

- Have you got pie today? (16799)

- What is the latest game you finished or 100% (62)

- What are you playing? (23978)

- No offense, here are some things that bother me about your understanding of fans. (176)

- HELP! Monitor won't turn on after installing new RAM! Now it won't turn on even with the old RAM in place... (14)

- 3DMARK "LEGENDARY" (334)

- The Filthy, Rotten, Nasty, Helpdesk-Nightmare picture clubhouse (2738)

Popular Reviews

- Razer Blade 16 (2025) Review - Thin, Light, Punchy, and Efficient

- Thermal Grizzly WireView Pro Review

- Pulsar X2 Crazylight Review

- AVerMedia Live Gamer Ultra S (GC553Pro) Review

- MSI GeForce RTX 5060 Gaming OC Review

- SilverStone SETA H2 Review

- Upcoming Hardware Launches 2025 (Updated May 2025)

- Sapphire Radeon RX 9060 XT Pulse OC 16 GB Review - An Excellent Choice

- AMD Ryzen 7 9800X3D Review - The Best Gaming Processor

- NVIDIA GeForce RTX 5050 8 GB Review

TPU on YouTube

Controversial News Posts

- Some Intel Nova Lake CPUs Rumored to Challenge AMD's 3D V-Cache in Desktop Gaming (140)

- AMD Radeon RX 9070 XT Gains 9% Performance at 1440p with Latest Driver, Beats RTX 5070 Ti (131)

- NVIDIA Launches GeForce RTX 5050 for Desktops and Laptops, Starts at $249 (127)

- NVIDIA GeForce RTX 5080 SUPER Could Feature 24 GB Memory, Increased Power Limits (115)

- NVIDIA DLSS Transformer Cuts VRAM Usage by 20% (99)

- AMD Sampling Next-Gen Ryzen Desktop "Medusa Ridge," Sees Incremental IPC Upgrade, New cIOD (97)

- NVIDIA Becomes First Company Ever to Hit $4 Trillion Market-Cap (94)

- Windows 12 Delayed as Microsoft Prepares Windows 11 25H2 Update (92)

Friday, February 12th 2016

AMD "Zen" Processors to Feature SMT, Support up to 8 DDR4 Memory Channels

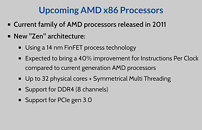

CERN engineer Liviu Valsan, in a recent presentation on datacenter hardware trends, presented a curious-looking slide that highlights some of the key features of AMD's upcoming "Zen" CPU architecture. We know from a recent story that the architecture is scalable up to 32 cores per socket, and that AMD is building these chips on the 14-nanometer FinFET process.

Among the other key features detailed on the slide is simultaneous multithreading (SMT). Implemented for over a decade by Intel as Hyper-Threading Technology, SMT exposes each physical core to software as two logical CPUs, letting the core make better use of its execution resources. The slide also mentions support for up to eight DDR4 memory channels, which could mean that AMD is readying a product to compete with the Xeon E7 series. Lastly, the slide claims that "Zen" could deliver up to 40 percent higher IPC than the current architecture.
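To make the SMT point concrete, here is a minimal Python sketch (not from the slide or the source) that counts logical CPUs versus physical cores from text in the format of Linux's `/proc/cpuinfo`. The sample text and the `count_cpus` helper are hypothetical and trimmed to the three fields that matter; with SMT on, each physical core shows up as more than one `processor` entry.

```python
# Hypothetical, trimmed /proc/cpuinfo-style sample:
# one socket, 2 physical cores, SMT enabled (2 logical CPUs per core).
SAMPLE_CPUINFO = """\
processor\t: 0
physical id\t: 0
core id\t\t: 0

processor\t: 1
physical id\t: 0
core id\t\t: 1

processor\t: 2
physical id\t: 0
core id\t\t: 0

processor\t: 3
physical id\t: 0
core id\t\t: 1
"""


def count_cpus(cpuinfo_text: str) -> tuple[int, int]:
    """Return (logical_cpus, physical_cores) parsed from cpuinfo-style text."""
    logical = 0
    cores = set()
    # Entries are separated by blank lines; each line is "key : value".
    for block in cpuinfo_text.strip().split("\n\n"):
        fields = {}
        for line in block.splitlines():
            key, _, value = line.partition(":")
            fields[key.strip()] = value.strip()
        if "processor" not in fields:
            continue
        logical += 1
        # A physical core is identified by its (socket, core) pair;
        # SMT siblings share the same pair.
        cores.add((fields.get("physical id"), fields.get("core id")))
    return logical, len(cores)


logical, physical = count_cpus(SAMPLE_CPUINFO)
print(f"{physical} cores, {logical} logical CPUs, SMT x{logical // physical}")
# prints "2 cores, 4 logical CPUs, SMT x2"
```

On a live Linux machine you could pass the contents of `/proc/cpuinfo` instead of the sample; x86 kernels report the `physical id` and `core id` fields, but other architectures may omit them.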

Source: HotHardware


130 Comments on AMD "Zen" Processors to Feature SMT, Support up to 8 DDR4 Memory Channels

The fact of the matter is that the companies AMD competes with have bigger budgets and more resources at their disposal. This means that most of the time they will have the upper hand in price, performance, marketing, business deals, partnerships, etc.

This does not mean that AMD can't have their niche and survive, if not flourish, in their segments. It's just that they have been in the negative for so long that at this point they really are struggling to survive. If Jim Keller has worked a miracle with his Zen design, it will be a success on that front. If it fails, it will be 100% AMD's fault. Once the engine gets revved up and these chips start getting onto shelves, they need to do some HEAVY marketing to appeal to the masses; otherwise I'm afraid it won't look good on that balance sheet of theirs.

If you want to be really specific about performance, the AMD dual-GPU card from two generations ago is still the fastest single-"card" solution. If you look past 1080p, the Fury series beats everything but the Titan, and it does beat the Titan in some games. Again, it's exactly what I already posted.

And again, if you would look just a little closer at DX12, you will notice some things.

I am no fanboy; I have run both brands of cards and buy based on value/feature set. My last set of cards was three water-cooled GTX 470s. Those are to this day my favorite cards, followed by my original Ti 4200, which currently holds some of the highest clock speed records ever recorded.

Whatever. Like I said, it's not my point, and I'm absolutely tired of fanboyisms. Don't expect me to reply to any more of them. The same goes for anyone else.

DX12 doesn't matter right now, and probably isn't going to matter for this generation of cards. Tomb Raider is likely the first AAA game we'll see use it, maybe, and it is likely that nVidia GPUs will support every feature it needs.

HBM shouldn't be a marketing bullet when HBM cards are still losing in performance to GDDR5 cards. So does it really make a difference? Are people with the Fury cards going "Hey look, my card is so much better because it has HBM...even though it still performs worse...but it is so much better because HBM!"? And what happens when the next generation of nVidia cards comes out with HBM2 and AMD is still using HBM1? Are you going to say the nVidia card is now better because it uses the better, next-generation form of HBM? Somehow I doubt it. HBM isn't a value-added feature.

The Nano doesn't offer better performance per watt. It is worse than the 980 and ties the 980Ti at 4K, it is worse than the 980Ti and 980 at 1440p, and is worse than the 970, 980, and 980Ti at 1080p.

GCN only manages to close the gap at higher resolutions; it doesn't offer better performance. The Fury X is the best GCN card available, and it merely ties the 980 Ti at 4K, while the factory-overclocked 980 Tis are actually crushing the Fury X with 15%+ better performance at 4K. And since the Fury X's overclocking is lackluster, to say the least, there is no making up that difference by overclocking the Fury X.

And when you look at the Steam survey, you've got almost 60% of users running Windows 7 or 8 and only 35% running Windows 10. So is DX12 going to be a game changer this year? (pun not intended) No, I don't think so.

AMD has dug such a deep hole in the last 10 years, it would take a miracle for them to crawl out of it. Even if they manage to put out something that *beats* Intel and Nvidia on price/performance, Intel and Nvidia can just drop the price on competing products to retain market share, and keep AMD where they are. That would be nice for consumers while it lasts, but it probably isn't going to make AMD profitable. They'd need to keep that going for years.

The only way out that I see is AMD hitting home runs for the next few years to demonstrate that the company has potential, and then merging with a company that has cash to invest. Or is that even possible with the licenses?

Anyway, I never claimed that there's no market for them.

I'm sorry if you're disappointed that your desires and mine don't count for anything with either AMD or Intel.

I also haven't really seen any benchmark showing GDDR5 beating HBM?

Just look at the latest 980Ti Matrix benchmark here on TPU. It beats the Fury X by 17% at 4K. Sure, HBM provides more memory bandwidth than GDDR5, but when the overall card is still slower, what's the point?
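For context on the bandwidth gap being argued about, here is a rough Python sketch of theoretical peak memory bandwidth, computed as bus width in bytes times per-pin data rate. The inputs are the reference specs as commonly published (Fury X: 4096-bit HBM1 at 1 Gbps per pin; reference GTX 980 Ti: 384-bit GDDR5 at 7 Gbps per pin); the Matrix card's memory clock may differ, and the `peak_bandwidth_gbs` helper is hypothetical. Peak bandwidth says nothing by itself about frame rates, which is the commenter's point.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak bandwidth in GB/s: (bus width / 8 bits per byte) x per-pin Gbps."""
    return bus_width_bits / 8 * data_rate_gbps


# Reference specs, assumed for illustration.
fury_x = peak_bandwidth_gbs(4096, 1.0)     # HBM1: very wide bus, low per-pin rate
gtx_980_ti = peak_bandwidth_gbs(384, 7.0)  # GDDR5: narrow bus, high per-pin rate

print(f"Fury X (HBM):   {fury_x:.0f} GB/s")      # 512 GB/s
print(f"980 Ti (GDDR5): {gtx_980_ti:.0f} GB/s")  # 336 GB/s
print(f"Ratio: {fury_x / gtx_980_ti:.2f}x")      # 1.52x
```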

About the article...

It looks like some serious architecture backing up those shiny new 14nm cores: not just low latency but high bandwidth.

Along with DX12, it's like turning a 4-lane highway into a 20-lane one and making the speed limit 100% higher. The async highway.

What I think we will see on the desktop market is:

- Up to 8 Zen cores with SMT, for 16 threads

- Up to 4 DDR4 memory channels

But I don't think they are going to split the market into mainstream and HEDT like Intel does. Instead, I think they will go with some kind of middle ground. So we'll likely see:

- 2-core w/ SMT

- 4-core w/o SMT

- 4-core w/ SMT

- 8-core w/o SMT

- 8-core w/ SMT

I also think we'll see motherboards that look more like the standard ATX boards we are used to with LGA 115x, with only 4 RAM slots, even if the boards support quad-channel DDR4. You just have to populate all 4 slots if you want quad-channel; if you only populate 2 slots, you get dual-channel (with not too big a performance hit, I'm guessing). Of course, I'm sure we'll also see the big players release HEDT motherboards with 8 RAM slots, like the HEDT LGA 2011 boards; the difference will be that they still use the same socket.
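As a back-of-the-envelope check on the dual- versus quad-channel point, here is a small Python sketch of theoretical peak DDR4 bandwidth. Each DDR4 channel is 64 bits (8 bytes) wide, so peak bandwidth is channels x 8 bytes x transfer rate. The DDR4-2400 speed grade and the `ddr4_peak_gbs` helper are assumptions chosen only for illustration; real-world impact depends on the workload and is not modeled here.

```python
DDR4_CHANNEL_BYTES = 8  # each DDR4 channel has a 64-bit data bus


def ddr4_peak_gbs(channels: int, mega_transfers: int) -> float:
    """Theoretical peak bandwidth in GB/s for `channels` DDR4 channels at `mega_transfers` MT/s."""
    return channels * DDR4_CHANNEL_BYTES * mega_transfers / 1000


SPEED = 2400  # assumption: DDR4-2400, for illustration only
for channels in (2, 4, 8):
    print(f"{channels} channels @ DDR4-{SPEED}: {ddr4_peak_gbs(channels, SPEED):.1f} GB/s")
# 2 channels: 38.4 GB/s, 4 channels: 76.8 GB/s, 8 channels: 153.6 GB/s
```

Populating only 2 of 4 slots halves the theoretical peak; whether that shows up in practice is exactly the open question in the comment above.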

And I think that is the key for AMD: no matter what, they have to keep their whole desktop market on the same socket. They can't try to split it up the way Intel does and AMD has done in the past. They tried to split it with Bulldozer, putting the HEDT market on AM3+ and the APU/mainstream desktop market on FM2/FM2+, and it didn't work. AMD has marketed on upgradability in the past, and that is part of what made them a good choice. You could buy an AM2+ or even an AM2 motherboard, and when AM3 processors came out, you didn't have to replace your entire motherboard. When AM3+ came out, you could replace your motherboard and keep your AM3 processor. This allowed people to upgrade in steps instead of needing to replace the motherboard and processor all at once. You could buy a low-end Zen computer, stick one of the cheap processors in it to start, and then, when you save up a little more, upgrade to the 8-core monster.