Tuesday, October 20th 2020

AMD Radeon RX 6000 Series "Big Navi" GPU Features 320 W TGP, 16 Gbps GDDR6 Memory

AMD is preparing to launch its Radeon RX 6000 series of graphics cards, codenamed "Big Navi", and more and more leaks about the upcoming cards keep appearing. Set for an October 28th launch, Big Navi is based on the Navi 21 GPU, which comes in two variants (XT and XL). Thanks to his sources, Igor Wallossek of Igor's Lab has published a handful of details about the upcoming release. In particular, the leak covers the Total Graphics Power (TGP) of the cards and how it is distributed across the board (pun intended). To clarify, TDP (Thermal Design Power) applies only to the GPU chip, or die, and how much thermal headroom it has; it doesn't measure the power of the whole graphics card, which has other heat-producing components as well.

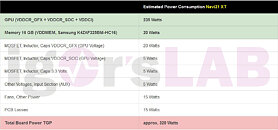

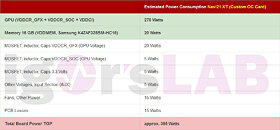

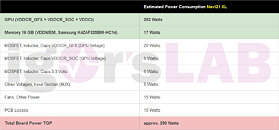

So the breakdown of the Navi 21 XT graphics card goes as follows: 235 W for the GPU alone, 20 W for Samsung's 16 Gbps GDDR6 memory, 35 W for voltage regulation (MOSFETs, inductors, caps), 15 W for fans and other components, and 15 W for PCB losses. This brings the combined TGP to 320 W, showing just how much power (85 W) is used by the non-GPU elements. For custom OC AIB cards, the TGP rises to 355 W, as the GPU alone uses 270 W. The Navi 21 XL variant is rated at 290 W TGP, with the GPU reduced to 203 W and the GDDR6 memory drawing 17 W, which leaves roughly 70 W for the rest of the board, about the same as on the XT. As for memory, AMD uses Samsung's 16 Gbps GDDR6 modules (K4ZAF325BM-HC16). The kit AMD ships to its AIB partners pairs the GPU core with 16 GB of this memory; however, AIBs are free to use different memory as long as it is a 16 Gbps module. You can check the TGP breakdown of each card in the tables for yourself.
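As a quick sanity check of the power figures above, the short Python sketch below adds up the reported per-component numbers. The component labels and variable names are illustrative only. Two assumptions are made: the OC AIB card keeps the same 85 W non-GPU budget as the reference XT, and the Navi 21 XL's 290 W figure covers everything on the board, so its non-GPU, non-memory share is derived by subtraction rather than reported directly.

```python
# Per-component power (watts) for the Navi 21 XT reference design, as reported by Igor's Lab.
navi21_xt = {
    "GPU": 235,
    "GDDR6 memory": 20,
    "Voltage regulation (MOSFETs, inductors, caps)": 35,
    "Fans and other": 15,
    "PCB losses": 15,
}


def total_power(components: dict) -> int:
    """Sum the per-component figures to get the whole-card power."""
    return sum(components.values())


xt_total = total_power(navi21_xt)           # 235 + 20 + 35 + 15 + 15
non_gpu_xt = xt_total - navi21_xt["GPU"]    # everything except the GPU die

# Assumption: a custom OC AIB card only raises the GPU share to 270 W.
oc_total = 270 + non_gpu_xt

# Navi 21 XL: only total, GPU and memory are given; the remainder is derived.
xl_total, xl_gpu, xl_mem = 290, 203, 17
xl_rest = xl_total - xl_gpu - xl_mem        # VRM, fans, PCB losses combined
xt_rest = non_gpu_xt - navi21_xt["GDDR6 memory"]

print(f"Navi 21 XT: {xt_total} W total, {non_gpu_xt} W outside the GPU")
print(f"OC AIB XT:  {oc_total} W total")
print(f"Navi 21 XL: {xl_rest} W for the rest of the board (XT: {xt_rest} W)")
```

The totals come out at 320 W and 355 W, matching the leaked figures, while the XL's remaining board share works out to 70 W against 65 W on the XT, which is why it is only "about" the same.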

Sources:

Igor's Lab, via VideoCardz


153 Comments on AMD Radeon RX 6000 Series "Big Navi" GPU Features 320 W TGP, 16 Gbps GDDR6 Memory

And of course some also want their cards to be quieter, which is nice.

If you're on a tight budget, you buy a lower-tier card and overclock the snot out of it. AMD's cards have typically run very close to their overclocking limits at stock settings, much like Nvidia's 3000 series does now.

I chose to run my 5700XT at 1750MHz and 120W (probably about 150W total board power), and I could afford to leave the fans at minimum speed for silent 4K gaming. At default clocks it would initially boost to about 1950MHz, get hot, and then stabilise at about an 1850MHz game clock over a longer period. Running at 1750MHz instead of 1850MHz is a small, basically negligible performance drop - but it was the difference between loud and silent, which is especially important for an HTPC in a quiet living room using an SFF case with relatively low airflow.

Look, people didn't question it when Nvidia introduced GPU Boost or AMD introduced ULPS, but today nobody knows what the hell these cards are doing. Buildzoid undervolted Pascal to 0.6 V and it still kept going. I'm attributing it to voltage pumps: the GPU is buffering power to counteract vdroop.

The end result is XXX power and people are reducing voltage and at times clocks and performance to do it. I don't get it (the losing performance part), especially if it's several percent/card tier.

I even see it on my Pascal GTX 1080. A 100% power target gets me just as far as 110%, but it runs cooler and quieter and holds a stable boost clock better. At the same time, the FPS gain doesn't scale linearly with the clock speed gain, especially if that clock fluctuates all the time. Boost is great for the extra few hundred MHz it gives, and you keep letting it do that, but the minor gains above that come at a high noise/power cost. This is technically not an undervolt, of course, but it illustrates the point. The bar has been pushed further with the generations after Pascal, closer to the edge of what GPUs can do - note the 3080 2 GHz clock issue and the general increase in power draw across the board. Turing was already hungrier, too, while boosting a bit lower.

I'm sure the next big thing they will try is full-on shader-pipeline interlocking. Look at it this way: first they just decoded serially, then they introduced per-lane intrinsics and lane switching, and next the hardware will decide when and where to power units up and down.

Yes, I think it will come to that. GPUs are getting fully customizable and there is zero benefit to leaving it to the customer. I mean, the workloads are the same and the pipeline is the same, so they have to do something... what better way than to split the instruction pipeline from the shader pipelines and power the shader pipelines only when it covers their cost to run? Every watt saved from static losses is one more available for faster switching.

The VRMs don't even care how much you pull; they just work until temperature kills them.

That would probably be a cheap-to-produce yet powerful solution. It would be a 3070 killer if equipped with 10/12 GB of VRAM and priced at 350/400 bucks (like the 5700 series).

Is there any possibility of that happening?

The entire "RGB LED" industry is a waste of cash. It doesn't add any performance at all, but it costs quite a bit more whilst adding extra software bloat and cable spaghetti.

As you can tell from the current retail market - both of those segments are so successful that they utterly dominate the market and leave almost nothing else available.

Underclocking and undervolting a graphics card is exactly what every laptop manufacturer has ever done. Nvidia went one step further with their Max-Q models and gave people the option to buy far more expensive GPUs than their laptop cooling is capable of, but dialled back to heavily-reduced clocks and TDPs. They sold in their millions, Max-Q was a huge success in the laptop world, despite the high cost.

I think we can agree to disagree because having options on the market is good and more consumer choice is always better than less. At least AMD's graphics driver is an excellent tuning tool for undervolting and underclocking.