Tuesday, October 20th 2020

AMD Radeon RX 6000 Series "Big Navi" GPU Features 320 W TGP, 16 Gbps GDDR6 Memory

AMD is preparing to launch its Radeon RX 6000 series of graphics cards, codenamed "Big Navi", and the leaks about the upcoming cards keep coming. Set to launch on October 28th, the Big Navi GPU is based on the Navi 21 revision, which comes in two variants. Igor Wallossek of Igor's Lab, citing his sources, has published a handful of details about the upcoming graphics cards. More specifically, there are more details about the Total Graphics Power (TGP) of the cards and how it is used across the board (pun intended). To clarify, TDP (Thermal Design Power) applies only to the chip, or die, of the GPU and how much thermal headroom it has; it doesn't measure the power of the whole graphics card, as there are more heat-producing components on the board.

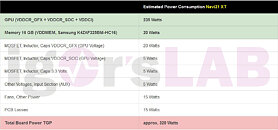

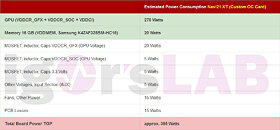

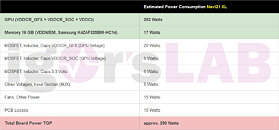

So the breakdown of the Navi 21 XT graphics card goes as follows: 235 Watts for the GPU alone, 20 Watts for Samsung's 16 Gbps GDDR6 memory, 35 Watts for voltage regulation (MOSFETs, inductors, caps), 15 Watts for fans and other components, and 15 Watts lost in the PCB. This puts the combined TGP at 320 Watts, showing just how much power is used by the non-GPU elements. For custom OC AIB cards, the TGP is boosted to 355 Watts, as the GPU alone uses 270 Watts. The cards based on the Navi 21 XL GPU variant use 290 Watts of TGP, as the GPU sees a reduction to 203 Watts and the GDDR6 memory uses 17 Watts. The non-GPU components found on the board use the same amount of power.

When it comes to the selection of memory, AMD uses Samsung's 16 Gbps GDDR6 modules (K4ZAF325BM-HC16). The bundle AMD ships to its AIBs contains 16 GB of this memory paired with the GPU core; however, AIBs are free to use different memory if they want to, as long as it is a 16 Gbps module. You can check the tables to see the TGP breakdown of each card for yourself.
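As a quick sanity check on the figures quoted above, the per-component numbers can be added up in a few lines of Python. This is only a sketch: the dictionary keys and function names are made up for illustration, and the 20 W memory figure for the custom OC cards is an assumption (the article only gives the GPU and total figures for those cards).

```python
# Board-level figures (watts) quoted in the article; per the article they
# are the same for the XT and XL variants.
SHARED_BOARD = {"voltage_regulation": 35, "fans_and_other": 15, "pcb_losses": 15}

# GPU, memory and quoted TGP per card. The memory value for the custom OC
# card is an assumption (taken to be unchanged from the reference XT).
CARDS = {
    "Navi 21 XT": {"gpu": 235, "memory": 20, "quoted_tgp": 320},
    "Navi 21 XT (custom OC)": {"gpu": 270, "memory": 20, "quoted_tgp": 355},
    "Navi 21 XL": {"gpu": 203, "memory": 17, "quoted_tgp": 290},
}


def total_board_power(gpu, memory):
    """Sum the GPU, memory and shared board components."""
    return gpu + memory + sum(SHARED_BOARD.values())


def non_gpu_share(gpu, memory):
    """Fraction of the summed power that is not drawn by the GPU itself."""
    total = total_board_power(gpu, memory)
    return (total - gpu) / total


for name, card in CARDS.items():
    total = total_board_power(card["gpu"], card["memory"])
    share = non_gpu_share(card["gpu"], card["memory"])
    print(f"{name}: summed {total} W, quoted {card['quoted_tgp']} W, "
          f"non-GPU share {share:.1%}")
```

With these inputs the XT and custom OC sums match the quoted 320 W and 355 W. The XL components as listed add up to 285 W, 5 W short of the quoted 290 W; the article does not say where the difference comes from.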

Sources:

Igor's Lab, via VideoCardz

153 Comments on AMD Radeon RX 6000 Series "Big Navi" GPU Features 320 W TGP, 16 Gbps GDDR6 Memory

Power does result in more heat, and more heat is always an issue, inside any case.

Worth considering is that CPU TDPs have been all over the place as well. The net result is you'll be taking a lot more measures than before just to keep a nice temp equilibrium. More fans, higher fan speeds, higher airflow requirements. Current day case design is of no real help either, in that sense. In that way, power increases directly translate to increased purchase price of the complete setup. And that is on top of the mild increase to a monthly (!) energy bill. 3.5 pounds per month... is another 42 pounds per year. Three years of high power GPU versus the same tier of past gen... +126 pounds sterling. 700 just became 826. It's not nothing. It's a structural increase of TCO. And that's not even considering the power/money used for that first 250W we always had.
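The running-cost arithmetic in the comment above can be reproduced with a minimal sketch. The function names are hypothetical, and the inputs (3.5 pounds extra per month, three years, a 700 pound card) are simply the commenter's own figures, not measured values.

```python
def extra_energy_cost(monthly_extra, years):
    """Extra electricity cost over the ownership period."""
    return monthly_extra * 12 * years


def total_cost(purchase_price, monthly_extra, years):
    """Purchase price plus the extra running cost over the same period."""
    return purchase_price + extra_energy_cost(monthly_extra, years)


# The commenter's figures: 3.5 pounds/month, 3 years, 700 pound card.
print(extra_energy_cost(3.5, 1))   # extra cost per year
print(extra_energy_cost(3.5, 3))   # extra cost over three years
print(total_cost(700, 3.5, 3))     # card price plus three years of extra power
```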

Also worth considering is the fact that people desire smaller cases. ITX builds are gaining in popularity. Laptops are a growth market and a larger one than consumer desktops.

So... is power truly a non issue... not entirely then?

You can rest assured a common use case for a GPU is to run it at 100% utilization. Even if that doesn't always translate to 100% of power budget... it's still going to be close.

I'll have to give this a try at the weekend. The 5700XT is faster AND significantly quieter than the 2060S.

Or rather, I should say that the 5700XT I have is quieter than the 2060S I have. I shouldn't make sweeping generalisations since both cards have a wide variety of performance and acoustics depending on which exact model. Still, though, the 5700XT undervolts more gracefully than the 2060S, I guess that's 7nm vs 12nm for you....

But for gamers you are rarely sitting at 100% utilization.

Different games will be mostly at high utilization (because the command queues have something in them constantly), but if you watch the power-usage, it will vary.

To put it another way, I don't want a 400W screamer to run CSGO - I'd rather it become a 200W card in that situation.

Edit: with my 1080, it's almost always 100% load, except for the instances I'm CPU limited. Even if it's not an issue NOW, it WILL be as the cards age.

Much like CPUs, TDPs will start becoming irrelevant, and I believe this is the first move as such, with boosting becoming much more deterministic and transitory.

I don't wanna fight, since I haven't sorted which type of internet Overlord you are, but it is wildly apparent that open-bench type cases do not constitute the bulk of PC users. I agree it doesn't matter in some cases, but those aren't the majority of cases.

Here's a 5700XT of mine, graphed for various things, but at the lowest stable OCCT voltages for each clock:

You buy the product and can run it at any clock and power level you choose as long as it's stable. You can see that in an ideal world, best performance/Watt for this card was ~1375MHz.

AMD sold it at 1850MHz, and it had a much higher TDP and subsequent heat/noise levels than the 12nm TU106 it competed against. That's taking the efficiency advantage of TSMC's 7nm node and throwing it away, and then throwing away even more just to get fractionally higher benchmark scores.

You literally get a slider in the driver where you can undo this dumb decision. What you do with that slider is entirely up to you, it's not going to change how much you paid for the card, only how much you want to trade peace and quiet for performance. Clearly noise is a big problem because quiet GPUs are a big selling point for all AIB vendors, all trying to compete with larger fans at lower RPMs, features like idle fan stop etc. If you have a huge case with tons of low-noise airflow you can afford to buy a gargantuan graphics card that'll dissipate 300W quietly and let the case deal with that 300W problem separately.

If you don't have a high airflow case, or loads of room, such cards may not even physically fit, and the card's own fan noise is irrelevant because it'll dump so much heat into a smaller case that all the other fans in the case ramp up in their attempt to compensate for the additional 300W burden of the graphics card.

I haven't even mentioned electricity cost or the unwanted effect of heating up the room. Those are also valid arguments but not necessary as the noise level created by higher power consumption is enough for me to justify undervolting (and minor underclocking) all by itself.