Tuesday, October 20th 2020

AMD Radeon RX 6000 Series "Big Navi" GPU Features 320 W TGP, 16 Gbps GDDR6 Memory

AMD is preparing to launch its Radeon RX 6000 series of graphics cards, codenamed "Big Navi", and leaks about the upcoming cards keep coming. Set to launch on October 28th, Big Navi is based on the Navi 21 GPU, which comes in two variants. Thanks to his sources, Igor Wallossek of Igor's Lab has published a good deal of information about the upcoming release. More specifically, there are new details about the Total Graphics Power (TGP) of the cards and how that power is distributed across the board (pun intended). To clarify, TDP (Thermal Design Power) applies only to the chip, or die, of the GPU and how much thermal headroom it has. It doesn't measure the power of the whole graphics card, which has other heat-producing components; TGP covers the entire board.

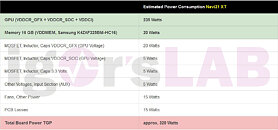

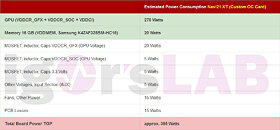

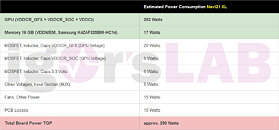

The breakdown for the Navi 21 XT graphics card is as follows: 235 Watts for the GPU alone, 20 Watts for Samsung's 16 Gbps GDDR6 memory, 35 Watts for voltage regulation (MOSFETs, inductors, capacitors), 15 Watts for fans and other components, and 15 Watts lost in the PCB. That brings the combined TGP to 320 Watts, showing just how much power is used by the non-GPU elements. For custom OC AIB cards, the TGP rises to 355 Watts, as the GPU alone uses 270 Watts. Cards based on the Navi 21 XL variant are rated at 290 Watts of TGP, with the GPU reduced to 203 Watts and the GDDR6 memory to 17 Watts. The non-GPU components on the board use the same amount of power.

As for memory, AMD uses Samsung's 16 Gbps GDDR6 modules (K4ZAF325BM-HC16). The bundle AMD ships to its AIBs pairs the GPU core with 16 GB of this memory; however, AIBs are free to use different memory if they want to, as long as it is a 16 Gbps module. You can see the breakdown of the TGP of each card for yourself in the tables.
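To make the arithmetic explicit, here is a minimal sketch that adds up the per-component figures quoted above. The dictionary keys, the `tgp` helper, and the assumption that the XL board reuses the XT's non-GPU figures (as the article states) are illustrative choices, not anything from Igor's Lab's tables.

```python
from typing import Mapping

# Non-GPU board figures (watts) quoted for Navi 21 XT; the article says the
# XL board uses the same amounts. Key names are illustrative only.
NON_GPU_BOARD: Mapping[str, int] = {
    "voltage_regulation": 35,  # MOSFETs, inductors, capacitors
    "fans_and_misc": 15,
    "pcb_losses": 15,
}


def tgp(gpu_w: int, memory_w: int, board: Mapping[str, int] = NON_GPU_BOARD) -> int:
    """Total Graphics Power: GPU die + GDDR6 memory + every other board component."""
    return gpu_w + memory_w + sum(board.values())


# name: (GPU watts, GDDR6 watts, TGP as quoted in the article)
CARDS = {
    "Navi 21 XT (reference)": (235, 20, 320),
    "Navi 21 XT (custom OC)": (270, 20, 355),
    "Navi 21 XL": (203, 17, 290),
}

if __name__ == "__main__":
    for name, (gpu_w, mem_w, quoted) in CARDS.items():
        total = tgp(gpu_w, mem_w)
        # Share of the board's power that does not go to the GPU die itself.
        non_gpu_share = 100 * (total - gpu_w) / total
        print(f"{name}: computed {total} W, quoted {quoted} W, "
              f"non-GPU share {non_gpu_share:.1f}%")
```

The XT figures add up exactly (320 W reference, 355 W custom OC). For the XL, the quoted components sum to 285 W, 5 W short of the stated 290 W, so at least one of the XL's non-GPU entries presumably differs slightly from the XT's in the source tables.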

Sources:

Igor's Lab, via VideoCardz


153 Comments on AMD Radeon RX 6000 Series "Big Navi" GPU Features 320 W TGP, 16 Gbps GDDR6 Memory

But I agree with you... I wish they could have made something less power-hungry. Imagine how amazing it would be if we could get a <200W card that beats the 2080 Ti.

NVIDIA's RTX 3080 is a much more power-efficient design than anything that came before (at 1440p and 4K), as our review clearly demonstrates.

One other metric for discussion, however, is the power envelope. Anyone concerned about energy consumption who wants to reduce overall power draw for environmental or other reasons can always drop a few rungs in the product stack to an RTX 3070, the (as yet hypothetical) RTX 3060, or AMD equivalents, which will certainly deliver even higher power efficiency within a smaller envelope.

We'll have to wait for reviews of other cards in NVIDIA's product stack (not to mention all of AMD's offerings, such as this leaked card), but it seems clear that this generation will deliver higher performance at the same power level as older generations. You may have to drop down the product stack, yes, but if performance is higher within the same envelope, you will get a better-performing RTX 2080 in an RTX 3070, or a better-performing RTX 2070 in an RTX 3060, at the same power envelope, and so on.

These are two different concepts, and I can't agree with anyone talking about inefficiency. The numbers, on a frames-per-watt basis, don't lie.

For me it doesn't matter, as electricity is quite cheap. 1 kWh (a 250W card running for four hours) costs one or two cents over in the States, so 70W extra? We're talking maybe one or two bucks more over the course of a year...
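Whether an extra 70W really costs "one or two bucks" a year depends on daily gaming hours and the local electricity price. The sketch below makes that calculation explicit; the 3 hours per day and both per-kWh prices are illustrative assumptions, not figures from the comment (roughly 13 cents is an assumed ballpark for the 2020 US residential average).

```python
def annual_extra_cost(extra_watts: float, hours_per_day: float, usd_per_kwh: float) -> float:
    """Yearly cost (USD) of drawing `extra_watts` more for `hours_per_day` hours every day."""
    kwh_per_year = extra_watts / 1000 * hours_per_day * 365  # W -> kW, then kWh per year
    return kwh_per_year * usd_per_kwh


if __name__ == "__main__":
    # Assumed inputs: 70 W extra draw, 3 hours of gaming per day.
    for price in (0.02, 0.13):  # assumed USD per kWh
        print(f"${price:.2f}/kWh -> ${annual_extra_cost(70, 3, price):.2f} per year")
```

At those assumptions the extra 70W adds about 77 kWh per year, so the yearly cost scales directly with the per-kWh price: pennies-per-kWh rates give the commenter's one or two dollars, while a rate closer to 13 cents gives roughly ten.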

Really tiring to see all the posts complaining about Ampere high power consumption.

High power consumption is so easy to fix, just drag the Power Limit slider down to where you want it to be (no undervolt/overclock). From TPU numbers, one can expect the 3080 to be 20-25% faster than 2080 Ti when limited to 260W TGP.

It seems like AMD is also clocking the shit outta Big Navi to catch up with Nvidia :roll: , so people can just forget about RDNA2 achieving higher efficiency than Ampere.

That means with a little undervolting I can get similar experience to custom watercooling regarding thermal/noise.

Well, if only there were any 3080/3090 cards available :roll: .