Wednesday, July 4th 2018

AMD Beats NVIDIA's Performance in the Battlefield V Closed Alpha

A report via PCGamesN points to some... interesting performance positioning when it comes to NVIDIA and AMD offerings. Battlefield V is being developed by DICE in collaboration with NVIDIA, but there seems to be some sand in the gears of performance optimization for now. I say this because, according to the report, AMD's RX 580 8 GB (the only red GPU tested) beats NVIDIA's GTX 1060 6 GB... and by a considerable margin at that.

The performance difference in both the 1080p and 1440p scenarios (at Ultra settings) is around the 30% mark and, as has usually been the case, AMD's offerings come out ahead of NVIDIA's once the renderer is switched to DX12: AMD's cards either hold steady or lose some performance under DX12, but NVIDIA's GTX 1060 consistently loses performance. Perhaps we're witnessing some remnants of AMD's old collaboration with DICE? Still, it's too early to cry wolf: performance will likely only improve between now and the October 19th release date.

Source:

PCGamesN

219 Comments on AMD Beats NVIDIA's Performance in the Battlefield V Closed Alpha

Bear in mind that even though consoles tend to sell more copies of a game, publishers make more money per sale on PC because profit margins are much better (no qualification process, distributors take a smaller cut, no need to produce and ship physical media to stores, patches are free to push, etc.).

Neither Unreal Engine 4 nor Unity officially supports D3D12 or Vulkan yet, and the majority of PC games are built on those engines. The reason Vulkan/D3D12 support is sparse is that they are a huge paradigm shift from OpenGL/D3D11: the entire renderer has to be rewritten. I'd argue most games out today that support Vulkan/D3D12 are half-assed implementations of it. It'll be years yet before we see games that fully exploit the technology.

Unity will probably never benefit properly from Direct3D 12 or Vulkan, since the rendering pipeline has to be tailored to the game to fully utilize the potential of the new APIs. Support will probably arrive, but it will suck as much as before.

Yes, we are always promised that AMD hardware is "better", you just don't see it yet. Well, most people waiting for their 2xx/3xx series to unveil its benefits have already moved on, or will when the next NVIDIA cards arrive shortly. :rolleyes:

This is also the problem with purely synthetic benchmarks, and even more so with benchmarks which only measure one tiny aspect of rendering. These benchmarks are just misleading to buyers; what does the average buyer know about "draw calls"? And showcasing an edge case is just ridiculous, especially since dummy draw calls have little to do with what the hardware can do in an actual scene.

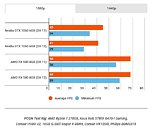

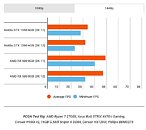

See, PCGamesN and Hardwareluxx were both using processors less powerful than the one used by Sweclockers. PCGamesN was using a Ryzen 2700X while Hardwareluxx used an AMD Threadripper 1950X processor – which is decidedly not clocked for gaming purposes. Sweclockers, on the other hand, used the king of all gaming CPUs: the Core i7-8700K. They even benchmarked the processors to further elaborate on this reasoning:

As you can see, the difference between the Ryzen 7 2700X (which PCGamesN used) and the Core i7-8700K is very significant. In fact, this is probably the sole reason why we see AMD cards pushing ahead of the GTX 1080 Ti against all odds, and why we see the 1080 Ti maintaining a clear lead in the Sweclockers results at the same settings and resolution. In other words, once you remove the CPU bottleneck from the equation, it looks like the GTX 1080 Ti is still king.
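The bottleneck argument above can be sketched with a deliberately simplified model. It assumes each frame costs a fixed amount of CPU time and GPU time, and that the two overlap fully, so whichever is slower sets the frame rate. The function name and all millisecond figures below are hypothetical and illustrative, not measured Battlefield V numbers.

```python
def fps(cpu_ms: float, gpu_ms: float) -> float:
    """Frames per second when the slower of CPU and GPU work per frame sets the pace.

    Simplified, hypothetical model: assumes CPU and GPU work are fully
    pipelined, so the effective frame time is max(cpu_ms, gpu_ms).
    """
    frame_ms = max(cpu_ms, gpu_ms)
    return 1000.0 / frame_ms

# Hypothetical per-frame costs in milliseconds (illustrative only).
slow_cpu_ms = 10.0  # a CPU that needs 10 ms of work per frame
fast_cpu_ms = 6.0   # a faster CPU that needs 6 ms per frame
gpu_a_ms = 7.0      # the faster graphics card
gpu_b_ms = 8.0      # the slower graphics card

# With the slower CPU, both cards are capped at 100 fps: the GPU gap is hidden.
print(fps(slow_cpu_ms, gpu_a_ms), fps(slow_cpu_ms, gpu_b_ms))
# With the faster CPU, the GPU becomes the limit and the gap reappears.
print(round(fps(fast_cpu_ms, gpu_a_ms), 1), fps(fast_cpu_ms, gpu_b_ms))
```

In this pure `max` model a CPU bottleneck can only mask a GPU difference, not reverse it; for one card to actually pull ahead in CPU-bound tests, its driver's CPU overhead would also have to be folded into `cpu_ms` per vendor, which this sketch does not attempt.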

wccftech.com/battlefield-v-closed-alpha-benchmarks/

Why does a minor alpha game benchmark make you start spitting nonsense, pretty please? Why the butthurt on Huang's behalf at all?

techreport.com/review/32766/nvidia-geforce-gtx-1070-ti-graphics-card-reviewed/7

www.hardware.fr/articles/971-9/benchmark-hitman.html

That must come as a shock to you, right? Here's another shock: remember Ashes of the Singularity?

www.hardware.fr/articles/971-11/benchmark-ashes-of-the-singularity.html

www.anandtech.com/show/11987/the-nvidia-geforce-gtx-1070-ti-founders-edition-review/5

Too bad for you, right? Remember Forza 7?

techreport.com/review/32766/nvidia-geforce-gtx-1070-ti-graphics-card-reviewed/3

What's that? The 1060 is 25% faster than the RX 580 in a DX12 game? No way! Let's make this a news piece!

Stay in your land of denial, please.

Mic dropped.

www.purepc.pl/karty_graficzne/test_colorful_igame_geforce_gtx_1070u_chinski_przepis_na_pascala?page=0,15

Although it's splitting hairs as to which one is faster.

Muhhamed is on point here. First and foremost, there are a lot of DX12 games that run really well on NVIDIA. I can't say specifically, but I'd be surprised if, after gathering up all DX12 games on the market, the gap between the 1080 and the V64 was more than +/- 5%. Second, NVIDIA has made reasonable progress optimizing typically AMD-favoring games like Hitman and Doom, to the point that AMD no longer wins clearly in any of them, if it wins at all. Most of that came from reducing CPU overhead, because GeForce cards used to perform worse at launch in games that use DX12 features. Since AMD has a hardware implementation, those games ran fine on its cards at launch. NVIDIA had to take time for optimizations; it took longer in the past, but nowadays they can deal with it pretty quickly.

Move up to 1440p, though, and the 1080 is ahead of the Vega 64 by a small margin, after driver updates:

www.purepc.pl/karty_graficzne/test_nvidia_geforce_gtx_1070_ti_nemezis_amd_radeon_rx_vega_56?page=0,15

Use a newer review and you will find the 1080 is 7% faster at 1080p:

www.purepc.pl/karty_graficzne/test_sapphire_radeon_rx_vega_64_nitro_niereferencyjna_vega?page=0,15

www.anandtech.com/show/11987/the-nvidia-geforce-gtx-1070-ti-founders-edition-review/5

People see a GTX 1060 review. They see it getting 60+ fps in many games. Then they put it in their PC and encounter frame drops to 40 fps, because all reviewers test these cards on one of the fastest CPUs.

No developer implements Async Compute because it's a pain in the ass to get it working and supported on most architectures, and the gains are limited most of the time anyway.