Friday, March 6th 2020

AMD RDNA2 Graphics Architecture Detailed, Offers +50% Perf-per-Watt over RDNA

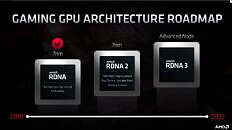

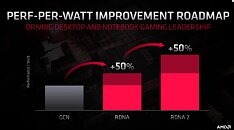

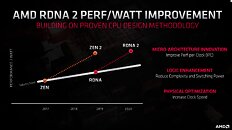

With its 7 nm RDNA architecture that debuted in July 2019, AMD achieved a nearly 50% gain in performance/Watt over the previous "Vega" architecture. At its 2020 Financial Analyst Day event, AMD made a big disclosure: its upcoming RDNA2 architecture will offer a similar 50% performance/Watt jump over RDNA. The new RDNA2 graphics architecture is expected to leverage 7 nm+ (7 nm EUV), which offers up to an 18% increase in transistor density over 7 nm DUV, among other process-level improvements. AMD could tap into this to improve price-performance by serving up more compute units at existing price points, running at higher clock speeds.
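To put the two claimed steps together: if both the Vega-to-RDNA and RDNA-to-RDNA2 gains are taken as exactly 50% (an assumption; AMD said "nearly" for the first step), they compound multiplicatively rather than adding up to 100%. A minimal sketch of that arithmetic, using a hypothetical `compound` helper:

```python
def compound(gains):
    """Multiply fractional per-generation gains (0.5 means +50%) into one overall factor."""
    factor = 1.0
    for gain in gains:
        factor *= 1.0 + gain
    return factor

# Assumption: both generational perf/W gains are taken as exactly +50%.
vega_to_rdna2 = compound([0.5, 0.5])

print(f"RDNA2 perf/W vs. Vega: {vega_to_rdna2:.2f}x")
# Power needed to deliver the same performance, relative to Vega.
print(f"Power for equal performance: {1 / vega_to_rdna2:.0%} of Vega")
```

Under that assumption the sketch prints a 2.25x factor, i.e. roughly 44% of Vega's power for the same performance, rather than a simple doubling.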

AMD has two key design goals with RDNA2 that help it close the feature-set gap with NVIDIA: real-time ray-tracing and variable-rate shading, both of which Microsoft has standardized in DirectX 12 through the DXR and VRS APIs. AMD announced that RDNA2 will feature dedicated ray-tracing hardware on die. On the software side, the hardware will leverage the industry-standard DXR 1.1 API. The company is supplying RDNA2 to next-generation game console manufacturers such as Sony and Microsoft, so it's highly likely that AMD's approach to standardized ray-tracing will have more takers than NVIDIA's RTX ecosystem, which tops up DXR with its own RTX feature-set.

Variable-rate shading is another key feature that has been missing on AMD GPUs. The feature allows a graphics application to apply different rates of shading detail to different areas of the 3D scene being rendered, to conserve system resources. NVIDIA and Intel already implement VRS tier-1 as standardized by Microsoft, and NVIDIA "Turing" goes a step further by also supporting VRS tier-2. AMD didn't detail its VRS tier support.
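As a rough illustration of how VRS conserves resources, the sketch below counts pixel-shader invocations for a frame split into regions, each shaded at one coarse rate (one invocation covering a `rate_x` by `rate_y` block of pixels, in the spirit of the coarse rates DirectX 12 VRS exposes). The frame layout and the `Region` type are hypothetical; real VRS is configured through the graphics API (per draw for tier-1, additionally per primitive or via a screen-space shading-rate image for tier-2), not like this.

```python
from dataclasses import dataclass

@dataclass
class Region:
    """Hypothetical screen region shaded at a single coarse rate."""
    width: int   # pixels
    height: int  # pixels
    rate_x: int  # pixels covered horizontally by one shader invocation
    rate_y: int  # pixels covered vertically by one shader invocation

def invocations(region: Region) -> int:
    """Pixel-shader invocations for one region; a partial coarse block still costs one."""
    cols = -(-region.width // region.rate_x)   # ceiling division
    rows = -(-region.height // region.rate_y)
    return cols * rows

def frame_invocations(regions) -> int:
    return sum(invocations(r) for r in regions)

# Hypothetical 1920x1080 frame: a full-rate 960-pixel-wide centre strip and
# two 480-pixel-wide side strips shaded at 2x2.
full = frame_invocations([Region(1920, 1080, 1, 1)])
vrs = frame_invocations([
    Region(960, 1080, 1, 1),
    Region(480, 1080, 2, 2),
    Region(480, 1080, 2, 2),
])

print(full, vrs, f"{1 - vrs / full:.1%} fewer invocations")
```

In this toy layout, shading half the frame at 2x2 cuts invocations by 37.5%; actual savings depend on the rates an application picks and on what else limits the frame.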

AMD hopes to deploy RDNA2 on everything from desktop discrete client graphics, to professional graphics for creators, to mobile (notebook/tablet) graphics, and lastly cloud graphics (for cloud-based gaming platforms such as Stadia). Its biggest takers, however, will be the next-generation Xbox and PlayStation game consoles, which will also shepherd game developers toward standardized ray-tracing and VRS implementations.

AMD also briefly touched upon the next-generation RDNA3 graphics architecture without revealing any features. All we know about RDNA3 for now is that it will leverage a process node more advanced than 7 nm (likely 6 nm or 5 nm; AMD won't say), and that it will come out some time between 2021 and 2022. RDNA2 will extensively power AMD client graphics products over the next 5-6 calendar quarters, at least.

306 Comments on AMD RDNA2 Graphics Architecture Detailed, Offers +50% Perf-per-Watt over RDNA

....especially in light of the link I just provided?

If we know their Navi/RDNA/7nm is less efficient than Nvidia's now (assuming both of those articles are true), why would Nvidia be worried about maintaining their efficiency lead over AMD GPUs?

Which is more realistic to you for the 50% increase: a new arch with a die shrink, or an updated arch on the same process? I think both will get there; however, Nvidia isn't worried about this.

I realllllly want to see AMD pull the rabbit out of the hat on this one. I want the competition to be richer, and I am craving a meaningful upgrade to my GTX 1080 that has RTRT and VRS. I will buy the most compelling offering from either camp; it just has to be compelling. Really not in the mood for another hot, loud card with coil whine and driver issues. If I can buy a card with 2080 Ti performance or higher for ~$750 USD or less that ticks those boxes, happy days.

Truly AMD, I am rooting for you, do what you did with Zen!

Achieving a 50% efficiency gain on average between Navi 1x and Navi 2x would be a huge achievement, and is fairly unlikely. It's hard to predict the gains from a refined node, but we have seen in the past that refinements can bring good improvements, like Intel's 14nm+/14nm++, though still far from 50%.

And as always, any node advancements will be available to Nvidia as well.

Moore's Law Is Dead

www.youtube.com/channel/UCRPdsCVuH53rcbTcEkuY4uQ

Don't get me wrong, though: I hope RDNA2 is as good as possible. But please don't spread the nonsense these losers on YouTube are pulling out of their behinds. ;)

It's also a 250mm² chip that draws ~225W ;)

Building big chips is not the problem, but building big chips with high clocks would require a much more efficient architecture.

Would you?

I've also heard the RedTagGaming and Gamer Meld YouTube channels, which seem quite excited about RDNA2 based on what their sources have hinted. I'm keeping my expectations conservative, though I have a strong gut feeling RDNA2 is the real deal and not just another Vega-like GPU.

I don't need to write anything down. Thanks for the info and bread crumb trail. :)

Speculation is of course fine, and many of us enjoy discussing potential hardware, myself included, but speculation should be labeled as such, not labeled as "leaks" when it's not. Whenever we see leaks, we should always check whether they pass some basic "smell tests":

- Who is the source, and does it have a good track record? Always check where the leak originates; if it's from WCCFTech, VideoCardz, FudZilla or somewhere random, then it's fairly certainly fake. Random Twitter/forum posts are often fake but can occasionally be true, etc. "Leaks" from official drivers, compilers, official papers, etc. are pretty solid. Some sources are also known to have a certain bias, even though their claims can contain elements of truth.

- Is the nature of the "leak" something that can be known, or is likely to be known, outside a few core engineers? Example: clock speeds are never set in stone until the final stepping exists shortly ahead of a release, so when someone posts a table of CPU/GPU clock speeds 6-12 months ahead, you can know it's BS.

- Is the specificity of the leak something that is sensitive? If the details are only known to a few people under NDA, then those leaking them risk losing their jobs and facing potential lawsuits; how many are willing to do that to serve a random YouTube channel or webpage? What is their motivation?

- Is the scope of the leak(s) plausible at all? Some of these channels claim to have dozens of sources inside Intel/AMD/Nvidia; does a random guy in his basement seriously have such good sources? Some even claim to have single sources who provide sensitive NDA'ed information from both Intel and AMD about products 1+ years away. There is virtually no chance this claim is true, and it is an immediate red flag to me.

Unfortunately, most "leaks" are either educated guesses or pure BS, sometimes a mix of both (intentionally or not). Perhaps you should look back after a product release sometime and evaluate the accuracy and the timeline of the leaks. The general trend is that early leaks are usually only true about "big" features, while early "specific" leaks (clocks, TDP, shader count for GPUs) are usually fake. Then there is usually a spike in leaks around the time the first engineering samples arrive, with various leaked benchmarks, etc., but clocks are still all over the place. Then there is another spike when board partners get their hands on it; accuracy increases a lot at that point, but there is still some variance. Finally, a few weeks ahead of release, we usually get pretty much precise details.

Edit:

Rumors about Polaris, Vega, Vega 2x and Navi 1x have pretty much started out the same way: very unrealistic initially, and then pessimistic close to the actual release. Let's hope Navi 2x delivers, but please don't drive the hype too high.

As for Fudzilla, I would take them a lot more seriously than the two mentioned. Fudzilla used to be part of Mike Magee's group, which wrote for The Inquirer.net (no longer around). Charlie Demerjian of SemiAccurate was also part of Mike Magee's group. My point was that Mike had real industry sources and was well respected in the computer tech industry. I believe he's been retired for years now. So Fudzilla and SemiAccurate may not get it right all the time, but they get pretty close to the actual truth, because no rumor ever comes out 100% accurate. Companies always make last-minute changes to products.

RDNA1 was just about getting a competitive new 7nm hybrid graphics chip out the door and testing the waters with the RDNA1 design. One example: with GCN, 1 instruction is issued every 4 cycles; with this RDNA hybrid, 1 instruction is issued every cycle, making it much more efficient.

RDNA2 is the real deal according to AMD. I believe they will release a 280W max version, with which they will still be able to achieve at least a 25%-40% performance improvement over the RTX 2080 Ti. RDNA2 is an Ampere competitor.
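The GCN-versus-RDNA issue-rate claim in the comment above can be sketched with a toy model, assuming one wavefront issuing back-to-back vector ALU instructions with no stalls: GCN executes a 64-wide wavefront on a 16-lane SIMD over 4 cycles, while RDNA (in wave32 mode, assumed here) executes a 32-wide wavefront on a 32-lane SIMD in one cycle. The RDNA wavefront is half as wide, so this illustrates lower per-instruction latency for a dependent stream rather than a 4x throughput gain. The helper names are hypothetical.

```python
def cycles_per_instruction(wave_size: int, simd_lanes: int) -> int:
    """Cycles one vector instruction occupies a SIMD: wave width / lane count, rounded up."""
    return -(-wave_size // simd_lanes)

def cycles_for_stream(n_instructions: int, wave_size: int, simd_lanes: int) -> int:
    """Cycles for one wavefront to issue n back-to-back vector instructions (toy model)."""
    return n_instructions * cycles_per_instruction(wave_size, simd_lanes)

# GCN: wave64 on a SIMD16; RDNA: wave32 on a SIMD32 (assumed native modes).
gcn = cycles_for_stream(100, wave_size=64, simd_lanes=16)
rdna = cycles_for_stream(100, wave_size=32, simd_lanes=32)
print(f"GCN: {gcn} cycles, RDNA: {rdna} cycles for 100 instructions")
```

The model ignores memory latency, dependency stalls and the scheduling of multiple wavefronts, all of which matter on real hardware.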

Anyway, just getting to 2080 Ti FE speeds from their current 5700 XT flagship takes 46%. To go another 25-40% faster would be a 71-86% increase. Have we ever seen that in the history of GPUs? A 71% increase from previous-gen flagship to current-gen flagship?
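Successive percentage gains compound rather than add. A minimal sketch, taking the 46% figure and the 25-40% range from the comment above as inputs (the `chain` helper is hypothetical):

```python
def chain(*gains_pct):
    """Combine successive percentage gains multiplicatively; returns the overall gain in %."""
    factor = 1.0
    for gain in gains_pct:
        factor *= 1 + gain / 100
    return (factor - 1) * 100

# Inputs from the comment: +46% to reach the 2080 Ti FE, then a further +25% or +40%.
for extra in (25, 40):
    additive = 46 + extra
    compounded = chain(46, extra)
    print(f"+46% then +{extra}%: added = {additive}%, compounded = {compounded:.1f}%")
```

Compounded, a further 25-40% on top of the 46% works out to roughly 82.5-104.4% over the 5700 XT, larger than the 71-86% obtained by simply adding the percentages.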

You've sure got a lot of faith in this architecture, when about the only thing going for it is AMD marketing...

If Ampere comes in like Turing did over Pascal (25%), that's the bottom end of your goal, with their new GPU performing 71% faster than its current flagship. That's a ton, period, not to mention on the same node.

With regard to the 3080 Ti and Big Navi performance numbers, it's all up-in-the-air speculation. Some think RDNA2 (Big Navi) is going to compete with the 2080 Ti, and others believe AMD is targeting the 3080 Ti. In order to target Nvidia's speculative 3080 Ti, AMD will probably look at Nvidia's performance improvements per generation to get an idea of how fast RDNA2 needs to be. I don't think AMD will push it to the limits; I think they focused more on power efficiency and performance efficiency when they designed RDNA2. I know this is marketing, but Micro-Architecture Innovation = Improved Performance-per-Clock (IPC), Logic Enhancement = Reduced Complexity and Switching Power, and Physical Optimizations = Increased Clock Speed.

What do all these enhancements have in common? Gaming consoles.

Agreed.

I have a suspicion that RDNA2 will have a similar effect on the market to what Zen 2 did. And it's a much-needed effect, as we need better competition to help drive reasonable GPU pricing once again.

The improvement they need to make to match Ampere, both in raw performance and ppw (note that this is matching Ampere using last generation's paltry 25% gain; remember they added ray tracing and tensor core hardware), is 71%. That's a ton. Only time will tell, and I hope your glass-half-full attitude pans out, but I'm not holding my breath. I think they will close the gap but fall well short of Ampere's consumer flagship. At best I see it splitting the difference between the 2080 Ti and Ampere, and I think it will end up a lot closer to the 2080 Ti than to Ampere. They have a lot of work to do.

Remember, both AMD and Nvidia touted 50% ppw gains... if both are true, how can AMD catch up?
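Whether equal gains can close a gap is a simple ratio question. The starting point below (Nvidia 20% ahead in perf/W) is a hypothetical normalised figure, not a measurement, and the helper names are hypothetical too. If both vendors improve by the same 50%, the ratio between them does not change, and the trailing vendor needs a larger gain to draw level:

```python
def ratio_after(amd_ppw, nv_ppw, amd_gain, nv_gain):
    """Nvidia-to-AMD perf/W ratio after each vendor applies its own fractional gain."""
    return (nv_ppw * (1 + nv_gain)) / (amd_ppw * (1 + amd_gain))

def gain_needed_to_match(amd_ppw, nv_ppw, nv_gain):
    """Fractional gain AMD would need for parity after Nvidia applies nv_gain."""
    return nv_ppw * (1 + nv_gain) / amd_ppw - 1

# Hypothetical normalised starting point: AMD = 1.0, Nvidia = 1.2 (20% ahead).
amd, nv = 1.0, 1.2
before = nv / amd
after = ratio_after(amd, nv, amd_gain=0.5, nv_gain=0.5)
needed = gain_needed_to_match(amd, nv, nv_gain=0.5)

print(f"ratio before: {before:.2f}, after equal +50% gains: {after:.2f}")
print(f"AMD gain needed to match: {needed:.0%}")
```

With these assumed inputs the ratio stays at 1.20, and AMD would need about an 80% gain to reach parity with a 50%-improved Nvidia.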

Edit: ah, I see you edited in the 2070 as the comparison. Your power draw number is still a full 20W too low though.