Monday, March 18th 2019

NVIDIA to Enable DXR Ray Tracing on GTX (10- and 16-series) GPUs in April Drivers Update

NVIDIA's customary GTC keynote ended mere minutes ago, and at nearly three hours it was one of the longer ones. There were some fascinating demos and features, especially in robotics and machine learning, as well as new hardware for AI and cars, including the all-new Jetson Nano. It would be fair to say, however, that the vast majority of the keynote targeted developers and researchers, as is usually the case at GTC. Still, one announcement in between caught us by surprise, and it is no doubt a pleasant update for most of us here at TechPowerUp.

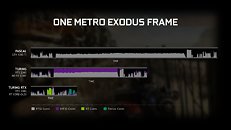

Following AMD's claims about software-based real-time ray tracing in games, and Crytek's Neon Noir real-time ray tracing demo running on both AMD and NVIDIA GPUs, it makes sense in hindsight that NVIDIA would bring rudimentary DXR ray tracing support to older hardware that lacks RT cores. In particular, a driver update due next month will enable DXR on 10-series Pascal graphics cards (GTX 1060 6 GB and higher), as well as on the newly announced GTX 16-series Turing GPUs (GTX 1660, GTX 1660 Ti). The announcement comes with a caveat: people should not expect RTX-level results (think fewer rays traced, and possibly no secondary/tertiary effects), and this DXR mode will only be supported in the Unity and Unreal engines for now.

NVIDIA says DXR will not perform the same on Pascal GPUs as on the new Turing GTX cards, primarily because the Pascal microarchitecture can only run ray tracing calculations in FP32, which is slower than what the Turing GTX cards can do with a combination of FP32 and INT32 calculations. Both will still be slower and less capable than hardware with RT cores, which is to be expected. An example of this performance deficit is seen below for a single frame from Metro Exodus, showing the three different feature sets in action.

Dragon Hound, an MMO title NVIDIA showed off at CES, will be among the first games, if not the very first, to enable DXR support on the aforementioned GTX cards. It will be followed by games that already support NVIDIA RTX, including Battlefield V and Shadow of the Tomb Raider, as well as synthetic benchmarks such as Port Royal. General incorporation into the Unity and Unreal engines will help a lot going forward, and NVIDIA also mentioned that it is working with more partners across the board to get DXR support going.
Time will tell how well these implementations turn out and how large the performance deficit will be, and we will be sure to examine DXR in the usual level of detail TechPowerUp is trusted for. For now, though, we can all agree that this is a welcome move that greatly increases the number of devices capable of some form of real-time ray tracing, which in turn should encourage game developers to focus on implementation now that there is a larger market.

113 Comments on NVIDIA to Enable DXR Ray Tracing on GTX (10- and 16-series) GPUs in April Drivers Update

The current real-time push that Nvidia (somewhat confusingly) branded as RTX is not a thing in itself; it is an evolution of all the previous work. RT cores and the operations they perform were not chosen randomly; Nvidia has extensive experience in the field. While you keep lambasting Nvidia for RTX, the APIs for RTRT seem to be taking a pretty open route. DXR is part of DX12, open for anyone to implement. Vulkan has Nvidia-specific RTX extensions, but there is a discussion about whether something more generic is needed.

Nobody has claimed RT or RTRT cannot be done on anything else. Any GPU or CPU can do it; it is simply a question of performance. So far, tests including DXR and OptiX on Pascal, Volta and Turing suggest RT cores do provide a considerable boost to RT performance.
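To illustrate the point that ray tracing is ultimately plain arithmetic any processor can execute, here is a minimal sketch of the well-known Möller–Trumbore ray/triangle intersection test in pure Python. It is a hypothetical illustration only, not NVIDIA's DXR fallback path or anything from the announcement; the function and parameter names are our own. A real renderer runs this kind of test (plus acceleration-structure traversal) millions of times per frame, which is exactly the workload RT cores are built to speed up.

```python
from typing import Optional, Tuple

# Hypothetical helper types/functions for illustration; not from any GPU API.
Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_triangle_hit(origin: Vec3, direction: Vec3,
                     v0: Vec3, v1: Vec3, v2: Vec3,
                     eps: float = 1e-7) -> Optional[float]:
    """Möller–Trumbore test: return distance t along the ray, or None on a miss."""
    edge1 = sub(v1, v0)
    edge2 = sub(v2, v0)
    pvec = cross(direction, edge2)
    det = dot(edge1, pvec)
    if abs(det) < eps:
        return None  # ray is parallel to the triangle's plane
    inv_det = 1.0 / det
    tvec = sub(origin, v0)
    u = dot(tvec, pvec) * inv_det  # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    qvec = cross(tvec, edge1)
    v = dot(direction, qvec) * inv_det  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(edge2, qvec) * inv_det
    return t if t > eps else None  # ignore hits behind the ray origin
```

For example, a ray fired from `(0, 0, -1)` along `+z` at a triangle lying in the `z = 0` plane returns a hit distance of 1.0, while the same ray pointed along `-z` misses.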

The best thing in RT ever produced.

Brought to us, by the #leatherman.

Thank you so much for your insight!

Unfortunately, it is somewhat misplaced. The context of the argument was whether RTX for GTX cards was something nVidia had likely prepared for GDC, as opposed to something nVidia had to pull out of its back pocket to address the thunder from the Crytek demo.

Development at NVDA is not done randomly, got it.

Not least because they are led by, you know, Lisa's uncle.

Because it is not obvious that a "more generic" (if that's what we call open standards these days) ray tracing standard is needed, or for some even more sophisticated reason?

The bait is so obvious, the only reason he's not on my ignore list is for entertainment purposes.

However, I completely agree with the rest of that assessment.

And I don't agree. RTX is here to stay. We don't know for how long: 2, 3, 5 years? For a few years it'll cement Nvidia's supremacy. That's all they want; they already won the race for market share and profits.

By 2025 GPUs will be twice as fast, mainstream PCs will have PCIe 5.0 and 10-16 cores. At that point CPUs could take some of the RTRT workload (and they're much better at it).

RTX makes two more big wins for Nvidia probable. Imagine RTRT sticks and people learn to like it. Imagine AMD doesn't make a competing ASIC.

Datacenter and console makers will think twice before they choose AMD as the GPU supplier for next gen streaming/console gaming.

Ehm, just by transitioning to D3D12 and abandoning D3D11, they can spend far less time optimizing for that 10%+ down the road. Time is money.

What does DXR offer that saves money? Nothing? It isn't fully real-time ray traced, which is what would save them money. Because so little hardware can even run it, not supporting D3D11/D3D12/Vulkan is out of the question in DXR games.

In a decade or two, sure. Not until then. Gamers want 1440p, 4K, higher framerates, HDR, and finally ray tracing. Until an ASIC is developed and integrated into GPUs to do ray tracing at 4K in real time with negligible framerate cost, it will not become mainstream, and not for a decade after those products start launching.

Using RadeonRays/OpenCL? Makes sense, seeing how AMD doesn't technically support DXR yet.

IMO, the period after next-gen consoles come out and devs stop supporting current-gen consoles is when we will see a shift.

Crytek shows Ray Tracing running on Vega - NV allow Ray Tracing on Pascal cards.

So pathetic. :D

My opinion/prediction/hope is that Crytek showed this tech in order to be more relevant in the console market. They can now offer a way for the next console to run ray tracing on AMD hardware (hint hint) and make it a huge selling point (the new 4K, if you will). Now Unreal Engine has also added ray tracing. RT was never going to take off on the PC until consoles could also adopt it. Several people have declared it dead because a console wouldn't be able to ray trace for another 10 years, but apparently all it needs is a Vega 56-equivalent GPU to get 4K/30 at what looks to be slightly below current-gen console quality geometry and textures. This should definitely be obtainable at the next-gen release. And if this ends up being the case, then as a PC enthusiast I benefit.

Raytracing is better suited for professional software than games and that's not going to change for a long time. If the change of substrates leads to a huge jump in performance (e.g. graphene and terahertz processors) at little cost, then we could see raytracing flood in to take advantage of all that untapped potential...and that's at least a decade out too.

The devs would be better off focusing on the gameplay, story and atmosphere of the game instead of wasting time on this nonsense.

AMD also has the option of integrating RTRT via a CPU ASIC, perhaps a chiplet dedicated to it. Maybe part of the reason for the "more cores" push is this kind of thing, making the CPU + GPU more balanced in graphics workloads. A 7nm (and lower) chiplet would be far more economical than adding the same die area to a monolithic GPU die. With e.g. Infinity Fabric (IF), chiplets could also be put on graphics cards.

IMO this is the way things will start to go, the only down-side is we may end up with even more SKUs. ;)

Thinking further out, I wonder whether chiplets (on cards) could allow for a user-customisable layout, i.e. you buy a card with maybe 8 or 16 sockets on it, and into these you install modules depending on your workload. Do you want rasterisation, RT, GPGPU, a custom ASIC for some blockchain or AI etc.? Put on the power you need for the task. It would also allow gradual upgrades and customisation on a per-game level (to a point; it would be a hassle to swap chips every time you change games), i.e. when a new engine comes out, you may need to "rebalance" your "chip-set" <G>.

Inventory stems from the crypto crash, and that's common knowledge you also possess. Stop manipulating. They already reported $1.4B in October 2018.

Nvidia has warehouses full of small Maxwell and Pascal chips they struggle to move. It'll most likely be written off - hence the drop in stock lately.

And it seems you haven't looked into AMD's financial statement lately.

They have $0.8B inventory (12% of FY revenue). So it's not exactly better than Nvidia's situation (14% of FY revenue).

And AMD's inventory is likely mostly GPUs while revenue is CPU+GPU.

Does it make sense to you?

Citation needed.