Monday, March 18th 2019

NVIDIA to Enable DXR Ray Tracing on GTX (10- and 16-series) GPUs in April Drivers Update

NVIDIA's customary GTC keynote ended mere minutes ago, and at nearly three hours it was one of the longer ones. There were some fascinating demos and features shown off, especially in robotics and machine learning, as well as new hardware for AI and cars, including the all-new Jetson Nano. It would be fair to say, however, that the vast majority of the keynote targeted developers and researchers, as is usually the case at GTC. Something did come up in between, though, that caught us by surprise and will no doubt be a pleasant update for most of us here on TechPowerUp.

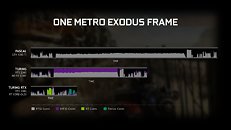

Following AMD's claims about software-based real-time ray tracing in games, and Crytek's Neon Noir real-time ray tracing demo running on both AMD and NVIDIA GPUs, it makes sense in hindsight that NVIDIA would bring rudimentary DXR ray tracing support to older hardware that lacks RT cores. In particular, a driver update due next month will enable DXR support on 10-series Pascal-microarchitecture graphics cards (GTX 1060 6 GB and higher), as well as on the newly announced GTX 16-series Turing-microarchitecture GPUs (GTX 1660, GTX 1660 Ti). The announcement comes with a caveat: do not expect RTX-class results (think fewer rays traced, and possibly no secondary or tertiary effects), and this DXR mode will only be supported in the Unity and Unreal game engines for now.

NVIDIA says DXR mode will not perform the same on Pascal GPUs as on the new Turing GTX cards, primarily because the Pascal microarchitecture can only run ray tracing calculations in FP32, which is slower than what the Turing GTX cards can do by combining FP32 and INT32 calculations. Both will still be slower and less capable than hardware with RT cores, which is to be expected. An example of this performance deficit is shown below for a single frame from Metro Exodus, with the three different feature sets in action.

Dragon Hound, an MMO title NVIDIA previously showed off at CES, will be among the first games, if not the very first, to enable DXR support on the aforementioned GTX cards. It will be followed by other titles that already have NVIDIA RTX support, including Battlefield V and Shadow of the Tomb Raider, as well as synthetic benchmarks such as Port Royal. General incorporation into the Unity and Unreal engines will help a lot going forward, and NVIDIA also mentioned that it is working with more partners across the board to get DXR support going.
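NVIDIA's point about Pascal being limited to FP32 while Turing combines FP32 and INT32 work can be illustrated with a deliberately simplified issue-rate model. This is a sketch under stated assumptions, not NVIDIA's analysis: it assumes one instruction per clock per lane, no memory stalls, that Pascal-style hardware must issue FP32 and INT32 instructions through the same path (so their counts add), and that Turing-style hardware can issue them side by side (so, ideally, the longer stream sets the time). The instruction counts are hypothetical.

```python
# Toy issue-rate model for a ray tracing shader that mixes FP32 and INT32
# instructions. Assumptions (hypothetical, for illustration only):
#   - one instruction per clock per lane, no memory or cache stalls
#   - "serial" hardware issues FP32 and INT32 through one shared path
#   - "concurrent" hardware has separate FP32 and INT32 pipes


def cycles_serial(fp_ops: int, int_ops: int) -> int:
    """Pascal-style: FP32 and INT32 instructions take turns, so they add up."""
    return fp_ops + int_ops


def cycles_concurrent(fp_ops: int, int_ops: int) -> int:
    """Turing-style: both pipes issue in parallel, so the longer stream wins."""
    return max(fp_ops, int_ops)


if __name__ == "__main__":
    # Hypothetical instruction mix for one ray's worth of work.
    fp, it = 100, 36
    serial = cycles_serial(fp, it)
    concurrent = cycles_concurrent(fp, it)
    print(f"serial: {serial} cycles, concurrent: {concurrent} cycles, "
          f"speedup: {serial / concurrent:.2f}x")
```

Real ray traversal code is also bound by memory access, which this model ignores, so treat the ratio as an upper bound on the benefit of concurrent integer execution rather than a prediction.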
Time will tell how well these implementations go and how large the performance deficit will be, and we will be sure to examine DXR in the usual level of detail TechPowerUp is trusted for. But for now, we can all agree that this is a welcome move that greatly increases the number of devices capable of some form of real-time ray tracing, which in turn should encourage game developers to focus on implementation now that there is a larger market.

113 Comments on NVIDIA to Enable DXR Ray Tracing on GTX (10- and 16-series) GPUs in April Drivers Update

they enabled it because Crytek showed off ray tracing on an AMD GPU

How convenient for NVIDIA to turn some feature on/off via a driver update on billions of GPUs worldwide, from some cubicle in an office

I'm not saying RTRT is a gimmick. It definitely adds to the quality of the graphics but it is a mixture of ray tracing and rasterization.

The reason for enabling DXR is simple; it reminds me of something @Vayra86 said in another thread:

Edit:

Just look at that Metro comparison slide:

www.nvidia.com/content/dam/en-zz/Solutions/geforce/news/geforce-rtx-gtx-dxr/geforce-rtx-gtx-dxr-one-metro-exodus-frame.png

The Turing RTX result on that slide is clearly a marketing stunt (not directly comparable, due to the lower resolution), but RT cores are very clearly a huge boost.

If you're like me and can't stand noise (crawling surfaces) at all, then these implementations of raytracing are all downgrades.
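The noise complaint ties back to the article's caveat about fewer rays being traced on GTX cards. Ray-traced effects are Monte Carlo estimates, and the noise of such an estimate falls only with the square root of the number of samples, so quadrupling the rays per pixel merely halves the noise. The sketch below shows this for a hypothetical soft-shadow pixel whose true light visibility is 50%; the scene, the visibility value, and the function names are illustrative assumptions, not anything from a real renderer.

```python
import math
import random


def shadow_estimate(spp: int, rng: random.Random, visibility: float = 0.5) -> float:
    """Estimate one pixel's light visibility from `spp` shadow rays.

    Hypothetical scene: each shadow ray independently reaches the light
    with probability `visibility`, so each ray is a Bernoulli sample.
    """
    hits = sum(1 for _ in range(spp) if rng.random() < visibility)
    return hits / spp


def pixel_noise(spp: int, trials: int = 2000, seed: int = 0) -> float:
    """Standard deviation of the estimate across many independent frames."""
    rng = random.Random(seed)
    samples = [shadow_estimate(spp, rng) for _ in range(trials)]
    mean = sum(samples) / trials
    return math.sqrt(sum((s - mean) ** 2 for s in samples) / trials)


if __name__ == "__main__":
    for spp in (1, 4, 16, 64):
        # For a Bernoulli estimator the theoretical noise is sqrt(p * (1 - p) / spp).
        theory = math.sqrt(0.5 * 0.5 / spp)
        print(f"{spp:3d} rays/pixel: measured {pixel_noise(spp):.3f}, theory {theory:.3f}")
```

This is why low ray counts tend to be paired with denoising or temporal accumulation: brute-forcing the noise away costs four times the rays for every halving.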

We're a very, very, very long way from taking AAA games released today, deleting all of the old lighting models and replacing them completely with real-time raytracing from light sources. In twenty years, maybe, we'll be raytracing games released a decade ago (like Crysis) in real time. Not doing low-resolution raytraced shadows and then drowning them out with anti-aliasing like this garbage does.

Something interesting from Nvidia's article on this: www.nvidia.com/en-us/geforce/news/geforce-gtx-ray-tracing-coming-soon/

Note the FPS changes from using RT cores. Port Royal obviously gets a huge boost, Metro gets a really large boost, Shadow of the Tomb Raider gets a noticeable boost and Battlefield's boost is... small. It is not surprising, but it is good to see actual data on this. The difference comes down to two things: how much of the frame time goes to ray tracing, and what is needed to set up ray tracing and how it is done. I wish we had detailed data for each of them, at least in the same form as the Metro frame-time slide.
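The observation that the boost depends on how much of the frame goes to ray tracing is essentially Amdahl's law: only the ray tracing share of the frame time gets faster on RT cores, while everything else (including setup work that the cores do not accelerate) stays the same. A minimal sketch, with hypothetical inputs rather than NVIDIA's measurements:

```python
def fps_with_rt_speedup(base_fps: float, rt_fraction: float, rt_speedup: float) -> float:
    """Amdahl's-law estimate of the frame rate after accelerating ray tracing.

    base_fps    -- frame rate with ray tracing done in shaders (no RT cores)
    rt_fraction -- share of that frame time spent on accelerable RT work (0..1)
    rt_speedup  -- how many times faster that share runs on RT cores
    """
    if not 0.0 <= rt_fraction <= 1.0:
        raise ValueError("rt_fraction must be between 0 and 1")
    if rt_speedup <= 0:
        raise ValueError("rt_speedup must be positive")
    frame_ms = 1000.0 / base_fps
    # The non-RT part is unchanged; only the RT part shrinks by rt_speedup.
    new_ms = frame_ms * ((1.0 - rt_fraction) + rt_fraction / rt_speedup)
    return 1000.0 / new_ms


if __name__ == "__main__":
    # Hypothetical: same 30 FPS baseline and same 5x RT-core speedup,
    # but games that spend different shares of the frame on ray tracing.
    for share in (0.2, 0.5, 0.8):
        print(f"RT share {share:.0%}: {fps_with_rt_speedup(30.0, share, 5.0):.1f} FPS")
```

With these made-up numbers, a game that spends 20% of its frame on ray tracing gains only about 19%, while one spending 80% nearly triples its frame rate, which matches the spread between titles described above without needing any per-game detail.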

And, thank you so much, Crytek.

Hehe, decisions, decisions, right?

Huang's choices at this point:

1) Pretty fast DXR on non-RTX cards => leads to "WTF NVDA, did you fool us?"

2) Pretty slow DXR on non-RTX cards => leads to "WTF NVDA, who the f*ck needs this shit?"

#poorhuang

#leatherdudes

Tech has been in their pockets for a while. Pascal Quadros do raytracing in various forms (OptiX, mostly). Titan V had DXR support from the beginning and was the primary development vehicle for it. They needed to move the tech to consumer drivers, test it and wait for a good time to release. Big event, fairly decent DXR implementation in Metro; it seems the time was now.

TL;DR: DXR strikes me as something that's just groundwork for engine developers to dabble with. It's not something game developers should waste their time on. And most won't, unless they get gifts.

Also, DXR is a D3D12 extension, which means it should work on any D3D12-capable GPU via shader emulation. NVIDIA has not enabled RTX on RTX-less cards; they have enabled the software emulation of a D3D12 feature, DXR.

Crytek has not implemented DXR; they have a custom algorithm for ray-traced reflections.

This has nothing to do with Crytek. Crytek (speaking of the demo) has nothing to do with DXR. This is all about enabling a feature which should work by definition and either be accelerated in HW or emulated via shaders.
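"Emulated via shaders" means that work RT cores do in fixed-function hardware, chiefly BVH traversal and ray/triangle intersection tests, has to run as ordinary shader arithmetic instead. As a rough illustration of that per-ray arithmetic, here is the well-known Möller-Trumbore ray/triangle test in plain Python. This is a sketch of one common technique, not a claim about what NVIDIA's driver actually runs, and it leaves out BVH traversal entirely; the helper names are illustrative.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_triangle(origin: Vec3, direction: Vec3,
                 v0: Vec3, v1: Vec3, v2: Vec3,
                 eps: float = 1e-9) -> Optional[float]:
    """Moller-Trumbore test: distance t along the ray to the hit, or None."""
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:            # ray is parallel to the triangle's plane
        return None
    inv_det = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv_det       # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(direction, q) * inv_det  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv_det      # distance along the ray to the hit point
    return t if t > eps else None  # ignore hits behind the ray origin


if __name__ == "__main__":
    tri = ((-1.0, -1.0, 0.0), (1.0, -1.0, 0.0), (0.0, 1.0, 0.0))
    print(ray_triangle((0.0, 0.0, -1.0), (0.0, 0.0, 1.0), *tri))  # hit at t = 1.0
    print(ray_triangle((5.0, 5.0, -1.0), (0.0, 0.0, 1.0), *tri))  # miss: None
```

A frame needs this kind of test many times per ray as it walks the acceleration structure, for millions of rays, which is why running it on general-purpose shader cores costs so much more than running it on dedicated hardware.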

Anyway, this is a great way to tease the technology and boost adoption rates. Overall what's coming out now is looking a whole lot more like actual industry effort, broad adoption and multiple ways to attack the performance problem. The one-sided RTX approach carried only by a near-monopolist wasn't healthy. This however, looks promising.

So nVidia spent years upon years "doing something with RT" to come up with glorious (stock market crashing :))))) RTX stuff that absolutely had to use RTX cards, because, for those too stupid to get it: it has that R in it! It stands for "raytracing". Unlike GTX, where G stands for something else (gravity perhaps)

So they come up with that ultimate plan to bend customers over in a 360°+ manner, with shiny expensive RTX cards absolutely needed to experience that ungodly goodness brought to you by the #leatherman.

Did I say "years"? Right, so years of development to bring you that RTX thing.

But then, there is a twist. Sometime in January, the #leatherman smokes something unusual and asks his peasants whether it wouldn't be even cooler if that whole R thing somehow worked with G things.

And engineers kinda say "kinda yes", but finance officers say "kinda no, because less monez".

Surely, Crytek RT stealing RTX thunder => "no no no, wait, we can also do it on non RTX cards" is just a coincidence, it is more than obvious.

For the said savings to work, one should not have to do 2 versions of the game.

RT must become a mass-market product for devs to embrace it en masse, and not for "gifts".

If our chips were fast enough to do full RT in games (like the ray tracing used to render CGI movies), you wouldn't need a GPU.

CPUs are more suited to ray tracing in general. It's just that they're usually optimized for other tasks (low latency, etc.).

I doubt it.

The time you've wasted writing that post could have been spent reading a few paragraphs of this:

en.wikipedia.org/wiki/Ray_tracing_(graphics)